What is AI Infrastructure? Definition + how-to-build guide

Artificial intelligence (AI) is transforming how businesses operate, automating workflows, enhancing customer experiences, and generating real-time insights. But without the right AI infrastructure (or AI stack), even the most advanced AI models can’t deliver meaningful results.

From performance to efficiency to security, AI infrastructure is key to ensuring businesses can effectively deploy, scale, and optimize their AI-powered applications.

Whether you're a CTO, AI engineer, or business leader exploring AI, this article will help you understand how to build an AI infrastructure that drives innovation and real-world impact without sacrificing scalability, security, privacy, or performance.

AI infrastructure definition and overview

AI infrastructure refers to the hardware, software, and systems that support artificial intelligence and machine learning (ML) applications. Reliable infrastructure has the computing power, storage, and data pipelines necessary to train, deploy, and operate AI solutions efficiently.

Just like traditional IT infrastructure supports websites and enterprise software, AI infrastructure is built to handle the unique demands of machine learning, deep learning, and large-scale data processing.

With reliable infrastructure, AI applications can process massive datasets and learn from real-time inputs. Without it, AI projects may stall due to slow training times, data bottlenecks, or systems that can’t scale.

Benefits of AI infrastructure

Enterprises that invest in AI infrastructure are better positioned to move faster, scale smarter, and unlock real business value from AI. Here’s why:

Scalability

As AI adoption grows, businesses need infrastructure that keeps up. With scalable, cloud-based AI infrastructure, businesses can dynamically allocate resources based on real-time demand, avoid service slowdowns, and support growth without overbuilding their systems.
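To make "dynamically allocate resources based on real-time demand" concrete, here is a minimal Python sketch of a proportional scaling rule modeled on the formula the Kubernetes Horizontal Pod Autoscaler documents (desired replicas = ceil(current replicas × current metric / target metric)). The function name, the utilization metric expressed in whole percentages, and the replica bounds are illustrative assumptions, not any specific platform's API.

```python
import math

def desired_replicas(current: int, observed_pct: int, target_pct: int,
                     min_replicas: int = 1, max_replicas: int = 20) -> int:
    """Proportional scaling rule, clamped to [min_replicas, max_replicas]."""
    if current < 1 or target_pct <= 0:
        raise ValueError("current must be >= 1 and target_pct must be > 0")
    # Scale the replica count by how far observed utilization is from the target.
    raw = math.ceil(current * observed_pct / target_pct)
    return max(min_replicas, min(max_replicas, raw))

# 4 inference replicas at 90% utilization against a 60% target: scale out.
print(desired_replicas(4, 90, 60))  # 6
# Demand drops to 15%: scale in.
print(desired_replicas(4, 15, 60))  # 1
```

Production autoscalers also apply tolerances and cooldown windows so capacity does not flap on short spikes; this sketch leaves those out.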

Reduced long-term costs

Building AI infrastructure requires upfront investment, but over time it is usually more cost-effective than scaling AI on outdated or inflexible systems. Purpose-built infrastructure helps teams use the right tools from the start and maximize ROI.

Data privacy and compliance

AI infrastructure provides the tools and architecture needed to secure data, including encryption, access controls, audit logging, and environment isolation. It also supports the compliance workflows required by regulations like GDPR and HIPAA.
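As a rough sketch of how access controls and audit logging fit together, the snippet below checks a role-based permission and records every decision, allowed or denied, as a structured log entry. The roles, permission strings, and in-memory log are hypothetical; a production system would load policy from configuration and write audit entries to tamper-resistant, append-only storage.

```python
import json
from datetime import datetime, timezone

# Hypothetical role-to-permission policy (illustration only).
ROLE_PERMISSIONS = {
    "data_scientist": {"read:training_data"},
    "ml_engineer": {"read:training_data", "deploy:model"},
    "support_agent": set(),
}

audit_log: list[str] = []  # stand-in for durable, append-only audit storage

def authorize(user: str, role: str, action: str) -> bool:
    """Check a role-based permission and record the decision either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "allowed": allowed,
    }))
    return allowed

print(authorize("ana", "data_scientist", "deploy:model"))  # False
print(authorize("raj", "ml_engineer", "deploy:model"))     # True
print(len(audit_log))                                      # 2
```

Logging denied requests as well as granted ones is what makes the trail useful for compliance reviews.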

Improved performance and reliability

AI workloads are resource-intensive and often time-sensitive. Without the right infrastructure, response times lag, services slow down, and model output becomes inconsistent. Well-designed AI infrastructure helps keep latency low and systems running smoothly.
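Latency is easiest to manage when it is measured as percentiles rather than averages, because a few slow requests can hurt users while barely moving the mean. The sketch below computes nearest-rank percentiles over a hypothetical set of response times and checks the 95th percentile against an assumed 300 ms target.

```python
import math

def percentile(samples_ms: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    if not samples_ms or not 0 < p <= 100:
        raise ValueError("need at least one sample and 0 < p <= 100")
    ordered = sorted(samples_ms)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]

# Hypothetical response times (ms) for one inference endpoint.
latencies = [120, 95, 110, 105, 980, 101, 99, 130, 115, 108]
SLO_P95_MS = 300  # assumed latency target

p50 = percentile(latencies, 50)
p95 = percentile(latencies, 95)
print(p50, p95, p95 <= SLO_P95_MS)  # 108 980 False
```

The median is 108 ms, yet a single 980 ms outlier sets the p95, so the endpoint misses the assumed target even though most requests are fast.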

Better collaboration across teams

AI infrastructure creates a shared foundation for teams to build, train, and deploy AI models together. With the proper infrastructure, developers, engineers, and data scientists can collaborate more efficiently and move AI projects forward with fewer bottlenecks.

Faster innovation

Training AI models requires massive compute power because of large datasets, complex model architectures, and the need for repeated experimentation. If the infrastructure is underpowered or poorly optimized, model training can take weeks or fail entirely. With the right AI infrastructure, businesses can shorten that cycle considerably, in some cases moving from idea to deployment in days.
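A back-of-the-envelope estimate shows why compute capacity drives the gap between weeks and days. The sketch below uses a widely cited rule of thumb that training a dense transformer takes roughly 6 × parameters × training tokens floating-point operations, then divides by the cluster's effective throughput. The model size, token count, 300 TFLOP/s per-accelerator peak, and 40% utilization are illustrative assumptions, not benchmarks of any real hardware.

```python
SECONDS_PER_DAY = 86_400

def training_days(params: float, tokens: float, num_accelerators: int,
                  peak_flops: float, utilization: float) -> float:
    """Rough wall-clock training time from the ~6 * N * D FLOPs heuristic."""
    total_flops = 6 * params * tokens
    effective_flops_per_sec = num_accelerators * peak_flops * utilization
    return total_flops / effective_flops_per_sec / SECONDS_PER_DAY

# Assumed inputs: 7B parameters, 1T tokens, 300 TFLOP/s peak, 40% utilization.
for n in (8, 512):
    days = training_days(7e9, 1e12, n, 300e12, 0.4)
    print(n, "accelerators:", round(days, 1), "days")
```

Under these assumptions, 8 accelerators need roughly 506 days while 512 need roughly 8. Real runs vary with parallelism efficiency, memory limits, and hardware failures, so treat the result as an order-of-magnitude guide.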

Seamless integration across systems

AI applications often rely on multiple data sources and tools, from CRMs to analytics platforms to cloud storage. Scalable, flexible infrastructure makes it easier to integrate these systems so AI models can access the data they need to deliver accurate, context-aware results.

Learn more: Why enterprise-grade infrastructure is key in the AI agent era

Reinvent CX with AI agents

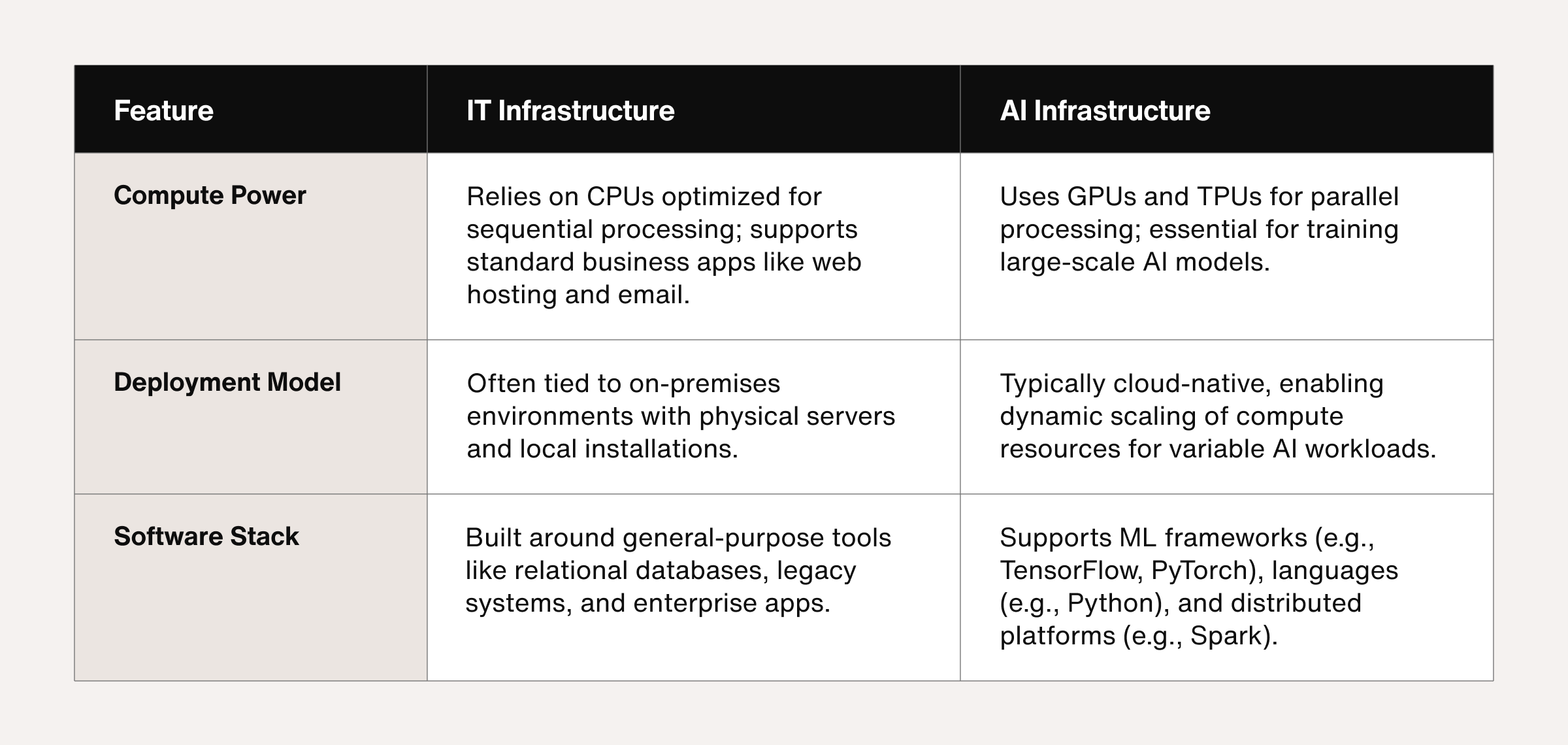

AI infrastructure vs. IT infrastructure

Most businesses already have traditional IT infrastructure, including servers, databases, networking, and cloud storage. However, AI infrastructure is fundamentally different because it’s built to support the computing and data demands of machine learning and deep learning.

Understanding the difference between AI infrastructure and IT infrastructure can help businesses avoid common bottlenecks.

Compute power

IT infrastructure relies on CPUs (central processing units) optimized for sequential processing to handle standard business applications such as web hosting, email servers, and databases.

AI infrastructure, however, uses high-performance GPUs and TPUs, which process thousands of computations in parallel. This is essential for training AI models that require large-scale matrix calculations.
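The parallelism point is easiest to see in matrix multiplication. Each cell of the output depends only on one row of the first matrix and one column of the second, so all cells can be computed at the same time. The pure-Python sketch below splits the work into independent row tasks. It only illustrates the decomposition: CPython threads will not actually speed up this arithmetic, whereas a GPU runs thousands of such independent computations simultaneously in hardware.

```python
from concurrent.futures import ThreadPoolExecutor

def row_times_matrix(row, b):
    """Compute one output row: it needs one row of A and all of B, nothing else."""
    cols = len(b[0])
    return [sum(row[k] * b[k][j] for k in range(len(row))) for j in range(cols)]

def matmul_parallel(a, b, workers=4):
    """C = A @ B with each output row computed as an independent task."""
    if len(a[0]) != len(b):
        raise ValueError("inner dimensions must match")
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in input order, so rows land in the right place.
        return list(pool.map(lambda row: row_times_matrix(row, b), a))

A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(matmul_parallel(A, B))  # [[19, 22], [43, 50]]
```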

Cloud-native vs. on-premises deployment

IT infrastructure is often tied to on-premises environments, such as physical servers, local data centers, and software installed on individual machines.

AI infrastructure, by contrast, tends to be cloud-native. This enables businesses to scale compute resources up or down on demand. That flexibility is key for AI workloads, which often involve fluctuating processing needs and real-time performance requirements.

AI-optimized software stacks

Another key difference lies in the software stack. IT infrastructure is built around general-purpose tools like relational databases, legacy systems, and business applications.

AI infrastructure supports a more specialized toolkit. This includes machine learning frameworks like TensorFlow and PyTorch, programming languages like Python and Java, and distributed computing platforms like Apache Spark or Hadoop.

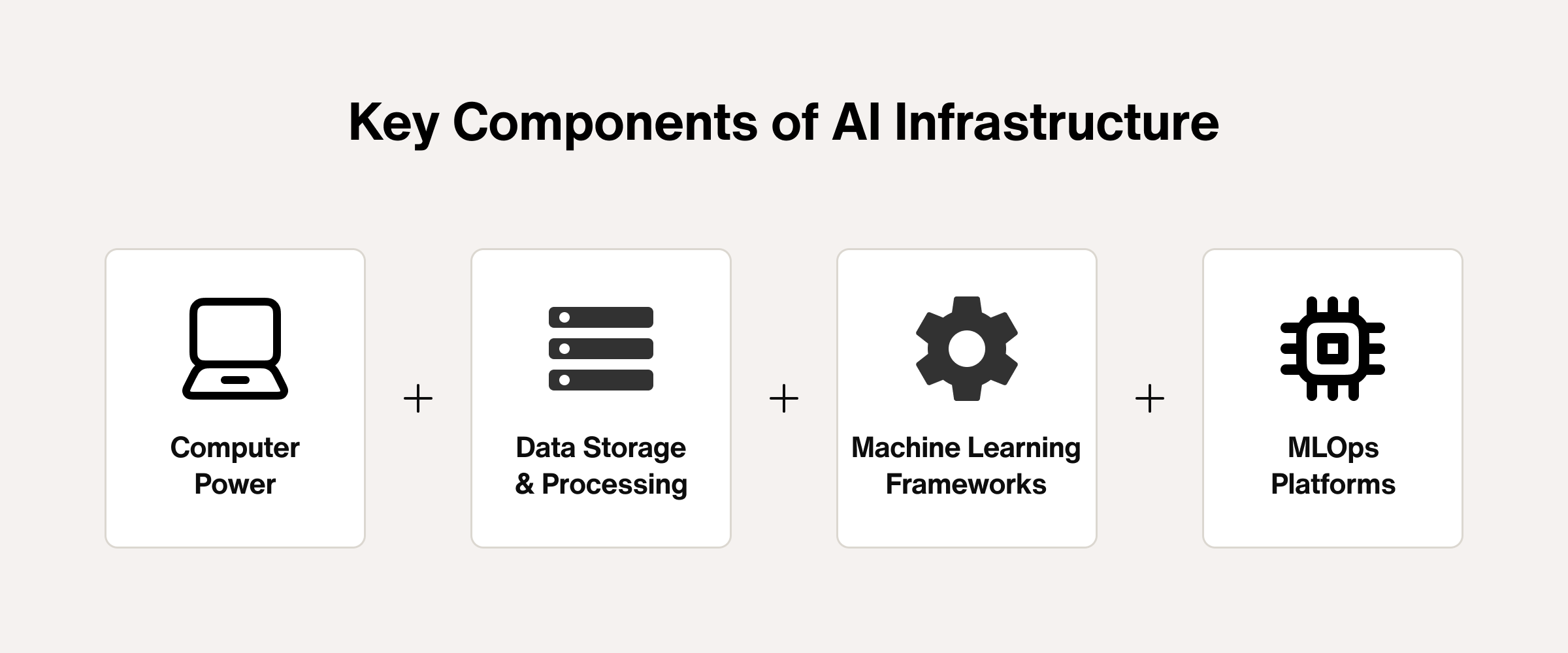

How AI infrastructure works

Rather than a single tool or platform, AI infrastructure is a stack of connected components that work in tandem to support end-to-end machine learning workflows.

Here's a breakdown of the key components:

1. Compute resources

Training and deploying AI models is computationally intensive. That’s why AI infrastructure includes specialized processors built for parallel workloads:

GPUs (Graphics Processing Units): Ideal for training deep learning models due to their ability to process many calculations simultaneously. Widely used across industries for high-performance AI computing.

TPUs (Tensor Processing Units): Google's custom-built accelerators, designed to handle the tensor operations at the heart of deep learning. Known for high throughput and energy efficiency in large-scale training tasks.

2. Data storage and processing

AI models need large volumes of data to learn patterns, make predictions, and improve over time. That means businesses need scalable and reliable storage solutions to manage structured and unstructured data, whether in the cloud, on-premises, or hybrid.

Teams rely on data processing libraries like Pandas, NumPy, and SciPy to prepare data for model training. These tools clean, transform, and format raw data so it's ready for use in training pipelines.
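The kind of cleanup these libraries handle can be sketched in plain Python so it runs without extra dependencies. The snippet below drops an incomplete row, normalizes inconsistent text, casts types, and removes an exact duplicate from a hypothetical customer-message export. In Pandas, the same steps map roughly onto `read_csv`, `dropna`, `str.strip().str.lower()`, `astype`, and `drop_duplicates`.

```python
import csv
import io

# Hypothetical raw export: a duplicate row, a missing value, inconsistent casing.
RAW = """customer_id,channel,messages
101, Chat ,12
102,email,
101, Chat ,12
103,SMS,7
"""

def clean(raw_csv: str) -> list[dict]:
    """Drop incomplete rows, normalize text, cast types, and de-duplicate."""
    seen = set()
    rows = []
    for rec in csv.DictReader(io.StringIO(raw_csv)):
        if not rec["messages"].strip():  # drop rows with a missing value
            continue
        row = {
            "customer_id": int(rec["customer_id"]),
            "channel": rec["channel"].strip().lower(),  # normalize categories
            "messages": int(rec["messages"]),
        }
        key = tuple(row.values())
        if key in seen:  # drop exact duplicates
            continue
        seen.add(key)
        rows.append(row)
    return rows

print(clean(RAW))
# [{'customer_id': 101, 'channel': 'chat', 'messages': 12}, {'customer_id': 103, 'channel': 'sms', 'messages': 7}]
```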

3. Machine learning frameworks

Machine learning frameworks simplify model development and accelerate experimentation and deployment. Frameworks like TensorFlow and PyTorch offer pre-built components for data handling, model architecture, and training loops, making it easier for engineers to get AI projects off the ground.

These frameworks also support different learning paradigms (supervised, unsupervised, and reinforcement learning) and are often optimized to run directly on GPU or TPU compute environments.
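To see what these frameworks take off engineers' hands, here is a complete training loop written without one: a linear model fit by gradient descent on toy data assumed to follow y = 2x + 1. Frameworks like PyTorch and TensorFlow compute gradients automatically, batch the data, and run the same kind of loop on GPUs or TPUs; here every step is spelled out by hand.

```python
# Toy data generated from y = 2x + 1 (an assumption for illustration).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]

w, b = 0.0, 0.0  # model parameters
lr = 0.05        # learning rate
n = len(xs)

for epoch in range(2000):
    # Gradients of mean squared error with respect to w and b.
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    # Plain gradient-descent update.
    w -= lr * grad_w
    b -= lr * grad_b

print(round(w, 3), round(b, 3))  # 2.0 1.0
```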

4. Machine learning operations (MLOps) platforms

Once a model is trained, it needs to be deployed, monitored, and continuously improved. This is where MLOps comes in. MLOps platforms help automate and manage the lifecycle of AI models, from data ingestion to training to deployment and monitoring.

With tools like MLflow and Kubeflow, teams can streamline experimentation, maintain model version control, and monitor production performance over time. For enterprise AI infrastructure, MLOps is essential for ensuring repeatability, governance, and scalability.
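The version-control idea behind MLOps tools can be sketched in a few lines. The toy registry below assigns sequential version numbers, keeps each version's evaluation metrics, and allows only one production version at a time. The class and method names are hypothetical and deliberately simplified; they are not the MLflow or Kubeflow APIs, which also track artifacts, lineage, and approvals.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class ModelVersion:
    version: int
    metrics: dict
    stage: str = "staging"

@dataclass
class ModelRegistry:
    """Toy in-memory registry: model name -> list of versions, oldest first."""
    versions: dict = field(default_factory=dict)

    def register(self, name: str, metrics: dict) -> ModelVersion:
        history = self.versions.setdefault(name, [])
        mv = ModelVersion(version=len(history) + 1, metrics=dict(metrics))
        history.append(mv)
        return mv

    def promote(self, name: str, version: int) -> None:
        history = self.versions[name]
        if not 1 <= version <= len(history):
            raise ValueError(f"unknown version {version} for {name}")
        # Archive the current production version so only one serves traffic.
        for mv in history:
            if mv.stage == "production":
                mv.stage = "archived"
        history[version - 1].stage = "production"

    def production(self, name: str) -> Optional[ModelVersion]:
        return next((mv for mv in self.versions.get(name, []) if mv.stage == "production"), None)

registry = ModelRegistry()
registry.register("intent-classifier", {"f1": 0.81})
registry.register("intent-classifier", {"f1": 0.86})
registry.promote("intent-classifier", 2)
print(registry.production("intent-classifier"))
```

Because every version keeps its metrics, rolling back is just promoting an earlier version number.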

Automate customer service with AI agents

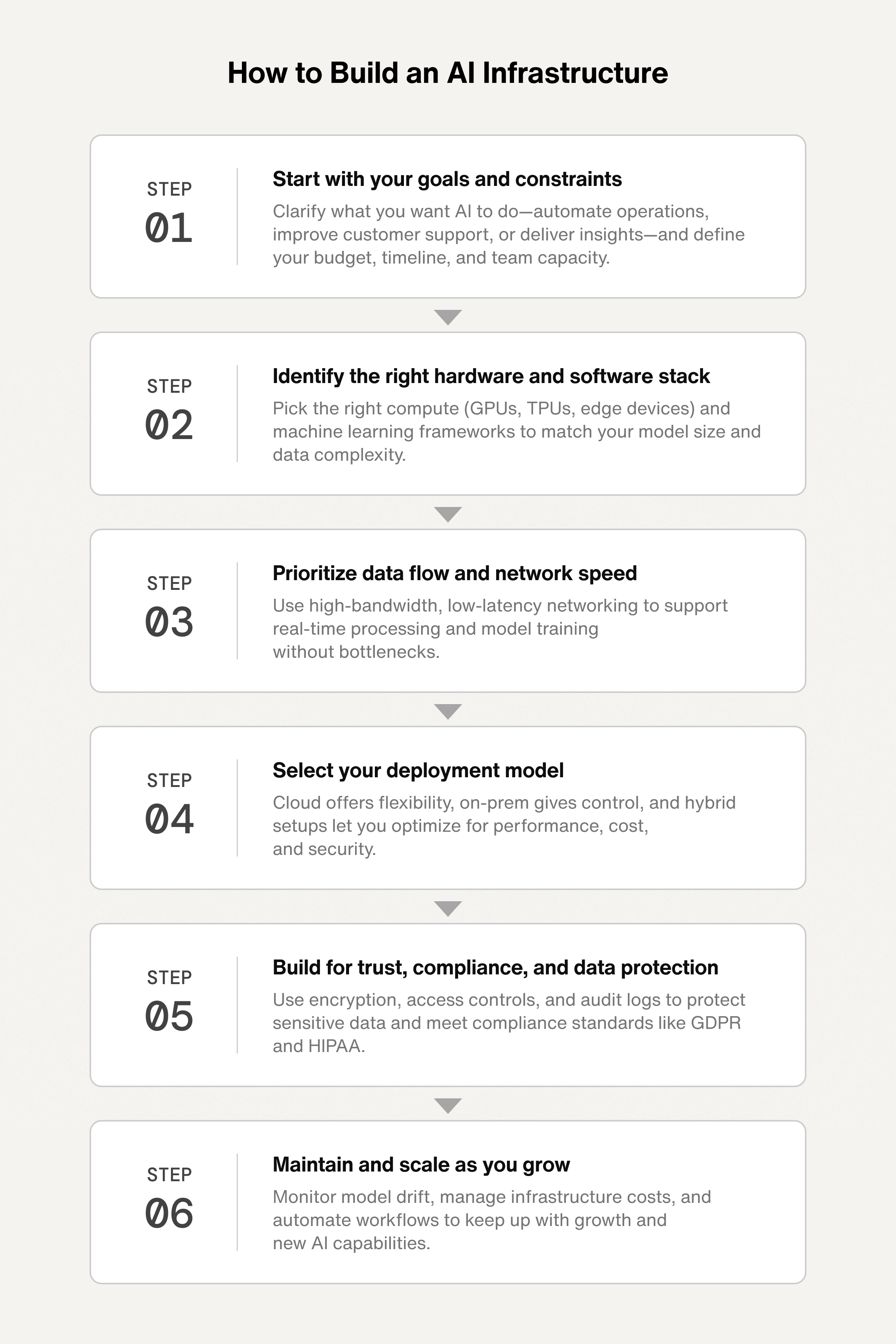

How to build an AI infrastructure

AI infrastructure isn’t a one-size-fits-all solution. The right setup depends on business goals, AI workload demands, and available resources.

Whether you're a startup training small AI models or an enterprise running an AI workforce, building a scalable AI infrastructure requires careful planning.

Here’s a step-by-step guide to designing and implementing an AI-ready infrastructure.

1. Start with your goals and constraints

Define what you want to achieve with AI and how much you're prepared to invest. Are you optimizing customer engagement, automating operations, or enabling new product features?

Understanding the core use case will shape the technical requirements and prevent over-investing. Budget, timeline, team capacity, and strategic priorities should all guide infrastructure decisions from day one.

2. Identify the right hardware and software stack

Building effective AI infrastructure means selecting the right tools for your needs. That includes compute options (GPUs, TPUs, or edge devices) as well as machine learning frameworks and data libraries that match your model complexity and scale. The key is to choose components that align with your goals and can grow with your AI workload over time.
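One practical way to match hardware to model scale is to estimate memory before choosing accelerators. The sketch below computes how much memory a model's weights alone occupy at common numeric precisions, using a hypothetical 7-billion-parameter model. It deliberately ignores activations, inference caches, and training state such as gradients and optimizer moments, which can multiply the requirement several times during training.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}

def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory for model weights only, in decimal gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

for dtype in ("fp32", "fp16", "int8"):
    print(f"{dtype}: {weight_memory_gb(7e9, dtype):.0f} GB")
```

At fp16 the weights alone need about 14 GB, so they would not fit on a single accelerator with less memory than that unless the model is quantized or split across devices.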

3. Prioritize data flow and network speed

AI infrastructure depends on fast, reliable data flow between compute, storage, and applications. Without it, even the most advanced models can stall. High-bandwidth, low-latency networks help ensure your AI systems can process and respond to data in real time. Consider private or dedicated networking options for sensitive workloads to enhance performance, security, and control.
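Network bandwidth sets a hard floor on how quickly data can reach compute. The sketch below estimates the time to move a dataset over links of different speeds. The 1 TB dataset size is illustrative, and the `efficiency` parameter is an assumed knob for protocol overhead and contention (1.0 means the ideal line rate).

```python
def transfer_seconds(size_bytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Time to move data over a link; efficiency < 1 models overhead and contention."""
    bits = size_bytes * 8
    return bits / (link_gbps * 1e9 * efficiency)

ONE_TB = 1e12  # decimal terabyte
for gbps in (1, 10, 100):
    hours = transfer_seconds(ONE_TB, gbps) / 3600
    print(f"{gbps:>3} Gbps: {hours:.2f} h")
```

At the ideal line rate, 1 TB takes about 2.2 hours on a 1 Gbps link but under 2 minutes on 100 Gbps, a difference that compounds when training jobs repeatedly stream data from storage.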

4. Select your deployment model

Your infrastructure can live in the cloud, on-premises, or across both. Cloud-based infrastructure offers flexibility and scalability, while on-premises infrastructure gives you more control over performance, security, and cost. A hybrid model combines the two: for example, you can train models in the cloud and then serve them on on-premises systems for speed and security.

5. Build for trust, compliance, and data protection

AI systems often rely on sensitive data, which means compliance is essential. From GDPR to HIPAA, ensure that your infrastructure supports encryption, access controls, audit trails, and other safeguards. Designing for privacy from the beginning helps mitigate risk and build user trust.

6. Maintain and scale as you grow

Once deployed, AI infrastructure needs ongoing maintenance. Monitor model drift, system performance, and infrastructure cost. Automate where possible and refine workflows as your team and data scale. A strong foundation now makes it easier to adopt new AI capabilities later.
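Model drift is often tracked by comparing the distribution of live inputs with the training data. One common metric is the Population Stability Index, PSI = Σ (actual share - expected share) × ln(actual share / expected share), summed over histogram bins. The sketch below computes it for hypothetical bin counts; the small floor for empty bins and the alert thresholds mentioned afterward are conventions, not fixed standards.

```python
import math

def psi(expected_counts: list[int], actual_counts: list[int], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions of one feature."""
    if len(expected_counts) != len(actual_counts):
        raise ValueError("bin counts must align")
    e_total, a_total = sum(expected_counts), sum(actual_counts)
    score = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_pct = max(e / e_total, eps)  # floor avoids log(0) for empty bins
        a_pct = max(a / a_total, eps)
        score += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return score

# Hypothetical histograms of one input feature: training data vs. recent traffic.
training = [250, 250, 250, 250]
live = [100, 200, 300, 400]
print(round(psi(training, live), 3))  # 0.228
```

A commonly cited rule of thumb treats PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift worth investigating, and above 0.25 as significant drift that may call for retraining.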

AI infrastructure is the foundation for what comes next

Whether you’re deploying AI to streamline customer service, drive personalization, or unlock real-time insights across departments, your AI infrastructure determines how far and how fast you can go.

That’s why infrastructure isn’t just a technical concern. It’s a strategic one.

At Sendbird, we provide an AI agent platform and enterprise-grade messaging infrastructure that powers intelligent, real-time engagement. We can help you design AI agents that are interoperable across tools and departments. These agents carry data and context wherever they go, connecting systems, streamlining operations, and helping businesses turn AI strategy into action.
