AI security: A best practices guide to mitigate risk and build secure AI systems

What is AI security?

AI security refers to the use of artificial intelligence (AI) to enhance the security posture of an organization.

In an era marked by increasingly frequent, dynamic, and harmful cyberattacks, AI offers a powerful set of tools that can automate threat detection, prevention, and remediation—helping to strengthen defenses against cyberattacks and data breaches.

AI can enhance cybersecurity in many ways. Using machine learning (ML) and deep learning, AI tools can analyze massive datasets in real time—such as user behavior, network activity, app usage—to uncover patterns and set a security baseline. When deviations occur, AI flags them as anomalies for immediate investigation.

Generative AI, powered by large language models (LLMs), can translate complex data into natural language insights, helping security teams to streamline decision-making. The latest addition is agentic AI—autonomous AI agents that continuously monitor environments, detect threats, and respond proactively in real time to neutralize risks across systems.

AI-driven security practices have been shown to significantly enhance threat detection and incident response. According to the Ponemon Institute, 70% of cybersecurity professionals say AI is highly effective at detecting threats that previously would have gone unnoticed. Additionally, research by IBM shows the cost associated with experiencing a data breach is 33% lower among organizations that extensively use AI security and automation.

Given these benefits, AI security is seeing increased investment across industries. According to Statista, the market for AI in cybersecurity is expected to grow considerably in coming years, from over $30 billion in 2024 to $134 billion by 2030.

Securing AI from cyberattacks

AI security can also be defined as the process of securing AI from cyber threats. AI systems introduce a new set of vulnerabilities and security risks into SecOps—both expanding the threat surface and enhancing existing cyberattack techniques with automation.

For example, a recent automated cyber attack deceived a Chevrolet dealership’s generative AI chatbot into offering a new Tahor for $1. AI models also present new vectors for threat actors, including AI supply chain attacks or input manipulation.

As AI becomes more embedded in enterprise operations, cybersecurity professionals must consider a dual security posture: one that not only uses AI to strengthen defenses, but also secures AI itself.

AI for security: Using machine learning, deep learning, generative AI, and agentic AI to detect, prevent, and respond to cyber threats faster and more intelligently.

Security for AI: Applying robust security practices to protect AI systems from attacks, manipulation, or misuse.

This article focuses on how to use AI to enhance cybersecurity. However, it also outlines AI’s potential vulnerabilities and the best practices for protecting AI systems.

Why is AI security important?

The digital attack surface is expanding rapidly. Between the cloud sprawl, hybrid environments, edge computing, connected devices, and now AI-driven systems—all introduce new vulnerabilities. Adversaries also use AI themselves. For example, using LLMs to craft more convincing phishing attacks, or automating attacks with machine learning.

Meanwhile, organizations face shortages of cybersecurity talent, with 63% reporting one or more unfilled cybersecurity roles in 2024. Cyberattacks are increasingly frequent and costly as well. According to one study, the average costs of a data breach is now $4.5 million, on top of any costs associated with operational disruption and reputational damage.

AI can help with cybersecurity. By automating routine threat detection and response tasks, AI helps security teams stay ahead of increasingly sophisticated attacks and catch threat actors in real time. Using various AI technologies from machine learning to agentic AI, organizations can continuously monitor operations to identify and proactively remediate attacks in real. This includes a range of critical cyberthreats, from malware attacks to repeated login attempts to brute force attacks.

"AI is at a definitive crossroads — one where policymakers, security professionals and civil society have the chance to finally tilt the cybersecurity balance from attackers to cyber defenders."

Sundar Pichai, CEO of Google

Security challenges of AI security

For all its benefits, AI introduces a novel set of security challenges, particularly around data security. AI models and systems rely heavily on their training data, making them vulnerable to data manipulation, poisoning, or adversarial attacks. These new threats can lead to inaccurate outputs, false positives, and skew decision-making, exposing organizations to risks of non-compliance and reputational damage.

Threat actors also increasingly use AI to exploit security vulnerabilities and enhance cyberattacks. For example, since 2022, there’s been a 967% increase in phishing attacks that use ChatGPT to launch more credible attacks that bypass traditional email filters. This has created a new strain for security teams. In one survey, 74% of IT security professionals said their organizations are experiencing a significant impact from AI-powered threats.

Harness proactive AI customer support

Benefits of AI security

AI offers a set of significant advantages when it comes to cybersecurity defenses. These include:

Enhanced threat detection: AI can continuously analyze huge data streams in real time to detect potential cyber threats with greater speed and accuracy. Agentic AI goes further, acting autonomously to mitigate threats by isolating devices, revoking access, or triggering protocols. AI can also identify sophisticated attack vectors that traditional security measures can miss.

Faster incident response: AI tools reduce the time needed to identify and respond to security incidents, helping organizations to address threats more quickly to mitigate damage. Agentic AI can autonomously investigate alerts, escalate incidents, and initiate containment across systems, further shortening the time between detection and resolution.

Operational efficiency: AI can automate repetitive tasks like log analysis or triage, streamlining operations, reducing human error, and freeing security teams for more strategic efforts.

Proactive security posture: By analyzing data and responding to threats in real time, AI security enables organizations to adopt a proactive security approach, anticipating threats rather than just responding to them to better mitigate risk.

Threat prediction: AI models can identify patterns and predict potential threats before they emerge. Agentic AI can learn from threat incidents and adjust in near-real-time to self-evolve its defenses to emerging attack vectors.

Improved user experience: AI tools can improve security measures without impacting the user experiences. For example, context-aware authentication based on behavioral analytics reduces user friction while maintaining strong AI role-based access controls.

Regulatory automation: AI can automate compliance monitoring, data handling, and reporting. This frees security teams from tedious work to improve operations while meeting regulatory requirements for AI.

Scalability: AI can be scaled to protect large, complex environments without manual tuning. Agentic AI interoperates across hybrid and multi-cloud environments, coordinating defenses and adjusting dynamically to changing AI infrastructure without reconfiguration.

Leverage omnichannel AI for customer support

The key risks and vulnerabilities of AI security

Despite its potential benefits, AI also introduces a new set of challenges for security teams. Attackers can exploit AI models and systems, or use AI technology to accelerate and scale cyber attacks.

These major AI security risks include:

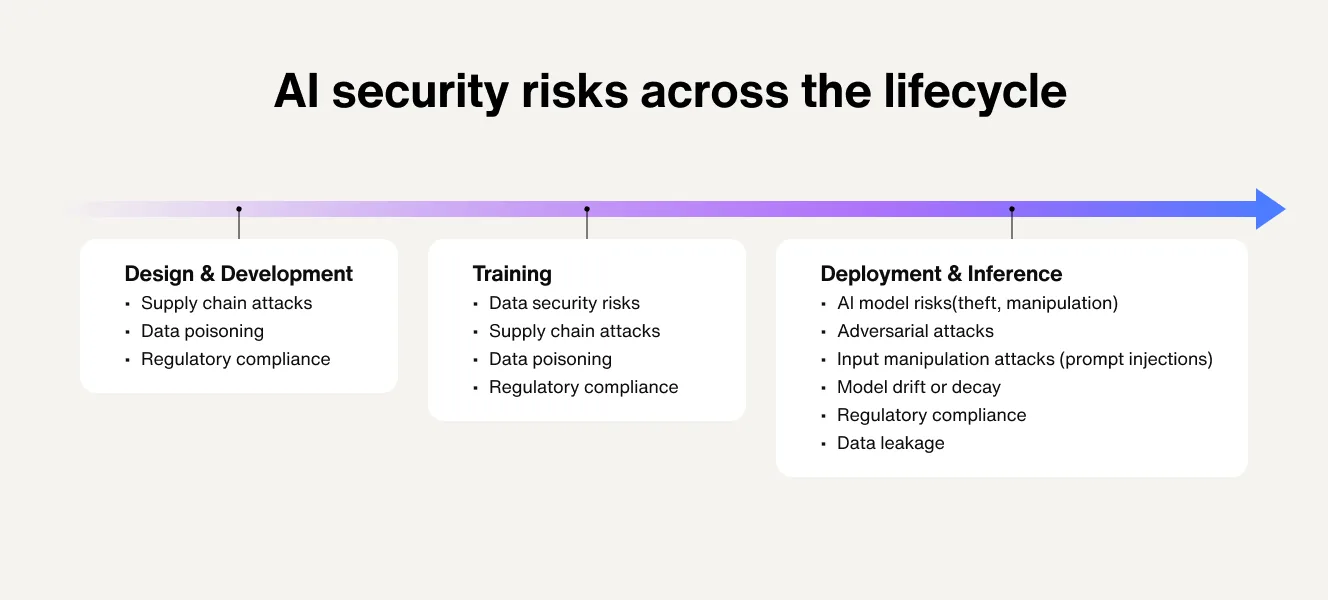

1. AI data security

AI systems depend on large datasets. If training data is poisoned, manipulated, or leaked, it can skew model behavior, expose sensitive information, and result in biased or non-compliant outputs.

Solution: Protect the integrity, confidentiality, and availability of data across the entire AI lifecycle.

2. AI model security threats

Models can be reverse-engineered, manipulated, or stolen. For example, attackers could tamper with the model's architecture, weights, or LLM parameters—the core components that determine the model’s behavior and performance.

Solution: Ensure model integrity using a multi-faceted approach that involves data quality assurance, bias mitigation, exposure management, and robust ongoing testing.

3. Adversarial attacks

Threat actors can manipulate AI’s input data, deceiving models into making incorrect predictions or classifications. For example, attackers could introduce adversarial examples to interference with AI models’ decision-making to produce biased responses.

Solution: Mitigate by training models with adversarial examples, validating inputs, monitoring for anomalies, and securing access to model endpoints.

4. Input manipulation attacks

Similarly, threat actors can manipulate AI’s input data to evade detection, bypass security measures, or influence decision-making processes—feeding it bad data to create biased, harmful, or inaccurate outputs.

Solution: Validate and sanitize inputs, apply anomaly detection, and use adversarial defenses to ensure model reliability.

5. Prompt injections

Malicious prompts can trick AI models and systems into taking harmful actions, like impelling a model to leak sensitive intellectual property or delete critical data.

Solution: Sanitize user inputs with strict input/output formatting, implement role-based access controls, and monitor model behavior for unexpected or unsafe outputs.

6. AI supply chain attack

AI systems are typically built from various third-party components, software libraries, and external modules, the vulnerabilities of which can be exploited by threat actors at every stage of developemnt—potentially leading to data breaches or unauthorized access.

Solution: Vet third-party components, enforce code provenance and integrity checks, and continuously monitor dependencies for vulnerabilities.

7. AI model drift and decay

Models degrade over time as training data ages, leading to slight changes in performance and effectiveness that make it difficult to detect exploitation attempts.

Solution: Monitor AI models for changes in performance, behavior, or accuracy to avoid hallucinations and maintain their reliability and relevance.

8. Ethical and safety failures

AI systems deployed without transparency, fairness checks, or governance can introduce bias, discrimination, or privacy violations.

Solution: Use secure-by-design principles and align with established AI governance policies that ensure fair, transparent, ethical, and accountable AI use across the lifecycle.

9. Regulatory noncompliance

The regulatory landscape around AI continues to evolve in patchwork fashion, requiring organizations to navigate a growing set of state, federal, and international laws and guidelines (e.g., EU AI Act, CCPA, GDPR)—or face significant penalties, security risks, and reputational damage.

Solution: Establish AI governance that aligns with the evolving regulatory picture and frameworks to avoid compliance risk.

AI-specific security threats exist before, during, and after development. More than just an engineering and IT problem, AI security is a mindset. This makes it crucial to raise awareness about AI-specific security threats among all teams and stakeholders to effectively secure AI systems.

Learn more: 15 AI risks to your business and how to address them

Leverage omnichannel AI for customer support

Use cases for AI security

AI can enhance cybersecurity efforts in various ways. This includes identifying, investigating, and responding to threats in real time while streamlining operations and enhancing security defenses at scale.

Data protection

Data protection helps to safeguard sensitive information from unauthorized access, loss, or corruption to ensure its availability and regulatory compliance.

AI can protect sensitive data across environments by automatically classifying data, identifying shadow data, monitoring data access and movement, and alerting cybersecurity professionals to threats.

Endpoint security

Endpoint security is the practice of safeguarding endpoints like computers, servers, and mobile devices.

AI can continuously monitor endpoints for suspicious activity and anomalies to identify and address threats in real time. Machine learning can also detect malware, ransomware, or behavioral anomalies on user devices—even in zero-day scenarios.

Cloud security

AI can strengthen the security of data in cloud environments. This involves the continuous monitoring of hybrid environments to detect shadow data, suspicious access patterns, and misconfigurations to combat cyber attacks and minimize exposure.

Advanced threat hunting

Threat hunting involves proactively searching for signs of malicious activity within an organization’s systems.

AI enhances manual threat hunting by constantly scanning vast data logs for patterns humans would miss—enabling faster, more reliable, and proactive defense against subtle patterns that may indicate compromised security.

Fraud detection

AI helps banks, fintech companies, and e-commerce platforms detect and prevent fraudulent transactions in real time.

By continuously analyzing transactional data and behavior against historical patterns, AI models can flag anomalies that signal fraud in real time. They can also adapt to new fraud tactics to help create more resilient systems.

Cybersecurity automation

By automating tasks like alert triage, correlation, and remediation, AI-driven security orchestration, automation, and response (SOAR) solutions can help to streamline incident handling. They can help teams respond faster to threats, reducing manual workloads while freeing teams to focus on high-level strategy.

Identity and access management (IAM)

Identify and access management (IAM) governs how users access digital resources and ensures access is only granted to authorized individuals.

AI improves IAM by analyzing user behavior, detecting unusual login activity, and enforcing adaptive authentication based on live context and past patterns. It also enhances role-based access management with fine-grained policy controls.

Phishing detection

AI can help to identify advanced AI-driven phishing emails and malicious links before they reach users.

Machine learning models can detect and block phishing attempts based on message content, metadata, and sender reputation, even improve over time. Agentic AI further enables models to self-improve over time to boost detection rates.

Vulnerability management

Vulnerability management involves discovering, prioritizing, and remediating software and system weaknesses.

AI can correlate known vulnerabilities (CVEs) with asset exposure and threat intelligence in real time, helping teams focus on the most exploitable risks. AI for security can also automate patch deployment, reducing time to resolution and lowering overall risk.

Leverage omnichannel AI for customer support

AI security best practices

According to a recent IBM study, 96% of business leaders believe that adopting generative AI makes a security breach more likely. However, only 24% have secured their current generative AI projects.

The following best practices can guide organizations on how to approach AI security in a way that effectively mitigates risk and regulatory non-compliance across the AI lifecycle.

Establish AI data governance

AI data governance is the process and practice that manages and oversees the use of data within an organization's AI systems. It involves ensuring that datasets are high quality, well-documented, and access-controlled to prevent data breaches or misuse.

Integrate AI with existing security tools

Integrating AI into security systems involves a multi-step process to assess current infrastructure and identify areas where AI can help. Embed AI into your SIEM, XDR, and IAM ecosystems to maximize insights while minimizing disruption.

Prioritize ethics and transparency

Maintaining transparency in AI processes by documenting algorithms and data sources can help to identify and mitigate potential biases and unfairness. Document model decisions, bias mitigation steps, and training sources to ensure stakeholder trust and regulatory compliance.

Apply security controls to AI

Treat AI tools and models like code: encrypt them, audit access, apply threat detection, and monitor for tampering.

Regular monitoring and evaluation

Continuously monitor AI models and systems for drift, bias, performance, and adversarial signals. Schedule red team simulations and model audits regularly to track performance, accuracy, and compliance to improve AI over time.

AI security frameworks and standards

As AI becomes more embedded in business operations, a set of comprehensive frameworks and standards have emerged to guide AI design, deployment, and governance.

These frameworks offer a structured approach to identifying vulnerabilities, mitigating risk, and ensuring regulatory compliance through the safe, ethical use of AI systems. They include:

NIST’s Artificial Intelligence Risk Management Framework: Breaks AI security into four primary functions: govern, map, measure, and manage. Also known as NIST AI RMF.

Google’s Secure AI Framework: Outlines a six-step process—from risk assessment to monitoring—to mitigate the challenges of AI at scale across organizations.

Mitre’s Sensible Regulatory Framework for AI Security and ATLAS Matrix: Catalogs attack tactics and proposes regulatory guidance for secure AI system development and deployment.

OWASP’s Top 10 Risks for LLMs: Identifies the top risks for large language models (LLMs), including prompt injections, data leakage, and AI supply chain vulnerabilities.

Automate customer service with AI agents

5 trends on AI security

AI-driven security tools are reshaping how organizations approach cybersecurity across industries. In the future, emerging AI technology will empower organizations to better combat cyberattacks, while efforts to secure AI systems from attack will become more established and effective.

The top trends on AI security in 2025 include:

1. Rise of agentic AI security: Omnichannel AI agents for security can continuously identify and respond dynamically to threats without human intervention, promising faster detection and remediation of threats across large-scale and dynamic environments.

2. Multi-AI agent security technology: Teams of utility-specific AI security agents can collaborate across environments, promising to further enhance enterprise cybersecurity.

3. AI-powered cyberattacks: Some say only AI can keep up with AI, as attackers increasingly use AI for sophisticated phishing, evasion techniques, and vulnerability exploitation.

4. Secure-by-design AI systems: To effectively mitigate AI cybersecurity risks and ensure AI compliance, there’s increasing emphasis on embedding security practices early in AI model development and lifecycle management.

5. Zero-trust architecture: Agentic AI-powered systems can dynamically assess risk and grant access based on context like user identity, device, and location, enhancing security and reducing attack surfaces.