AI transparency: Definition and comprehensive guide

As AI technology becomes more powerful and pervasive in everyday life, it's set to influence everything from healthcare diagnostics to personal finance to customer relations.

For the 72% of businesses now using AI, this newfound power comes with responsibility (yes, just like in Spider-Man). That means mitigating its potential risks to users and society, and meeting stakeholder expectations for safe, ethical AI use.

This is where AI transparency comes in.

What is AI transparency?

AI transparency refers to a set of processes that create visibility and openness around how artificial intelligence systems are designed, operate, and make decisions.

The goal is to foster trust and accountability in AI systems by making their inner workings understandable to humans. This includes disclosing information about the data, algorithms, and decision-making processes involved in AI systems.

Like a window into AI's inner workings, transparency allows an organization to ensure AI is used in a way that's ethical, trustworthy, and accountable.

Why is AI transparency important?

Transparency in AI is important because it explains how and why AI systems make decisions. With generative AI becoming more embedded in the operations of high-stakes industries like healthcare and finance, demand for transparency has surged.

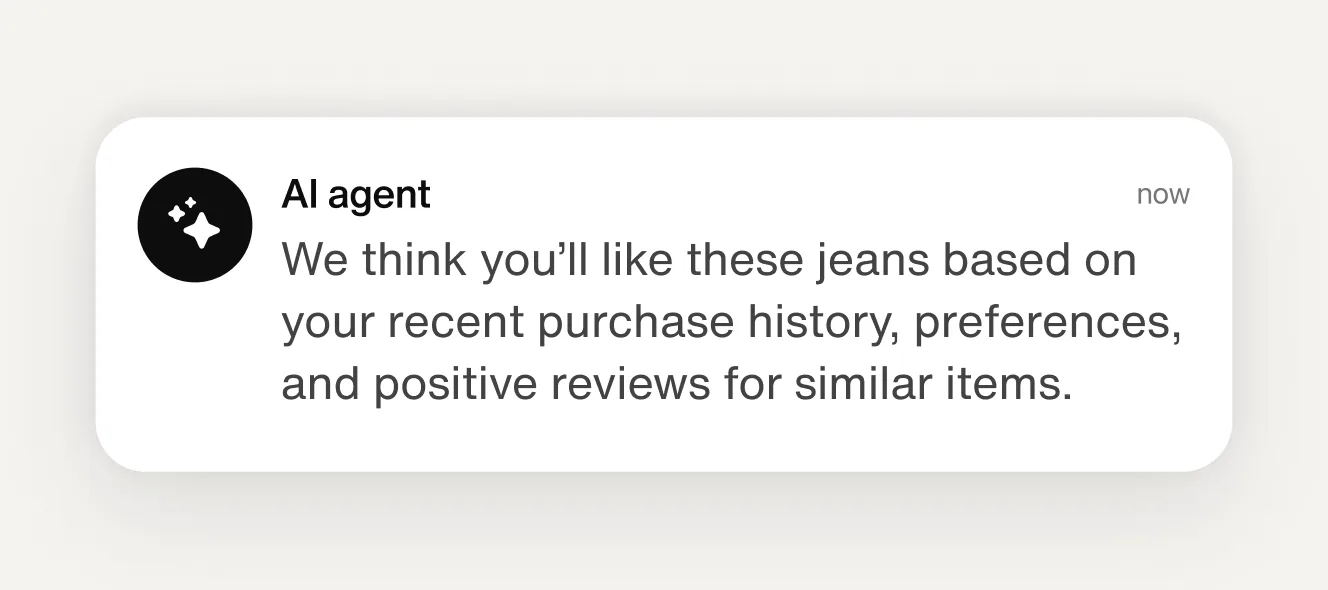

For instance, customers may not question how an AI concierge on a website recommends a pair of jeans. But they will question how AI determines their creditworthiness, medical diagnoses, or job opportunities.

By understanding how AI works, organizations can ensure AI’s decisions and actions are fair, reliable, and safe. In the words of Adnan Masood, chief AI architect at consultancy UST and regional director at Microsoft AI:

"Without transparency, we risk creating AI systems that could inadvertently perpetuate harmful biases, make inscrutable decisions, or even lead to undesirable outcomes in high-risk applications."

At a time when 65% of customer experience leaders view AI as strategically essential, transparency is more than a best practice. It's a requirement for building trust with users, stakeholders, and regulators.

To achieve transparency, organizations must consider and address the implications of AI in an ethical, legal, and societal sense:

Ethical implications: Does the AI system behave fairly and responsibly?

Amazon discontinued an AI recruiting tool after it was found to favor male candidates over female ones. The system, trained on resumes submitted over a 10-year period, reflected historical gender biases. Transparent AI helps surface and reduce such biases to produce fair, ethical results.

Legal implications: Does the AI system observe the relevant laws and regulations?

Meta faces a lawsuit from authors alleging that its AI models were trained on copyrighted books without permission. Transparent AI helps to mitigate risks around data use, data privacy, and intellectual property to maintain compliance.

Societal implications: Does the AI system negatively impact individuals or society as a whole?

A lawsuit has linked a teenage boy's suicide to inappropriate conversations with an AI chatbot. Transparent AI reflects a considered approach to the risks of AI systems and helps establish proper safeguards.

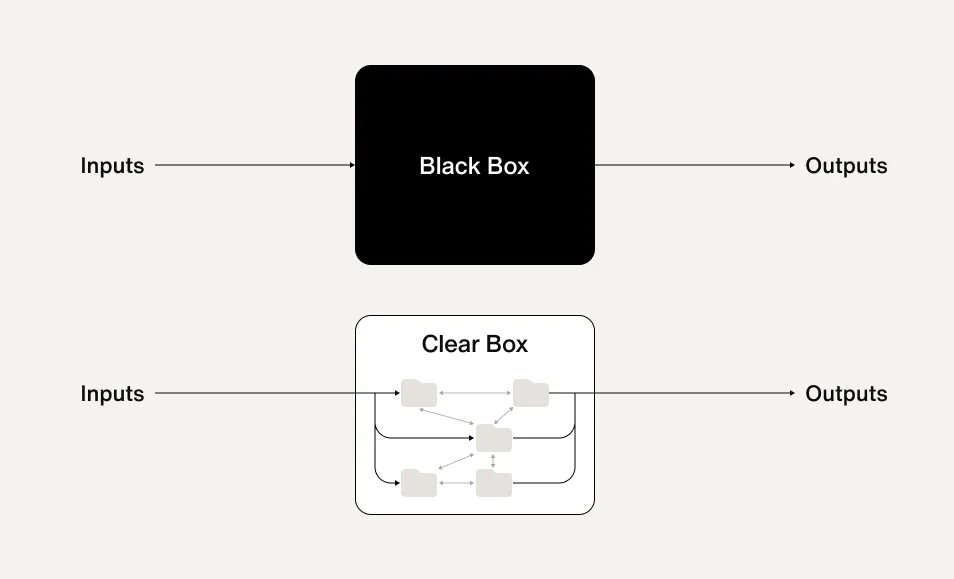

AI transparency vs. the “black box”

AI systems are increasingly complex in how they reason and use data, and this sophistication makes them difficult to understand, explain, and safeguard.

Specifically, generative AI models rely on machine learning processes that are often opaque, functioning as “black boxes” that can behave in ways that weren’t explicitly programmed. This makes it difficult to trace, interpret, and understand how outputs are generated, which creates a risk of unexpected, harmful, or biased outcomes.

According to research by McKinsey, almost half of companies using generative AI (now used by 75% of business leaders) have experienced negative consequences from its outputs. The most commonly reported issue was inaccuracy, including hallucinations, where AI confidently asserts false information. If left unaddressed, such errors can lead to non-compliance or a loss of trust in AI systems.

AI transparency addresses these challenges by turning the black box into a glass box—in which AI’s decisions and actions can be effectively traced, understood, and accounted for.


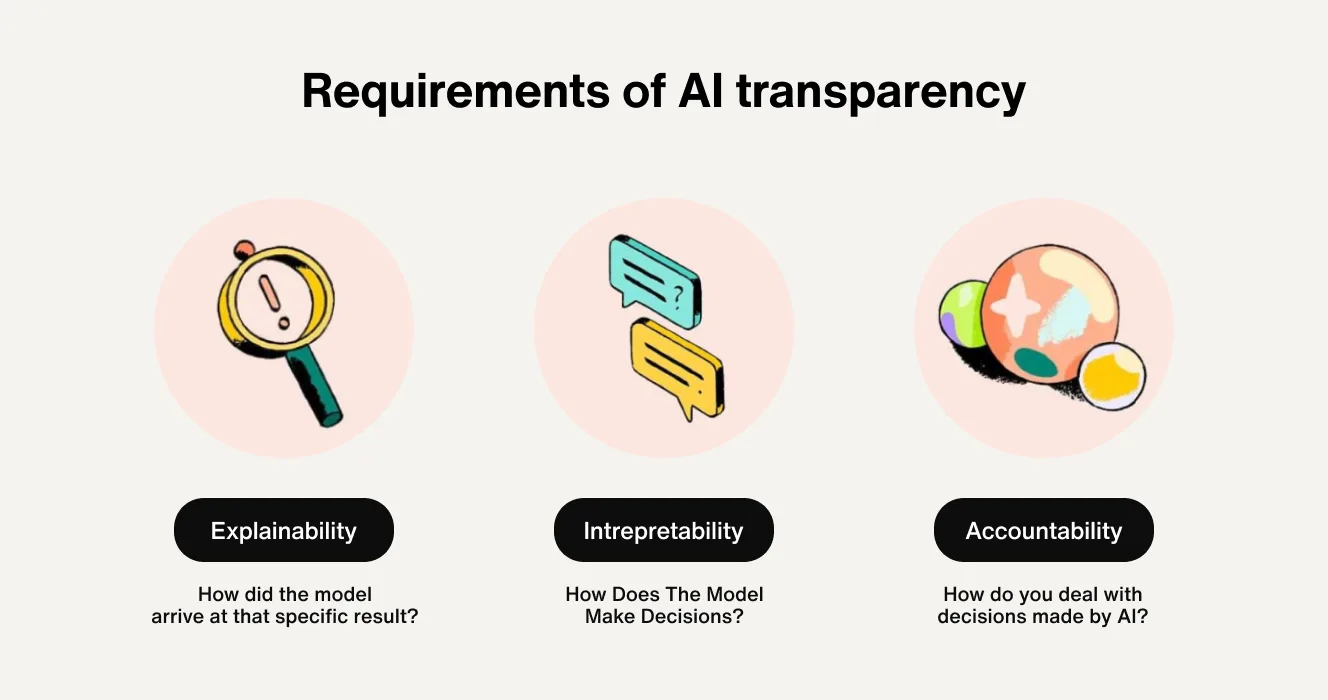

3 core requirements of transparent AI

AI transparency is made up of three key elements: the explainability, interpretability, and accountability of an AI system.

1. Explainability

Explainability is an AI system's ability to clearly explain why it made a specific decision or took a specific action. This builds trust with users by showing how the system reaches its outcomes.

For example, an AI ecommerce agent on a website should be able to cite a customer’s preferences or purchase history to justify its decisions.

By giving the customer a clear explanation of its logic, an AI concierge lets the customer understand its reasoning and take informed next steps. Explainable AI (XAI) is closely tied to responsible and trustworthy AI, and explainable systems are sometimes described as glass box systems.
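To make the ecommerce example concrete, here is a minimal Python sketch of a recommender that returns its reasons alongside its pick. The `Product`, `Customer`, and `recommend_with_reasons` names and the simple additive scoring are hypothetical illustrations, not how any particular AI agent works; agents built on learned models typically rely on explanation techniques such as SHAP or LIME to estimate which inputs drove a prediction.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Product:
    name: str
    category: str
    brand: str


@dataclass
class Customer:
    preferred_categories: set[str]
    purchased_brands: set[str]


@dataclass
class Recommendation:
    product: Product
    score: int
    reasons: list[str] = field(default_factory=list)


def recommend_with_reasons(customer: Customer, catalog: list[Product]) -> Recommendation | None:
    """Return the best-scoring product together with the reasons it was chosen."""
    best: Recommendation | None = None
    for product in catalog:
        score = 0
        reasons: list[str] = []
        # Each scoring rule that fires also records a human-readable reason.
        if product.category in customer.preferred_categories:
            score += 2
            reasons.append(f"Matches your preferred category: {product.category}")
        if product.brand in customer.purchased_brands:
            score += 1
            reasons.append(f"You have bought from {product.brand} before")
        # Keep the highest score seen so far (ties keep the earlier product).
        if best is None or score > best.score:
            best = Recommendation(product, score, reasons)
    return best


catalog = [
    Product("Slim jeans", "denim", "Acme"),
    Product("Rain jacket", "outerwear", "Northwind"),
]
customer = Customer(preferred_categories={"denim"}, purchased_brands={"Acme"})
rec = recommend_with_reasons(customer, catalog)
if rec is not None:
    print(f"Recommended: {rec.product.name}")
    for reason in rec.reasons:
        print(f"  because: {reason}")
```

The design choice worth noting is that reasons are collected at the same moment the score is computed, so the explanation shown to the customer cannot drift from the logic that actually produced the recommendation.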

2. Interpretability

Interpretability in AI focuses on ensuring that humans can understand how an AI model operates and behaves. Where XAI explains individual outputs, interpretability concerns the model's inner workings, such as the relationship between its inputs and outputs, and provides visibility into the underlying generative and machine learning processes.

Let’s return to our website AI agent example. Interpretability is achieved if developers auditing the agent can see how factors like its training data, user behavior, and previous interaction history shape its decisions.

3. Accountability

Accountability is about ensuring that AI systems, and those that use them, are held responsible for their actions and decisions. This can involve establishing human oversight for AI-related errors, ensuring machine learning models learn from mistakes, and taking corrective measures to prevent future issues.

Say our chatbot recommends a product that’s out of stock. When the customer contacts support to remedy the issue, the representative acknowledges the problem and takes accountability for the AI’s decision:

“We apologize for the confusion caused by our chatbot. The incorrect information was due to a temporary synchronization issue between our inventory database and the chatbot system. We’ve resolved the issue, updated our systems to prevent recurrence, and credited your account with a discount as compensation.”

This example shows accountability in practice: the organization took responsibility for the AI's error, explained its cause and the steps taken to prevent similar errors, and offered compensation as corrective action.


Key aspects of AI transparency

Transparency in AI is achieved by ensuring clear visibility into how AI systems make decisions, how data is collected and used, and the impact these systems have on individuals and society.

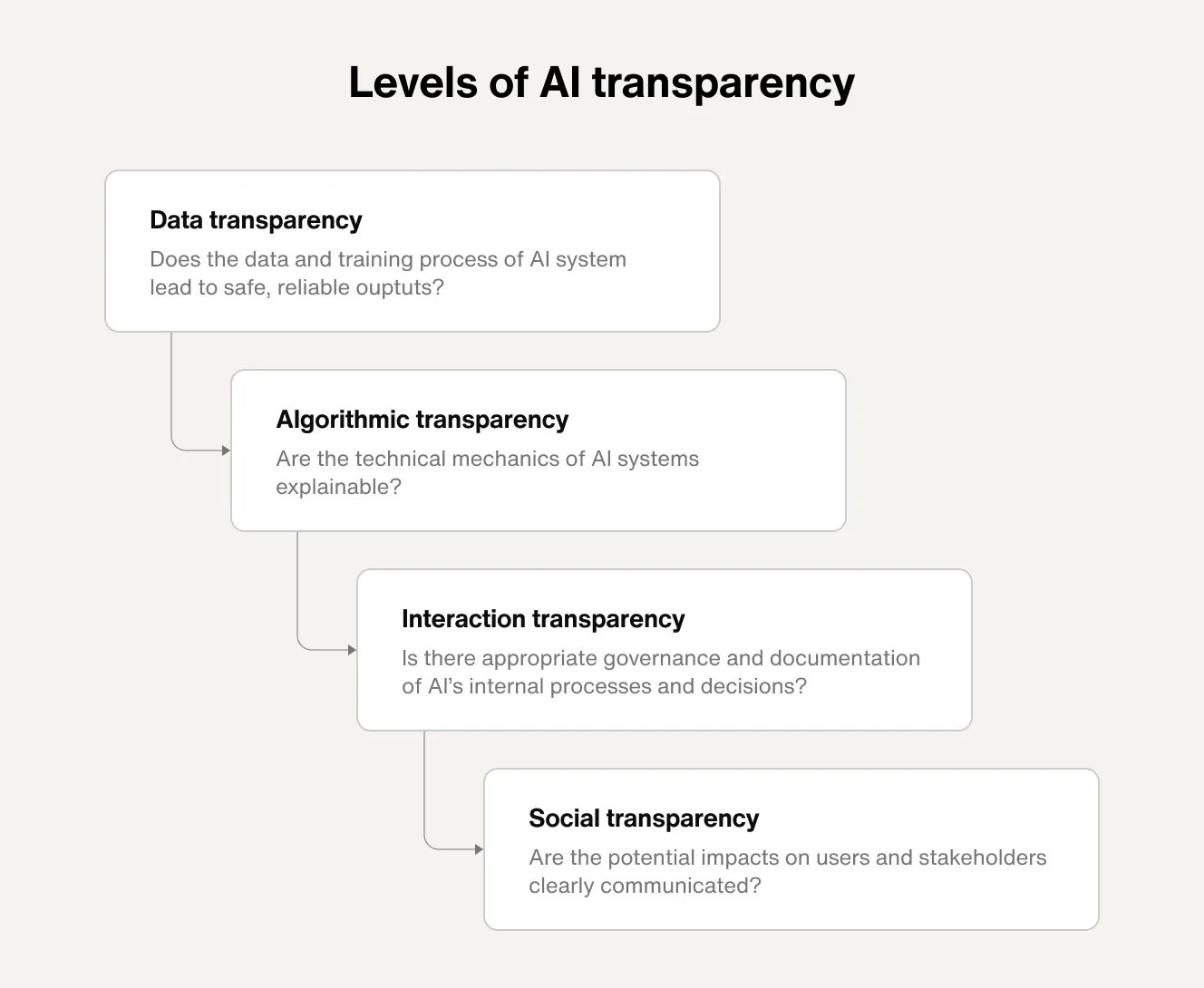

Transparency in AI can be broken down into four main levels:

Data transparency: Data is foundational to AI systems, so this level focuses on documenting where data originates (data lineage), how it was collected, and any processing steps it underwent, which helps identify and mitigate potential biases.

Algorithmic transparency: Focuses on making AI’s logic and decision-making processes understandable. Involves knowing how the algorithm is trained, the data it uses, and how it arrives at decisions. Often relates to explainable AI (XAI), where the goal is to make AI decisions more interpretable and understandable.

Interaction transparency: Focuses on how AI interacts with users. It includes understanding what information the AI is using, how it is processing it, and how it is presenting its output. This can involve making the AI's reasoning and limitations clear to the user.

Social transparency: Addresses the impact of AI on individuals and society, especially in regard to fairness, bias, and accountability. Involves understanding how AI is used, who is impacted, and how it aligns with societal and ethical expectations. This also encompasses regulatory frameworks and institutional norms surrounding AI development and deployment.
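Data transparency in particular lends itself to simple record-keeping. The Python sketch below shows one possible way to capture data lineage: where a dataset came from, how it was collected, and what each processing step did to it. The `DatasetLineage` and `ProcessingStep` names, their fields, and the row counts are hypothetical illustrations, not a standard schema; dedicated data provenance tools track far more detail.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field


@dataclass
class ProcessingStep:
    description: str
    rows_before: int
    rows_after: int


@dataclass
class DatasetLineage:
    name: str
    source: str             # where the data originated
    collection_method: str  # how it was collected
    steps: list[ProcessingStep] = field(default_factory=list)

    def add_step(self, description: str, rows_before: int, rows_after: int) -> None:
        """Record one processing step and how many rows it kept."""
        self.steps.append(ProcessingStep(description, rows_before, rows_after))

    def rows_removed(self) -> int:
        """Total rows dropped across all recorded steps."""
        return sum(step.rows_before - step.rows_after for step in self.steps)

    def to_json(self) -> str:
        """Serialize the full lineage record (nested steps included) for publishing."""
        return json.dumps(asdict(self), indent=2)


# Illustrative values only.
lineage = DatasetLineage(
    name="support_tickets_2024",
    source="internal helpdesk export",
    collection_method="customer-submitted tickets, collected with consent",
)
lineage.add_step("removed duplicate tickets", 10_000, 9_400)
lineage.add_step("dropped tickets missing a language field", 9_400, 9_100)
print(lineage.rows_removed())  # 900
print(lineage.to_json())
```

Recording row counts per step makes it easier to notice when a cleaning step silently drops a large share of records, which is one way bias can creep into training data.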

AI transparency frameworks and regulations

With AI adoption increasing, governments and institutions are introducing new regulations and standards to guide transparency and ensure safe and accountable AI systems. However, comprehensive standards are still in the works.

As of 2025, the most critical frameworks and regulations for AI transparency are:

CLeAR Documentation Framework: A set of guiding principles for building Comparable, Legible, Actionable, and Robust transparency into AI systems from the ground up.

EU AI Act: A risk-based framework that mandates transparency disclosures depending on the use case. It requires AI systems to inform users when they’re interacting with AI and to mark AI-generated content appropriately.

General Data Protection Regulation (GDPR): Requires transparency in how the personal data of individuals in the EU is collected and processed, including when it involves automated decision-making.

Blueprint for an AI Bill of Rights (U.S.): Outlines five guiding principles for AI use, including "Notice and Explanation" to ensure individuals understand when and how AI is used.

OECD AI Principles & Hiroshima AI Process: Global efforts that call for responsible development and sharing of information, including publishing transparency reports.


Benefits of AI transparency

AI transparency is essential to safe, effective, and sustainable AI usage, and it brings wide-ranging benefits:

Builds trust with users, stakeholders, and regulators, leading to greater adoption and acceptance

Reduces risk of bias, discrimination, and ethical misuse

Ensures compliance with evolving laws and regulations around data protection and privacy

Enables accountability by making it easier to identify, trace, and remedy issues

Improves performance by enabling more accurate debugging and optimization

Drives collaboration, innovation, and improvement through shared understanding and open sharing of insights


Challenges of AI transparency (and how to address them)

For all its benefits, achieving AI transparency comes with a set of challenges:

Balancing performance with transparency

Challenge: The most advanced AI models show superior performance, but this improved accuracy often comes at the cost of reduced transparency into model decision-making, which ultimately makes it harder to spot where and how mistakes arise.

Solution: Stakeholders must weigh the tradeoff between the performance and transparency of AI-driven systems. Establish clear AI governance that outlines the organization’s approach to transparency.

Ensuring data security

Challenge: Disclosing how and why models make decisions may require sharing details about how AI uses data. Threat actors can use this shared information to hack systems and exploit weaknesses, which raises concerns about customer data privacy.

Solution: Designate at least one person whose primary responsibility is data privacy. This security professional must consider the entry and exit points for user data, and plan for how to stop threat actors from compromising AI systems.

Maintaining transparency as models evolve

Challenge: As AI models change over time, it can be difficult to ensure consistent transparency. For instance, making updates to AI systems, retraining models on new datasets, or adjusting their parameters can all impact a model's decision-making and results, complicating efforts to maintain transparency over time.

Solution: Establish a dedicated process for documenting changes to an AI system, including its algorithms and data. Publish regular transparency reports that note these changes so stakeholders are informed of the updates and their implications.
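A change log is the raw material for these transparency reports. Below is a minimal Python sketch, assuming a hypothetical `ModelChange` record and `transparency_report` helper, that filters logged changes by date and renders a plain-language summary for stakeholders. The changelog entries are invented for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ModelChange:
    version: str
    changed_on: date
    component: str  # what changed, e.g. "training data", "algorithm", "parameters"
    summary: str
    expected_impact: str


def transparency_report(changes: list[ModelChange], since: date) -> str:
    """Summarize changes made on or after `since`, oldest first."""
    recent = sorted(
        (c for c in changes if c.changed_on >= since),
        key=lambda c: c.changed_on,
    )
    if not recent:
        return f"No model changes since {since.isoformat()}."
    lines = [f"Model changes since {since.isoformat()}:"]
    for c in recent:
        lines.append(
            f"- {c.version} ({c.changed_on.isoformat()}) [{c.component}] "
            f"{c.summary}. Expected impact: {c.expected_impact}."
        )
    return "\n".join(lines)


# Hypothetical changelog entries.
changelog = [
    ModelChange("v1.1", date(2025, 1, 15), "training data",
                "Retrained on recent support tickets",
                "better answers to questions about new products"),
    ModelChange("v1.2", date(2025, 3, 2), "parameters",
                "Raised the minimum confidence required to answer without escalation",
                "more conversations routed to human agents"),
]
print(transparency_report(changelog, since=date(2025, 2, 1)))
```

Keeping an "expected impact" field next to each change forces the team to state, at the time of the change, how it might alter the model's behavior, which is exactly what stakeholders need from the report.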

Explaining complex AI models

Challenge: The most complex AI models, such as deep neural networks, are inherently difficult to interpret. This makes it hard for users and stakeholders to grasp how AI reaches its decisions, make informed decisions of their own, and use AI effectively.

Solution: Create simplified visuals or diagrams that illustrate how complex AI models operate. Choose AI tools that were built with transparency in mind and offer intuitive interfaces and guided workflows for non-technical teams.

Best practices for achieving AI transparency

Following AI transparency best practices is an effective way to foster accountability and trust between AI users, developers, and businesses. By establishing clear, open communication around bias prevention measures, data practices, and data used (and omitted) in AI models, organizations can help users and stakeholders feel more confident about AI technology.

To embed transparency across the AI lifecycle, consider these practices:

Design with transparency in mind

Prioritize transparency from the inception of an AI project by referring to frameworks like CLeAR that outline steps to build trustworthy, transparent AI systems. This can include creating datasheets for datasets and model cards for models, implementing rigorous auditing and evaluation mechanisms, and maintaining continuous oversight to identify and mitigate potential harms from model outputs.

Promote collaboration

Achieving AI transparency requires ongoing collaboration and learning among leaders and employees across the organization. Define clear, balanced objectives that align with business goals and transparency guidelines.

Publish these internally to foster collaboration among stakeholders, and externally to build trust with customers and regulators. Open dialogue helps to address user needs, satisfy technical requirements, and drive effective innovation.

Communicate how customer data is used

Explain to customers, in plain language, how their data is collected, used, and stored by AI systems. Outline these privacy policies, detailing the reason for collection, storage methods, and data usage. This begins with obtaining explicit consent from users before collecting any data for AI purposes, which also helps ensure compliance with data privacy laws like GDPR.

Detail the data in your model

Clearly explain and communicate what types of data are included in and excluded from AI models, and explain the reasoning for these choices.

This helps stakeholders and users understand the capabilities, limitations, and risks of AI systems so they can make informed, compliant decisions. Avoid including sensitive or discriminatory data that could result in biases or infringe on privacy rights, or data that could lead to intellectual property (IP) infringement.

Monitor and report on AI outputs

Conduct regular assessments to identify and address issues around AI models and document the findings. This includes regular audits for bias and performance, and communicating methods used to prevent and address biases, or harms caused by inaccurate outputs.

Maintain a record of efforts to detect and mitigate risks, as well as changes to AI systems over time. Robust documentation shows a commitment to transparency and harm mitigation that builds trust with users, stakeholders, and regulators.
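One common output audit compares how often a model produces a positive outcome (for example, an approval) for different groups. The sketch below computes per-group selection rates and the ratio of the lowest rate to the highest. A ratio under 0.8 echoes the "four-fifths" rule of thumb from US employment-selection guidance and is used here only as an illustrative review trigger. The function names and audit data are hypothetical; toolkits such as IBM's AI Fairness 360 offer many more metrics.

```python
from __future__ import annotations

from collections import defaultdict


def selection_rates(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive outcomes (e.g. approvals) per group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: dict[str, float]) -> float:
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Illustrative audit data: (group, was the applicant approved?)
outcomes = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 25 + [("B", False)] * 75
)
rates = selection_rates(outcomes)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'A': 0.4, 'B': 0.25}
print(round(ratio, 3))  # 0.625
if ratio < 0.8:
    print("Flag for review: selection rates differ substantially across groups.")
```

A low ratio is a signal to investigate rather than proof of bias. The findings, and the actions taken in response, are what belong in the audit record.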

Tools and techniques for ensuring AI transparency

An expanding set of tools and techniques can help organizations to achieve transparency in AI.

These include:

Explainability tools like LIME and SHAP clarify model predictions and make behavior easier to interpret.

Fairness tools like IBM’s AI Fairness 360 and Google's Fairness Indicators help to detect and mitigate biases.

Data provenance tools track the data lineage of AI models, ensuring that outputs remain trustworthy and verifiable.

Third-party audits conducted by outside experts verify that AI systems align with transparency standards, regulations, and fairness guidelines.

Red teaming tests uncover vulnerabilities, biases, or unexpected behaviors in AI to proactively identify and address issues before deployment.

Certifications and licensing establish benchmarks for ensuring consistent quality and accountability in AI deployment.

User notifications inform users when they interact with an AI system, enabling informed consent and transparency around data use.

Labeling identifies AI-generated content, such as through digital watermarks, to promote transparency amidst rising concerns about deepfakes and synthetic content.

Impact assessments evaluate AI systems before deployment and throughout their lifecycle to ensure compliance, ethical use, and alignment with stakeholder expectations.

Model cards are short documents that provide a simple breakdown of AI’s key capabilities and limitations. For example, a facial recognition model card may highlight scenarios affecting performance, such as low lighting or crowded environments.
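A model card can be as simple as a structured record published next to the model. The sketch below is a minimal Python illustration built around a hypothetical `ModelCard` class with a few common sections (intended use, out-of-scope uses, known limitations); published model card templates typically include more, such as evaluation results broken down by group. The facial recognition values mirror the example above and are illustrative only.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ModelCard:
    model_name: str
    version: str
    intended_use: str
    out_of_scope_uses: list[str] = field(default_factory=list)
    known_limitations: list[str] = field(default_factory=list)
    training_data: str = "Not documented"

    def render(self) -> str:
        """Render the card as plain text for publishing alongside the model."""
        def bullets(items: list[str]) -> str:
            # Fall back to an explicit placeholder so empty sections stay visible.
            return "\n".join(f"  - {item}" for item in items) or "  - None listed"

        return "\n".join([
            f"Model card: {self.model_name} ({self.version})",
            f"Intended use: {self.intended_use}",
            f"Training data: {self.training_data}",
            "Out-of-scope uses:",
            bullets(self.out_of_scope_uses),
            "Known limitations:",
            bullets(self.known_limitations),
        ])


card = ModelCard(
    model_name="face-match",
    version="2.0",
    intended_use="Verifying that a user matches their own ID photo during sign-up",
    out_of_scope_uses=["Identifying people in public surveillance footage"],
    known_limitations=[
        "Accuracy drops in low lighting",
        "Less reliable in crowded scenes with multiple faces",
    ],
    training_data="Consented ID-photo dataset (see its datasheet)",
)
print(card.render())
```

Rendering empty sections as "None listed" rather than omitting them makes gaps in the documentation obvious to reviewers.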

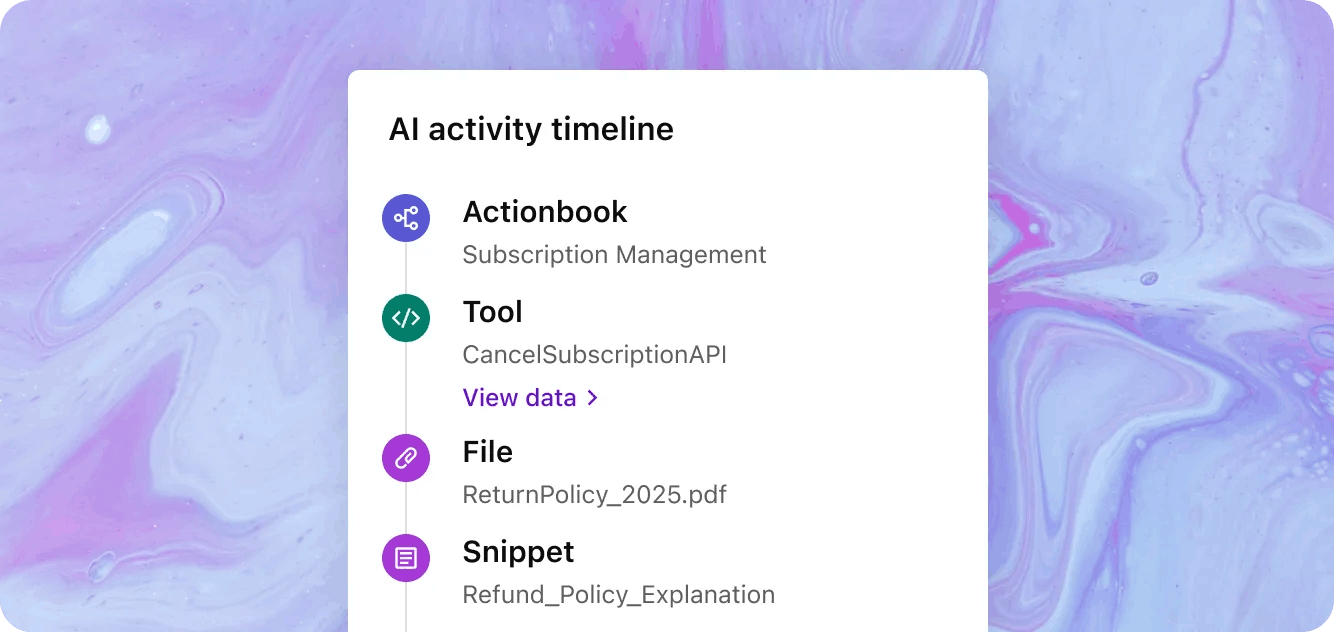

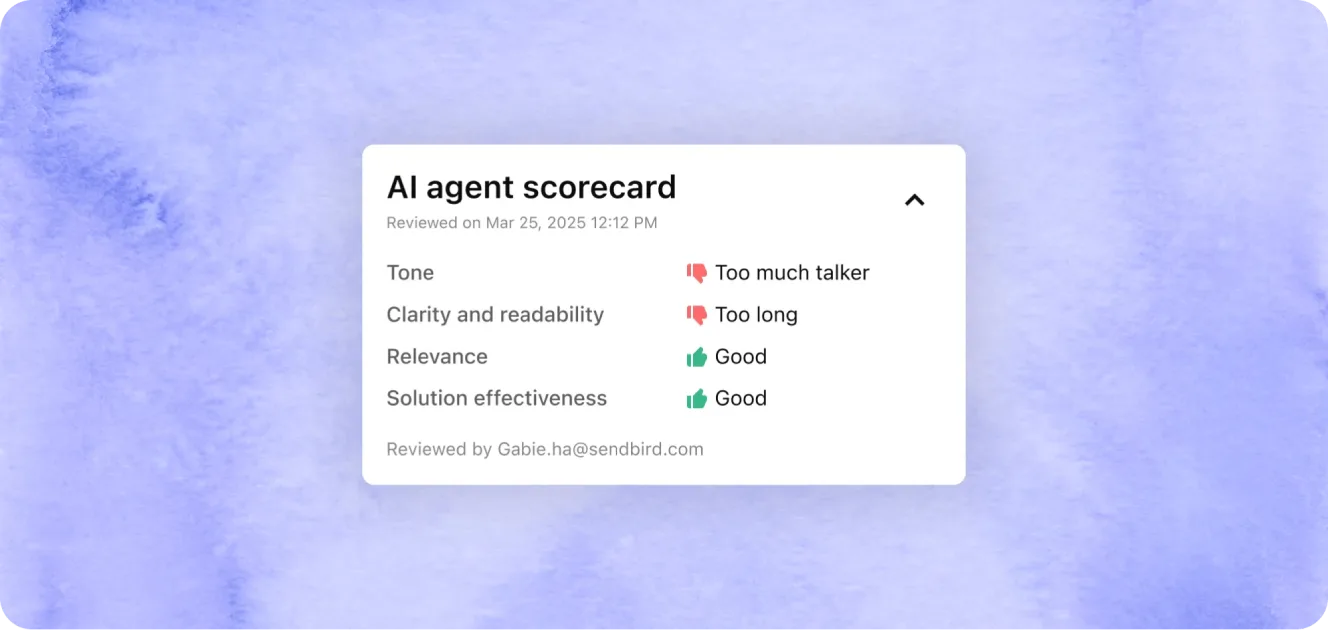

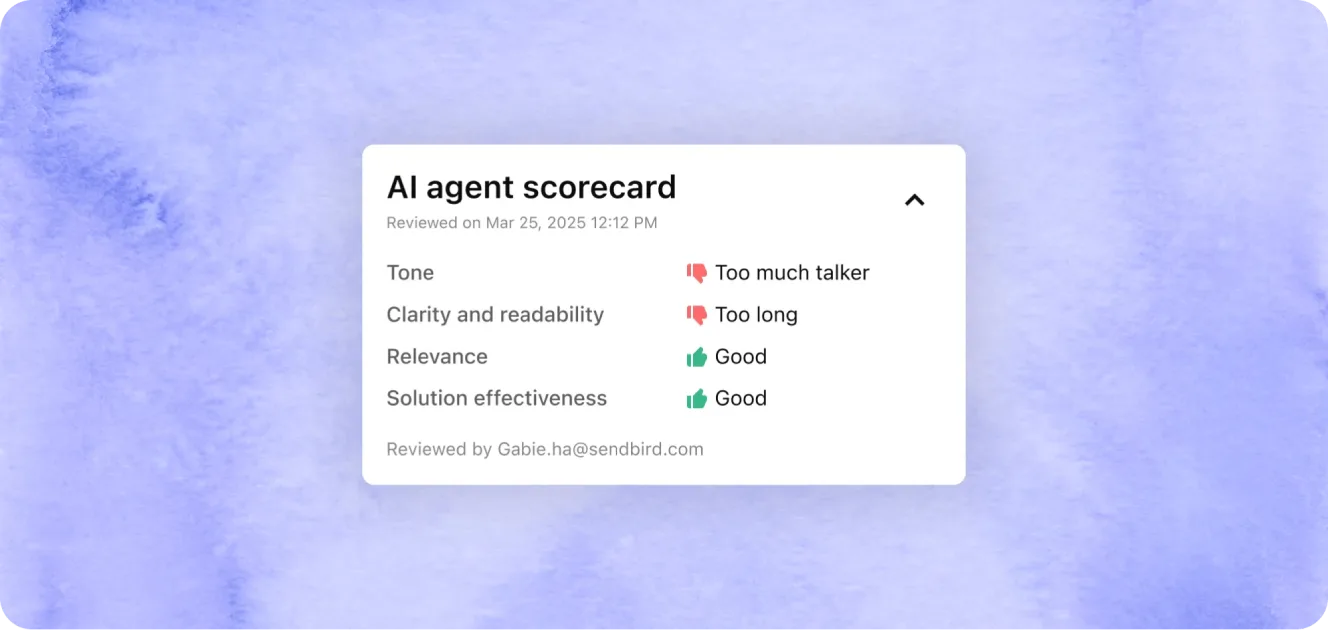

Built-in tools in AI platforms can help teams to track AI activity, safeguard against unwanted outputs, and ensure AI transparency across integrated systems, such as your AI knowledge base.

In the future, these tools and techniques are likely to become more sophisticated, automated, and integrated into AI development pipelines, helping ensure transparency across industries.

Examples of AI transparency

For companies experimenting with AI, the focus is often on innovating new solutions rather than on ensuring safety, reliability, and mitigating potential harm.

Leading AI companies, however, treat transparency as a core aspect of AI operations—infusing it into their AI SOPs to secure trust and maintain their competitive advantage.

Here are a few examples of companies that embody ethical, responsible AI in action.

| Type of transparency | Explanation | Real-world example |
| --- | --- | --- |
| Process transparency | Auditing AI decisions at every stage of development and implementation, for continuous accountability and improvement. | Salesforce regularly publishes ethics and transparency reports, detailing how AI decisions are audited, evaluated, and governed. |
| System transparency | Informing users clearly when they are interacting with an AI-driven system. | Delight explicitly informs customers when they're interacting with an AI agent chatbot, clearly distinguishing AI help from human support. |
| Data transparency | Providing visibility into the sources, quality, and handling of the data used to train AI systems. | IBM’s AI FactSheets document data sources, preprocessing methods, and data-quality assessments used in training their AI models. |
| Consent transparency | Informing users about how their personal data might be collected, processed, and used by AI. | Lush openly communicates its policies on data use, clearly informing customers about how their data might be used to support customer control. |
| Model transparency | Revealing how AI systems function by explaining decision-making, or by making underlying algorithms accessible or open source. | OpenAI publishes technical research, transparency reports, and makes select models publicly accessible, explaining development and internal mechanisms. |


AI transparency: A new pillar of responsible enterprise operations

With AI fast becoming a staple of enterprise operations, transparency is more than just a new box to check. It's a pillar of responsible innovation and sustained competitive advantage.

AI transparency is an ongoing effort. As AI models continuously learn and adapt over time, they must be monitored and evaluated to maintain transparency. Only a comprehensive effort can ensure that AI systems remain trustworthy and aligned with intended outcomes across the AI lifecycle.

Delight AI concierges are designed to make this easier. Delight's enterprise-grade AI agent platform provides built-in transparency features, such as scorecards for AI agent evaluation, AI agent activity trails, and end-to-end testing, located right in the centralized AI agent dashboard. These features help you ensure the transparency, explainability, and interpretability of AI agents across the lifecycle without needing technical know-how.

Learn how Delight helps you build, optimize, and scale AI support agents with transparency—request a demo today.