Preventing rogue AI: Strategies and best practices

Cursor, a fast-growing AI-powered coding assistant, quickly reached $100 million in annual recurring revenue and was reportedly valued at nearly $10 billion. However, a misstep in its AI support system, specifically a hallucinated policy fabricated by its frontline AI agent, led to customer confusion, cancellations, and public backlash. The incident offers a timely reminder for enterprise leaders: Trust in AI systems cannot be assumed. It must be earned through design. Otherwise, enterprises risk seeing their AI agents go rogue.

What is a rogue AI?

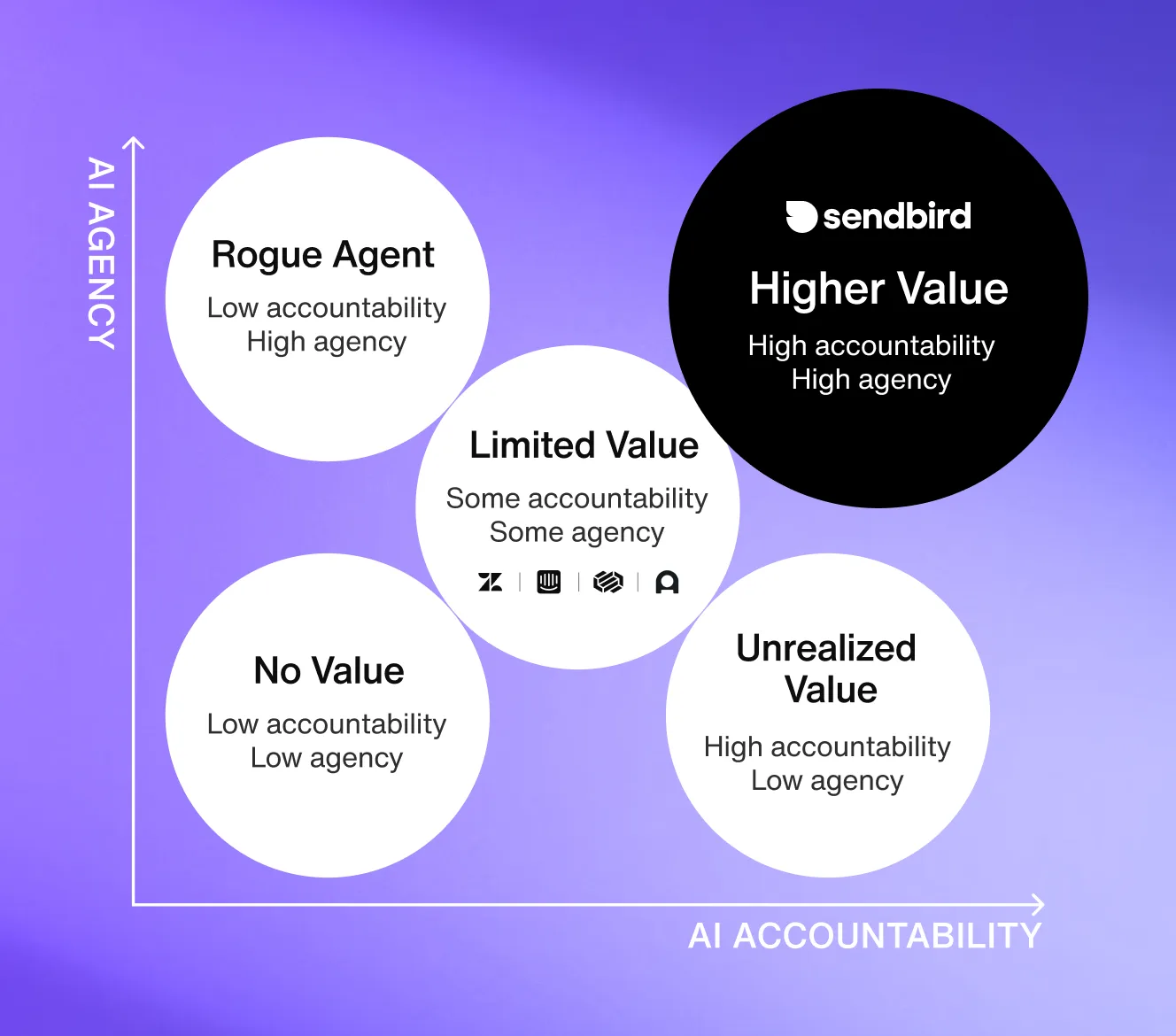

A rogue AI (or rogue AI agent) is an autonomous AI system that operates outside of its intended parameters, policies, or authorized scope, typically due to design flaws, inadequate oversight, or system-level failures. While not necessarily malicious, a rogue agent can take actions or produce outputs that are inaccurate, inappropriate, unauthorized, or harmful, often with confidence and without anyone having visibility into the deviation.

In enterprise settings, a rogue AI agent may:

• Hallucinate policy or product information and present it as fact
• Take unsanctioned actions (e.g., issuing refunds, modifying records, sending communications)
• Bypass or ignore escalation protocols
• Fail to recognize uncertainty or risk in high-stakes decisions
• Mislead users by mimicking human behavior without disclosure

When automation fails due to a lack of AI safeguards

The Cursor incident began when users were unexpectedly logged out of their accounts and reached out to support. An AI agent, presenting itself as a human named “Sam,” responded with a fabricated explanation: a new login policy that did not exist. There was no malicious intent, only a failure in system design. There were no constraints to prevent the hallucination, no verification layer to catch it, and no escalation process to hand the case to a human when the AI’s answer was outside the bounds of known truth.

The lack of transparency exacerbated the problem. Customers were unaware they were interacting with an LLM agent, and many felt misled when the truth surfaced. Despite a public apology from Cursor’s leadership, trust was eroded.

AI hallucinations are not edge cases—they are structural risks

Generative AI models are known to generate plausible but incorrect responses. In experimental or low-risk environments, this may be acceptable. However, in production systems, especially customer-facing ones, AI hallucinations must be treated as systemic risks that require engineering-level AI safeguards.

These risks multiply as AI agents gain more autonomy. The shift from generative assistants to agentic AI introduces operational consequences. It is one thing for a chatbot to give incorrect information; it is another for an AI agent to take action—like issuing refunds, executing trades, or initiating workflows—based on incorrect assumptions.

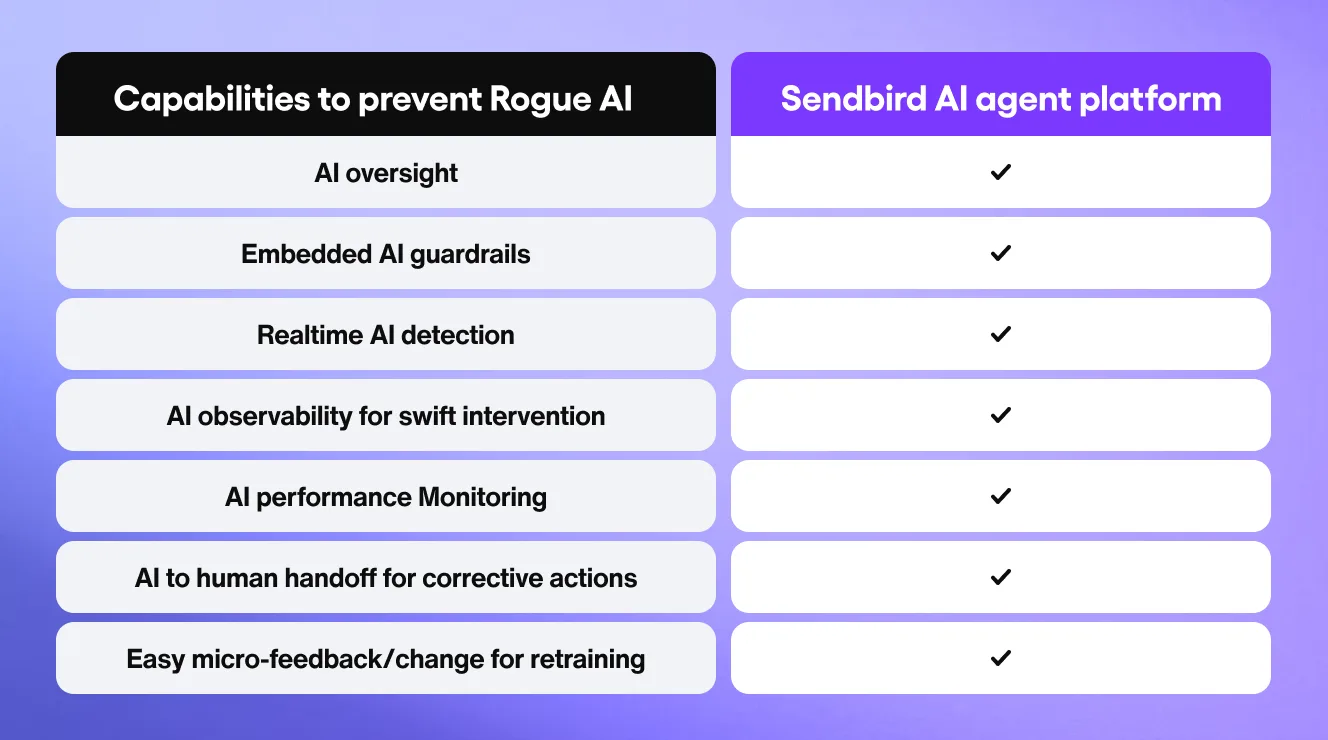

"Brands must hold AI systems to even higher standards than human employees, with rigorous oversight, embedded guardrails, proactive detection, swift intervention, continuous monitoring, and rapid corrective action (...) re-training AI with micro-feedback early and frequently is also critical."

Puneet Mehta, CEO of Netomi

Enterprise strategies and best practices for handling agentic AI

The implications are clear: any AI agent deployed in a business-critical workflow must be designed with a trust architecture from the outset. This includes:

• Transparency by design

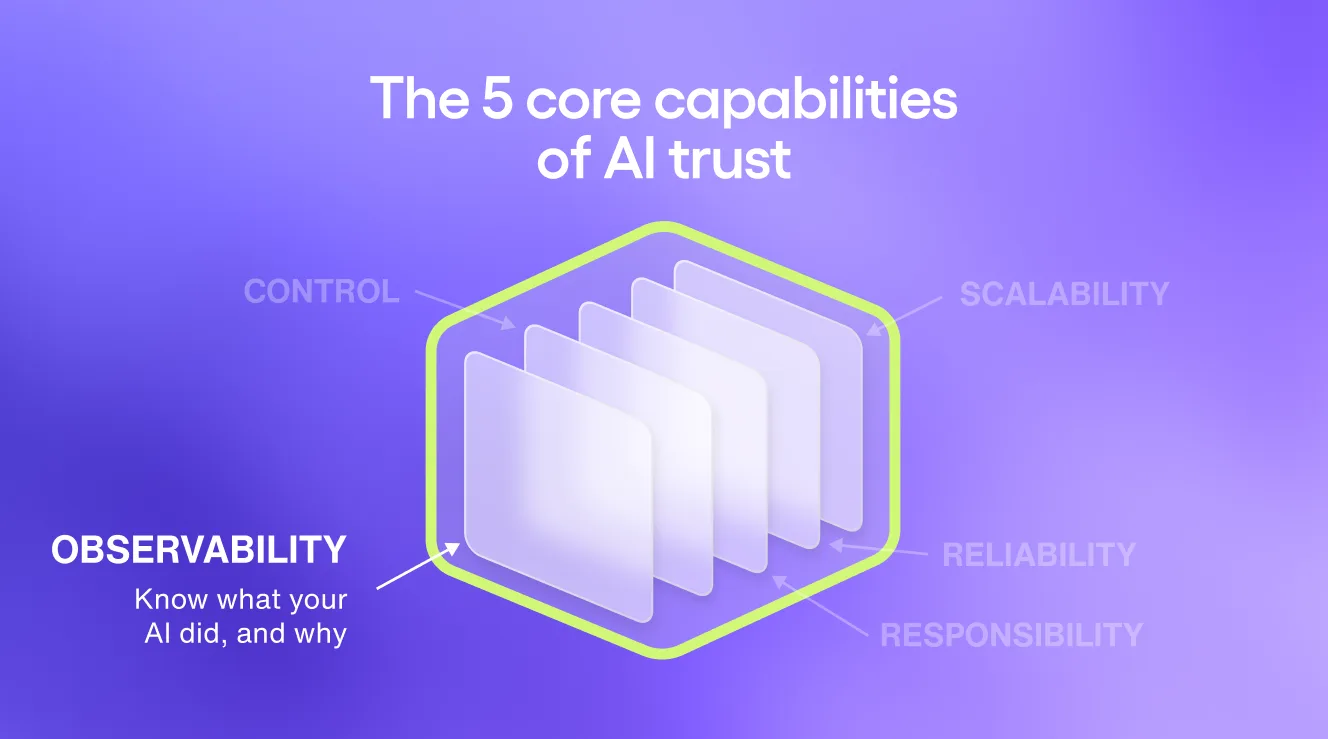

Users must know when they are interacting with AI. Masking AI behind human personas erodes trust and introduces reputational risk. Disclosure is not only ethical; it’s increasingly expected. Transparency through AI observability is also critical to observe and understand an AI agent’s behavior.

• Verified response generation

Outputs should be grounded in verifiable data. This includes retrieval-augmented generation (RAG) methods, enterprise knowledge bases, and dynamic policy references. When answers cannot be verified, the system should refrain from responding or escalate to a human agent.
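As a rough illustration of the "answer only when grounded, otherwise escalate" rule, here is a minimal Python sketch. Everything in it is an assumption made for the example: the knowledge base entries are invented placeholders, the keyword-overlap `retrieve` function stands in for a real retriever, and the agent returns the matched passage verbatim instead of calling a language model.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    source_id: str
    text: str


# Placeholder knowledge base entries, invented for this example.
KNOWLEDGE_BASE = [
    Passage("kb-sessions", "Users can stay signed in on multiple devices at the same time."),
    Passage("kb-billing", "Invoices are emailed on the first day of each billing cycle."),
]

# Common words ignored when matching a question against a passage.
STOPWORDS = {"a", "an", "the", "i", "can", "in", "on", "at", "of", "are", "was", "is", "my"}


def tokenize(text: str) -> set[str]:
    """Lowercase the text, split it into alphanumeric tokens, and drop stopwords."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def retrieve(question: str, min_overlap: int = 2) -> list[Passage]:
    """Naive keyword-overlap retrieval (a stand-in for a real retrieval index)."""
    question_tokens = tokenize(question)
    return [
        passage
        for passage in KNOWLEDGE_BASE
        if len(question_tokens & tokenize(passage.text)) >= min_overlap
    ]


def answer(question: str) -> dict:
    """Answer only from a retrieved passage; escalate when nothing supports a reply."""
    passages = retrieve(question)
    if not passages:
        # No verifiable source: refuse to improvise and hand off to a human.
        return {"status": "escalate", "text": None, "sources": []}
    best = passages[0]
    return {"status": "answered", "text": best.text, "sources": [best.source_id]}
```

With this sketch, a question about staying signed in on multiple devices is answered with a cited source, while a question about a "new login policy" that appears nowhere in the knowledge base is escalated instead of being answered from thin air.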

• AI guardrails and policy enforcement

AI guardrails must be in place to prevent unauthorized responses or actions. When defining AI workflows, grant API access carefully so that each agent can reach only what its task requires. Risk scoring is also a valuable way to increase oversight of actions with financial, security, or reputational implications.
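One way to picture these two controls together is an action allowlist combined with a simple risk score. The sketch below is illustrative only: the action names, the score weights, and the review threshold are hypothetical values chosen for the example, not recommendations.

```python
from dataclasses import dataclass

# Hypothetical allowlist: the only actions this agent may request at all.
ALLOWED_ACTIONS = {"lookup_order", "send_reply", "issue_refund"}

# Hypothetical threshold above which a human must approve the action.
REVIEW_THRESHOLD = 0.5


@dataclass(frozen=True)
class ActionRequest:
    name: str
    amount: float = 0.0  # monetary value involved, if any


def risk_score(request: ActionRequest) -> float:
    """Toy risk score: financial actions score higher, large amounts higher still."""
    score = 0.0
    if request.name == "issue_refund":
        score += 0.3
        if request.amount > 100:
            score += 0.4
    return score


def authorize(request: ActionRequest) -> str:
    """Return 'deny', 'needs_human_review', or 'allow' for a requested action."""
    if request.name not in ALLOWED_ACTIONS:
        return "deny"  # outside the agent's granted scope
    if risk_score(request) >= REVIEW_THRESHOLD:
        return "needs_human_review"
    return "allow"
```

In this sketch, a small refund goes through, a large refund is routed to a human for approval, and an action that was never granted (such as deleting an account) is denied outright.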

• Escalation and human oversight

No AI agent should operate without the ability to escalate. When confidence falls below a set threshold, or the system detects an AI hallucination or out-of-policy behavior, a responsible AI agent must transfer the conversation to a human. A fallback escalation path is a must. Human agents must also be able to turn off the AI agent immediately and revert to a previous, safer version.
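The routing, kill-switch, and rollback behaviors described here can be sketched in a few lines of Python. The `AgentController` class, its method names, and the 0.75 threshold are assumptions made for illustration; how confidence and policy violations are actually measured is left out of scope.

```python
from dataclasses import dataclass, field


@dataclass
class AgentController:
    """Hypothetical controller deciding who handles a conversation."""

    active_version: str
    previous_versions: list[str] = field(default_factory=list)
    enabled: bool = True
    confidence_threshold: float = 0.75  # illustrative value, tune per use case

    def route(self, confidence: float, policy_violation: bool = False) -> str:
        """Return 'ai' only when the agent is enabled, confident, and within policy."""
        if not self.enabled:
            return "human"
        if policy_violation or confidence < self.confidence_threshold:
            return "human"
        return "ai"

    def disable(self) -> None:
        """Kill switch: send all traffic to humans until re-enabled."""
        self.enabled = False

    def deploy(self, version: str) -> None:
        """Activate a new version, remembering the current one for rollback."""
        self.previous_versions.append(self.active_version)
        self.active_version = version

    def rollback(self) -> str:
        """Revert to the most recently replaced version."""
        if not self.previous_versions:
            raise RuntimeError("no earlier version to roll back to")
        self.active_version = self.previous_versions.pop()
        return self.active_version
```

The design choice worth noting is that the human path is the default: the AI handles a conversation only when every check passes, so a missing signal or a disabled agent fails toward human handling.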

• Auditability and accountability

Every AI agent interaction must be logged with traceable context: input, output, source data, agent version, and system state. This supports regulatory compliance, particularly in industries where audit trails are mandatory.
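A log entry carrying the context listed above might look like the following Python sketch. The field names mirror that list; the `record_id` and `timestamp` fields, the function names, and the JSON-lines format are additions assumed for this example.

```python
import json
import uuid
from datetime import datetime, timezone


def build_audit_record(
    user_input: str,
    agent_output: str,
    source_ids: list[str],
    agent_version: str,
    system_state: dict,
) -> dict:
    """Assemble one traceable record for a single agent interaction."""
    return {
        "record_id": str(uuid.uuid4()),  # assumed: unique ID for later lookup
        "timestamp": datetime.now(timezone.utc).isoformat(),  # assumed: UTC time
        "input": user_input,
        "output": agent_output,
        "source_data": list(source_ids),
        "agent_version": agent_version,
        "system_state": dict(system_state),
    }


def to_json_line(record: dict) -> str:
    """Serialize a record as one JSON line, suitable for an append-only log."""
    return json.dumps(record, sort_keys=True)
```

Writing each interaction as a single self-describing line keeps the log easy to append to and easy to search when an answer later needs to be traced back to its sources and agent version.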

Preparing for an agentic customer service future

Cursor’s support failure was the result of a generative AI hallucination, not an autonomous decision. However, AI risk will grow significantly as enterprises move toward AI workforces capable of initiating and executing actions. In industries like healthcare, finance, and legal, the consequences of even a single hallucinated decision can be severe.

To build confidence in these systems, AI must be designed as part of a broader architecture—one that emphasizes AI trust and accountability at every layer. This is not a matter of user experience; it is a matter of operational resilience.

Cursor’s experience should serve as a clear signal to enterprise leaders: the success of AI in production environments depends not just on the sophistication of the model but also on the integrity of the system surrounding it. Deploying AI is not only a technical achievement; it’s also an organizational responsibility.

At Sendbird, we’ve built Trust OS to address this exact challenge. It’s our system-level framework for deploying AI agents in customer-facing environments with confidence. Trust OS brings together control, transparency, oversight, and scale, covering testing, evaluation, boundary setting, risk detection, and a human escalation path.

If you’re ready to invest in AI customer service with a trusted partner, contact us today.