Prompt engineering: A guide to AI prompting for beginners

If you’ve ever been disappointed with the answer from a generative AI tool like ChatGPT, you already know—getting useful results from AI isn't always straightforward.

As AI becomes more embedded in business operations and our daily lives, this raises questions such as:

How do you talk to AI so it does what you want? How do you unlock the full potential of an AI model?

The answer to these questions lies in prompt engineering.

Whether you're new to AI or brushing up on your prompting skills, this comprehensive guide will help you understand the ins and outs of prompt engineering so you can quickly create effective AI prompts.


What is prompt engineering?

Prompt engineering is the process of guiding generative AI tools, particularly LLMs (large language models), to produce the desired outputs. By carefully crafting AI prompts—or the instructions you give to AI—you provide the model with context, examples, and detailed directions that help it respond in a meaningful way.

Think of it like writing a recipe for the AI. Like a chef, you're providing the instructions and the ingredients needed to create the specific output you have in mind. The goal of prompt engineering is to get generative AI to work as intended, and reliably produce useful and contextually relevant outputs.

"Well-crafted prompts direct the LLM to produce accurate, relevant, and contextually appropriate responses."

IT World Canada

What is an AI prompt?

In the context of AI, a prompt is the input you provide to the model to generate a specific response. In other words, it’s the set of instructions you give to a generative model to guide its output.

These instructions can take various forms, ranging from a simple question to multi-step instructions to code snippets. They can also include multimodal inputs such as pictures and audio.

Ultimately, the quality and relevance of the AI’s output is directly influenced by the effectiveness of the AI prompt.

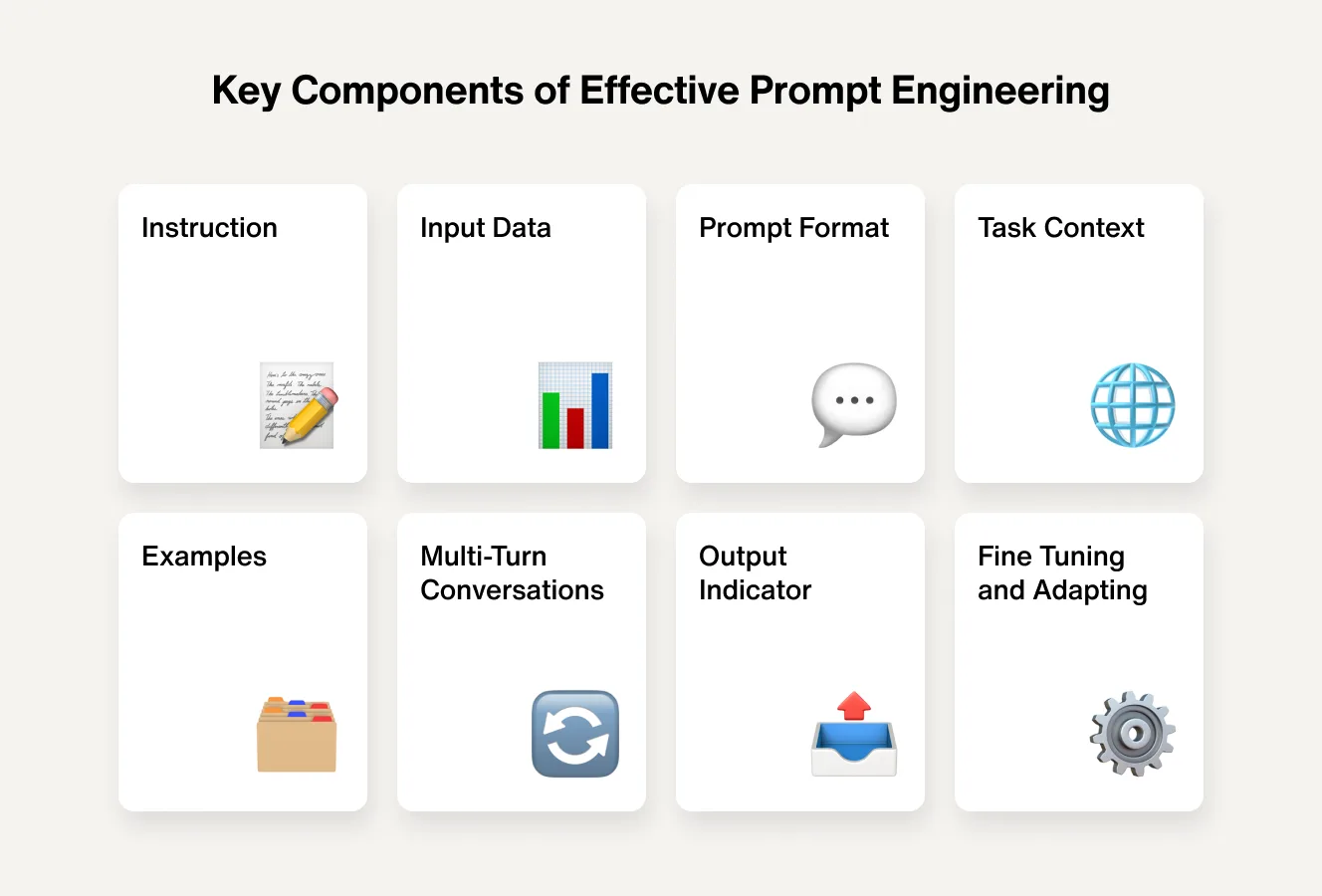

Key components of effective prompt engineering

Prompt engineering is an iterative process that guides and fine-tunes model behavior by creating prompts that are clear, concise, and consistent.

The challenge is that AI models are as open-ended as they are powerful. They can perform a variety of tasks, such as summarizing texts, writing code, and answering questions, all from as little as a single keyword input.

AI models work by predicting the most likely next piece of text (token) based on the prompt given. However, because they have no innate sense of context, they rely heavily on the subtleties of human language to direct their output.

For this reason, it can help to think of prompt engineering as ‘programming a machine with words’ to get back a certain response. As a result, the key to effective prompt engineering is using clear language and providing lots of detail and context.

This typically involves the following elements:

Instruction: The core of the prompt, the instruction provides an understanding of the task at hand, helping the model to frame its output appropriately. For example, “Summarize this text” provides a clear action to take. The more clear, concise, and specific the instructions, the more likely the LLM is to produce a response that meets your exact requirements.

Prompt format: The structure of your prompt plays a key role in how the model interprets and responds to your input. Prompt formats can range from simple questions in natural language to direct commands to structured inputs with specific fields.

Task context: Context provides additional information that helps the model understand the broader scenario or background. For instance, "Considering the rise of AI, create a business plan" gives the model a backdrop against which to frame its response.

Examples: Examples greatly improve the model’s understanding and performance on a task, as they serve as concrete illustrations of the expected output. For instance, you can drop in text from an existing piece of content you want to emulate to guide output and improve results.

Input data: This includes any specific information or data you want the model to process for a given task. It could be a whole book, a set of numbers, or a single phrase.

Multi-turn conversations: Designing prompts for multi-turn conversations allows users to engage in continuous and context-aware interactions with the AI model, enhancing the overall user experience.

Output indicator: This element provides the model with a known format or type for its output. For example, "In the style of J.K. Rowling, rewrite the following sentence" gives the model a stylistic direction, which can be especially helpful in roleplaying scenarios.

Fine-tuning: Fine-tuning the AI model on specific tasks or domains using tailored prompts can enhance its performance. Additionally, adapting prompts based on user feedback or model outputs can further improve the model's responses over time.
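
To make the elements above concrete, here is a minimal sketch of how several of them (instruction, task context, examples, input data, and output indicator) could be combined into a single prompt string. The `build_prompt` helper and its section labels are illustrative assumptions, not a standard format; any consistent labeling would serve the same purpose.

```python
def build_prompt(instruction, context="", examples=(), input_data="", output_indicator=""):
    """Join the non-empty prompt components into one labeled prompt string.

    The labels ("Context:", "Instruction:", ...) are a hypothetical convention
    chosen for this sketch.
    """
    sections = []
    if context:
        sections.append(f"Context: {context}")
    sections.append(f"Instruction: {instruction}")
    for number, example in enumerate(examples, start=1):
        sections.append(f"Example {number}: {example}")
    if input_data:
        sections.append(f"Input: {input_data}")
    if output_indicator:
        sections.append(f"Output format: {output_indicator}")
    # A blank line between sections keeps each component visually distinct.
    return "\n\n".join(sections)


prompt = build_prompt(
    instruction="Summarize this text.",
    context="The summary is for a busy executive.",
    input_data="<paste the text here>",
    output_indicator="Three bullet points.",
)
print(prompt)
```

Only the instruction is required here; the other components are optional, which mirrors how a prompt can start simple and grow as you add context.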


Key prompt engineering techniques: Types of AI prompts

You can craft prompts for AI in various ways, depending on the desired output. The most common of these prompt engineering techniques include:

Direct prompts (Zero-shot)

Zero-shot prompting provides the model with a direct instruction or question without any examples (aka shots). This is useful for broad, open-ended queries, such as when you ask the model to summarize a text, translate a text, or generate new ideas or brainstorm solutions.

Example prompt: “Explain what a large language model is.”

One-, few-, and multi-shot prompts

This method provides one or more examples (shots) of the desired input-output pairs before presenting the actual prompt. This context helps the model to better understand the task and generate more accurate responses.

Example one-shot prompt: "Basketball is a game of dribbling and shooting. Explain why it's popular."

Example few-shot prompt: "Basketball involves dribbling and shooting, and soccer requires kicking and passing. What skills are important for tennis?"
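
When the examples are explicit input-output pairs, a few-shot prompt can be assembled programmatically. The sketch below borrows the Rain/Snow/Hail definitions used later in this guide; the `few_shot_prompt` helper and the `Input:`/`Output:` labels are assumptions made for illustration.

```python
def few_shot_prompt(examples, query):
    """Format (input, output) pairs as shots, then leave the final output open."""
    lines = []
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")  # blank line between shots
    lines.append(f"Input: {query}")
    lines.append("Output:")  # the model is expected to continue from here
    return "\n".join(lines)


shots = [
    ("Rain", "Water falling from the sky in droplets."),
    ("Snow", "Frozen crystals falling from the sky."),
]
prompt = few_shot_prompt(shots, "Hail")
print(prompt)
```

Ending the prompt on an open `Output:` line signals that the model should answer in the same pattern as the shots above it.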

Chain of Thought (CoT) prompts

This involves asking the model to break complex reasoning down into a series of steps. By guiding the model from one step to the next, this can lead to a more comprehensive, useful, and well-structured output.

Example prompt: “Describe the process of building an AI agent, from start to finish.”

Zero-shot CoT prompts

This method guides a model through a series of steps without examples, aiming to leverage the model's inherent knowledge. It can involve adding a phrase like "Let's think step by step" to the prompt, which invites the model to articulate its reasoning and can help to produce a better output.

Example prompt: "Is 523 divisible by 3? Explain your reasoning."
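
As a rough sketch, the "Let's think step by step" cue can be appended to any question with a small helper. The `with_zero_shot_cot` function is a hypothetical name; the digit-sum check simply shows the reasoning a good answer to the example question should contain.

```python
COT_TRIGGER = "Let's think step by step."


def with_zero_shot_cot(question):
    """Append the step-by-step cue to a question, with no examples added."""
    return f"{question.strip()}\n\n{COT_TRIGGER}"


def digit_sum(number):
    """Sum the decimal digits; a number is divisible by 3 only if this sum is."""
    return sum(int(digit) for digit in str(abs(number)))


prompt = with_zero_shot_cot("Is 523 divisible by 3?")
print(prompt)

# Expected reasoning: 5 + 2 + 3 = 10, and 10 is not a multiple of 3.
print(digit_sum(523), 523 % 3 == 0)  # prints: 10 False
```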

Prompt engineering examples and use cases

AI models are powerful tools with various applications. Whether you're looking to generate content, analyze documents, answer questions, create visuals, or write code, a well-crafted prompt is how you shape the output's style, structure, and content to your needs.

Here are some key examples and use cases for prompt engineering:

Language and text generation

| Scenario | Instructions | Example AI prompt |
| --- | --- | --- |
| Creative writing | Guide the AI by specifying genre, character archetypes, and setting for imaginative content. | "Write a sci-fi scene where a detective interrogates a robot accused of theft." |
| Summarization | Give the AI long-form text and request a concise abstract that preserves the key takeaways. | "Summarize the main arguments from this essay on the ethics of gene editing." |
| Translation | Clearly state the source and destination languages to preserve cultural and contextual meaning. | "Translate this sentence from French to German: 'La vie est belle.'" |
| Dialogue | Set up conversational roles or scenarios for dynamic, contextual AI replies. | "Act as a travel agent helping a family plan a vacation to Italy. Start the chat." |

Question answering

| Scenario | Instructions | Example AI prompt |
| --- | --- | --- |
| Open-ended questions | Encourage the AI to explain or elaborate using detailed reasoning and contextual understanding. | "How does the human immune system differentiate between self and non-self cells?" |
| Specific questions | Request focused, factual answers that pull from either AI knowledge or user-provided context. | "What is the tallest mountain in Africa?" or "According to the article, what causes inflation?" |
| Multiple choice questions | Present options and ask the AI to select and justify the best answer. | "Which element is a noble gas? A) Oxygen B) Helium C) Nitrogen" |
| Hypothetical questions | Ask the AI to explore imaginary scenarios and predict potential consequences or outcomes. | "What might society look like if cars were replaced entirely by autonomous drones?" |
| Opinion-based questions | Ask the AI to articulate a position and support it with rationale or evidence. | "Should social media platforms be regulated by governments? Why or why not?" |

Code generation

| Scenario | Instructions | Example AI prompt |
| --- | --- | --- |
| Code completion | Provide a code snippet or goal and ask the AI to finish or extend it logically. | "Complete this JavaScript function to filter even numbers from an array." |
| Code translation | Specify the languages and instruct the AI to maintain logic while converting syntax. | "Convert this Ruby method to Python: def add(a, b) return a + b" |
| Code optimization | Ask the AI to analyze and enhance code for better readability or speed. | "Improve the efficiency of this SQL query that selects customers from the last 12 months." |
| Code debugging | Present problematic code and prompt the AI to detect and fix logic or syntax issues. | "Fix this Python code that’s returning a division by zero error and explain the fix." |

Image generation

| Scenario | Instructions | Example AI prompt |
| --- | --- | --- |
| Photorealistic images | Describe the scene with clarity, including environment, subject, and lighting. | "A high-resolution image of a child blowing dandelions in a sunlit meadow during spring." |
| Artistic images | Specify style, medium, and subject to generate visually expressive artwork. | "A cubist painting of a jazz band playing in a smoky 1920s bar." |
| Abstract images | Focus on moods or ideas, encouraging the AI to use symbolism and design elements. | "An abstract visual of the feeling of anxiety using dark tones, sharp lines, and swirling forms." |
| Image editing | Give clear instructions for modifying an existing image or composite creation. | "Add a rainbow over the waterfall in this photo." or "Replace the background with a futuristic city skyline." |


Prompt engineering best practices

Prompt engineering relies on providing specific instructions to get specific outputs. By providing the model with a set of meaningful constraints, you can get it to think and create in alignment with your wishes.

You can follow these prompt engineering best practices to quickly develop effective prompts:

1. Set clear goals and objectives

| Tactic | AI prompt example |
| --- | --- |
| Specify the desired action using active verbs | "Generate a timeline that outlines the major milestones of the American Civil Rights Movement." |
| Define the desired length and format of the output | "Write a 200-word executive summary of the attached product strategy document." |
| Specify the target audience | "Draft an email campaign introducing our new budgeting app to college students with limited income." |

2. Provide context and background information

| Tactic | AI prompt example |
| --- | --- |
| Include relevant facts and data | "Given that remote work increased by 300% between 2020 and 2022, discuss its impact on urban economies." |
| Reference specific sources or documents | "Using the attached customer feedback data, summarize the top three pain points experienced by users." |
| Define key terms and concepts | "Define 'blockchain consensus mechanism' for someone with no background in computer science." |

3. Use few-shot prompting (include examples)

| Tactic | AI prompt example |
| --- | --- |
| Provide a few examples of desired input-output pairs | Input: "Rain" Output: "Water falling from the sky in droplets." Input: "Snow" Output: "Frozen crystals falling from the sky." Prompt: "Hail" |
| Demonstrate the desired style or tone | Example 1 (casual): "This phone is lightning fast and takes killer photos." Example 2 (technical): "The device features a Snapdragon 8 Gen 2 chipset." Prompt: "Write a product description for a smart watch." |
| Show the desired level of detail | Example 1 (simple): "A cat is an animal." Example 2 (detailed): "A cat is a small, carnivorous mammal often kept as a house pet for companionship." Prompt: "Describe a giraffe." |

4. Be specific

| Tactic | AI prompt example |
| --- | --- |
| Use precise language and avoid ambiguity | Instead of: "Write something about social media," use: "Write a critical analysis of TikTok’s impact on teen mental health." |
| Quantify your requests whenever possible | Instead of: "Write a short story," use: "Write a 300-word science fiction story set on Mars in the year 2150." |
| Break down complex tasks into smaller steps | Instead of: "Design a product launch strategy," use: "1. Define the target market. 2. Identify key messaging. 3. Select distribution channels." |

5. Iterate and experiment

| Tactic | Action |
| --- | --- |
| Try different phrasings and keywords | Rephrase "Summarize the article" as "Highlight the top three takeaways from the article in bullet points." |
| Adjust the level of detail and specificity | Add more context (e.g., audience, tone, format) or remove it to see how the output shifts. |
| Test different prompt lengths | Compare outputs from "List some benefits of AI" vs. "List five practical benefits of using AI in supply chain logistics." |

6. Leverage chain of thought prompting

| Tactic | AI prompt example |
| --- | --- |
| Encourage step-by-step reasoning | "Solve step-by-step: If a car travels at 60 mph for 2.5 hours, how far does it go? Step 1: Multiply speed by time..." |
| Ask the model to explain its reasoning process | "Why do you consider the tone of this tweet to be sarcastic? Break down your analysis." |
| Guide the model through a logical sequence of thought | "To recommend a book, follow these steps: 1. Identify the reader’s age. 2. Ask about genre preferences. 3. Match accordingly." |

7. Use cues to guide tone, role, or format

| Tactic | AI prompt example |
| --- | --- |
| Assign a role to the AI to evoke a specific perspective and expertise | "You are a hiring manager at a tech startup. Write interview feedback for a junior frontend developer candidate." |
| Specify the structural format of the output | "Respond as a bulleted list of pros and cons regarding the use of biometric authentication in smartphones." |
| Signal the tone or voice you want the AI to adopt | "You are a witty social media manager. Write a playful tweet announcing our new app feature." |

Dive deeper: Google: 5 best practices for prompt engineering

What are the benefits of prompt engineering?

Done well, prompt engineering enables you to harness the vast potential of generative AI models for any number of specific applications.

With AI becoming more embedded in our personal and professional lives, prompt engineering is shaping up to be a highly valuable skill that not only improves personal efficiency, but also leads to better business outcomes.

Other key benefits of prompt engineering include:

Improved model performance: Prompt engineering can significantly enhance the accuracy and relevance of AI outputs.

Reduced bias and risk: By clearly defining perspectives or contextual requirements, well-designed prompts can help mitigate inherent biases in an AI model’s training data.

Greater control and predictability: By standardizing prompt formats and using system-level instructions, businesses can encourage consistent responses, helping to mitigate risk and ensure AI compliance.

Enhanced user experience (UX): Good prompts lead to more intuitive, helpful, user-friendly AI interactions that reduce misunderstandings and align outputs with user expectations.


11 Prompt engineering tips

Prompt engineering is an evolving field that’s both an art and a science—requiring a mix of creativity and precision to get desired outputs. As a science, it involves methodical experimentation, logical structuring, and data-driven tweaking to reliably produce quality outputs. As an art, it requires intuition, linguistic finesse, and an understanding of human psychology to craft prompts that elicit the most useful and relevant responses.

These prompt engineering tips can help to guide your thinking:

1. Prompt like you're talking with a person

Include the level of detail and context you'd use when explaining a problem to a child, rather than just entering keywords as you would when searching on Google.

Example: Instead of just entering the term "AI agent," ask a complete question: "How can you use an AI agent?"

2. Assign a role

By asking the model to behave as a specific persona, you get outputs that better align with the expertise and perspective of that field.

Example: "You are a corporate lawyer. Evaluate this AI governance strategy in the context of current regulations on AI compliance."

3. Break complex tasks into steps

LLMs often perform better if the prompt is broken down into smaller steps, as it helps them to think through each step in sequence rather than all at once.

Example: Create a multi-step prompt such as: “Step 1: Extract 3–5 factual claims from the text below. Step 2: For each extracted claim, write a search query that could be used to verify it using reliable sources.”

4. Balance openness and specificity

Models need goals and context, but there’s value to leaving prompts open-ended, especially in creative fields. Models can produce novel insights, ideas, and content if their word choice, tone, and structure aren’t too tightly defined.

Example: Try something like “Create a catchy slogan for an eco-friendly water bottle. The message should appeal to environmentally conscious young adults and highlight sustainability. Use your judgment to make it memorable.”

5. Use clear syntax

Using clear syntax in your prompt, such as punctuation, headings, and section markers, helps communicate intent and makes the prompt easier for the model to parse, especially in multi-step prompts. This tends to lead to more structured, accurate outputs.

Example: Try using syntax like: "STEP 1: Summarize the text below. --- STEP 2: Generate three search queries based on your summary. ---" The --- acts as a clear stopping point between steps, and uppercase headings help the model distinguish phases of the task.
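
The same structure can be generated in code, which helps keep the markers consistent across prompts. This is a minimal sketch: the `delimited_prompt` helper, the `TEXT:` label, and the default `---` delimiter are illustrative choices, not a required convention.

```python
def delimited_prompt(steps, source_text, delimiter="---"):
    """Number each step in uppercase and close it with a delimiter line."""
    lines = []
    for number, step in enumerate(steps, start=1):
        lines.append(f"STEP {number}: {step}")
        lines.append(delimiter)
    # Keep the source material in its own clearly labeled section.
    lines.append("TEXT:")
    lines.append(source_text)
    return "\n".join(lines)


prompt = delimited_prompt(
    steps=[
        "Summarize the text below.",
        "Generate three search queries based on your summary.",
    ],
    source_text="<paste the text here>",
)
print(prompt)
```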

6. Repeat instructions at the end

Models can be susceptible to recency bias, meaning information at the end of the prompt can have more influence over the output than information at the beginning. Repeating instructions at the end of the prompt can help ensure a more relevant, consistent output.

Example: Instead of ending a prompt abruptly, bookend it with the instructions you started with to help the model stay on task from start to finish.

7. Give the model an “out”

AI models can hallucinate, or confidently generate false outputs, when they don't have enough information. To help prevent this, build a fallback option into the prompt that tells the model what to do if it can’t confidently complete the task.

Example: For reviewing a text, you could include phrases such as “If the content is accurate, evaluate, summarize, and include” or “If inaccurate, omit but include footnote as to why.”

8. Try conditional execution

This involves telling the model to perform an action only if specific criteria are met. Akin to "if-then" logic, it lets the AI skip, adapt, or add steps as needed. By controlling model behavior based on input, it helps prevent hallucinations and supports multi-step workflows where every step isn’t always needed.

Example: For an AI concierge, a prompt could say: “If the user mentions a technical error, initiate a step-by-step troubleshooting workflow. If it's a general question, provide a brief explanation.”

9. Take an iterative approach

It can help to begin with a broad prompt and gradually refine it based on the model’s responses. Sometimes described as prompt chaining, this approach adds constraints one by one, and can use the model’s outputs as a feedback loop to inform adjustments. The refinements can later be combined into one long prompt.

Example: Start with a basic prompt like “Describe how AI can improve customer service,” and based on output, refine it further—e.g., “Now focus on enterprise companies and include examples of key tools.”
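
In code, this kind of refinement usually means keeping a running conversation history and appending each follow-up to it. The sketch below assumes the common role/content message convention used by many chat APIs; `refine` and `fake_model` are hypothetical names, and `fake_model` is only a stand-in for a real model call.

```python
def refine(model, first_prompt, follow_ups):
    """Send a broad prompt, then apply each follow-up on top of the history."""
    messages = [{"role": "user", "content": first_prompt}]
    messages.append({"role": "assistant", "content": model(messages)})
    for follow_up in follow_ups:
        messages.append({"role": "user", "content": follow_up})
        # The model sees the whole history, so each refinement builds on the last.
        messages.append({"role": "assistant", "content": model(messages)})
    return messages


def fake_model(messages):
    """Stand-in for a real API call: echoes the latest user message."""
    return f"(reply to: {messages[-1]['content']})"


history = refine(
    fake_model,
    "Describe how AI can improve customer service.",
    ["Now focus on enterprise companies and include examples of key tools."],
)
for message in history:
    print(f"{message['role']}: {message['content']}")
```

Once the follow-ups produce the output you want, the constraints they introduced can be folded back into a single, longer first prompt.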

10. Ask AI

AI models are knowledgeable on many subjects, including prompt engineering. To identify areas for improvement, ask the model to analyze your prompt, or to provide a prompt template or a meta prompt to guide your efforts.

Example: Ask: “How would you rewrite this prompt to be more effective?” or “What additional context would improve this prompt?”

11. Think like a linguist

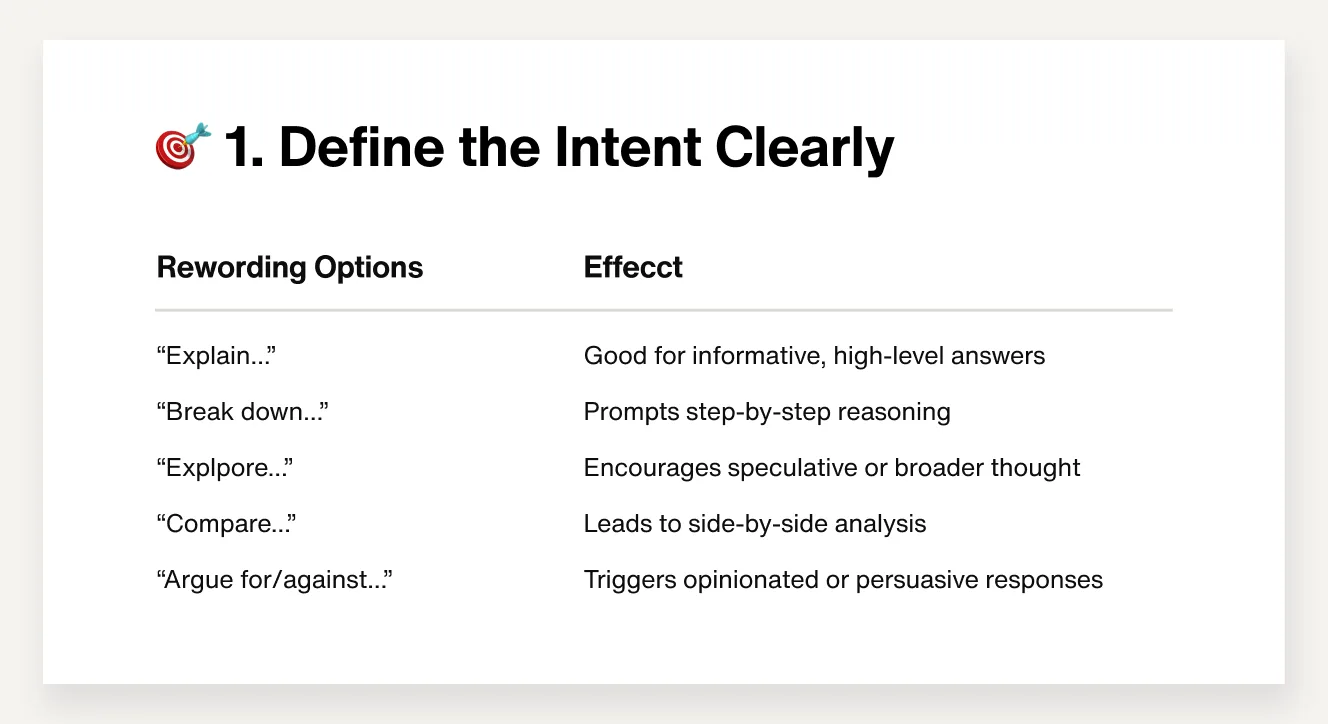

AI models over-index on human language, so it's not just what you ask—but how you ask. Nuanced tweaks to word choice, conjugation, and sentence structure can all lead to different outputs.

Example: In the image below, ChatGPT explains how subtle differences in word choice of AI prompts can lead to different outputs.

Related reading: How to write the best AI prompts

The technical aspects of prompt engineering

A well-crafted prompt is the foundation for successful interactions with AI models. As AI becomes more embedded in business operations, guiding models to provide accurate, useful outputs will be increasingly crucial for developers, business leaders, and end users, as well as for the emerging role of prompt engineer.

Here’s a quick overview of the technical side of prompt engineering:

Model calibration and fine-tuning

In addition to refining the prompt itself, you can also calibrate or fine-tune the AI model. This involves adjusting the model’s parameters to better suit specific tasks or datasets. This is an advanced practice, but it can greatly improve model performance for specialized use cases.

Model architecture

The architecture of a language model—such as transformer-based designs like GPT or BERT—determines how it processes context, attention, and memory. For enterprise deployments, understanding the architectural trade-offs (e.g., model size, inference speed, latency) can influence which model is best suited for real-time AI customer service versus, say, data analysis.

Training data and tokenization

Models are trained on vast datasets, the content of which shapes their knowledge and behavior. Similarly, the choice of tokenization, or the method the model uses to break language into pieces it can process, can also impact the model’s output. For instance, compound words or rare terms may be split inefficiently, which can dilute meaning and affect output relevance.
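
To illustrate how a rare term can be split into many small pieces, here is a toy greedy longest-match tokenizer. It is a deliberately simplified sketch: the tiny vocabulary is invented for this example, and production tokenizers (such as byte-pair encoding variants) learn their vocabularies from data and behave differently in detail.

```python
def greedy_tokenize(word, vocab):
    """Split a word by repeatedly taking the longest piece found in vocab."""
    tokens = []
    start = 0
    while start < len(word):
        # Try the longest remaining substring first, then shrink.
        for end in range(len(word), start, -1):
            if word[start:end] in vocab:
                tokens.append(word[start:end])
                start = end
                break
        else:
            # No known piece matched: fall back to a single character.
            tokens.append(word[start])
            start += 1
    return tokens


# Hypothetical vocabulary: common pieces are whole, rare ones are fragments.
VOCAB = {"sun", "flower", "qu", "o", "k", "a"}

print(greedy_tokenize("sunflower", VOCAB))  # ['sun', 'flower']
print(greedy_tokenize("quokka", VOCAB))     # ['qu', 'o', 'k', 'k', 'a']
```

The common compound splits into two meaningful pieces, while the rarer word breaks into five fragments that carry little meaning on their own.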

Model parameters

A range of configurable parameters influence the behavior of AI models. When crafting and testing AI prompts, adjusting these settings can help improve the consistency, relevance, and quality of the outputs.

This is usually done through an API, and requires some trial and error to find the optimal configuration, as different use cases may benefit from different parameter combinations.

Temperature and top-k sampling

These two LLM parameters control the randomness and diversity of AI-generated responses. They have a direct impact on the creativity and novelty of AI responses.

Temperature determines how adventurous the model is. A low temperature (e.g., 0.2) yields more deterministic, safe responses that are ideal for, say, customer service AI agents. A higher temperature (e.g., 0.8+) is better for creative tasks in, say, marketing.

Top-k sampling limits the model’s choices to the top “k” most likely next tokens, controlling variety and coherence. Depending on your goal, lowering k keeps responses focused and can reduce off-target or false responses (hallucinations), while raising it encourages novelty.
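
The interaction between the two settings can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the candidate words and their scores (logits) are made up, and real inference stacks apply these steps over a full vocabulary and may order or combine them differently.

```python
import math
import random


def next_token_probs(logits, temperature=1.0, top_k=None):
    """Return {token: probability} after top-k filtering and temperature scaling."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Rank candidates by score and keep only the k most likely, if requested.
    ranked = sorted(logits.items(), key=lambda item: item[1], reverse=True)
    if top_k is not None:
        ranked = ranked[:top_k]
    # Dividing by a low temperature sharpens the distribution; a high one flattens it.
    scaled = [score / temperature for _, score in ranked]
    peak = max(scaled)
    weights = [math.exp(value - peak) for value in scaled]
    total = sum(weights)
    return {token: weight / total for (token, _), weight in zip(ranked, weights)}


def sample(logits, temperature=1.0, top_k=None):
    """Draw one token at random according to the adjusted probabilities."""
    probs = next_token_probs(logits, temperature, top_k)
    return random.choices(list(probs), weights=list(probs.values()), k=1)[0]


# Hypothetical scores for four candidate next words.
logits = {"help": 2.0, "assist": 1.5, "serenade": 0.2, "bamboozle": -1.0}

cold = next_token_probs(logits, temperature=0.2, top_k=2)
hot = next_token_probs(logits, temperature=1.5)
print(cold)
print(hot)
```

With the low temperature and `top_k=2`, nearly all of the probability lands on the top candidate and the two unlikely words are excluded entirely; with the higher temperature and no cutoff, the probability spreads across all four.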

Loss functions and gradients

Relevant in the model’s training phase, loss functions measure how far the model’s predictions are from the correct answer, and gradients guide how the model learns. While you don’t interact with these directly when prompting, understanding them is helpful in training or fine-tuning models, especially when trying to encourage or suppress specific behaviors.
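
As a small worked illustration, the sketch below computes a cross-entropy loss for one prediction and takes a single gradient step that lowers it. It is heavily simplified: the three scores are invented, and the step adjusts the scores directly, whereas real training adjusts the model's internal weights across many examples.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


def cross_entropy(logits, target):
    """Loss is low when the correct option gets high probability."""
    return -math.log(softmax(logits)[target])


def gradient(logits, target):
    """Gradient of the loss with respect to each score: probability minus one-hot target."""
    probs = softmax(logits)
    return [p - (1.0 if index == target else 0.0) for index, p in enumerate(probs)]


logits = [1.0, 2.0, 0.5]  # hypothetical scores for three candidate tokens
target = 0                # index of the correct token
learning_rate = 0.5

loss_before = cross_entropy(logits, target)
# Move each score a small step against its gradient.
logits = [x - learning_rate * g for x, g in zip(logits, gradient(logits, target))]
loss_after = cross_entropy(logits, target)

print(loss_before, loss_after)
```

After the step, the score of the correct token rises relative to the others, so the loss printed second is smaller than the first.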

Model differences

Not all LLMs behave or perform the same. Even when given identical prompts, different models (such as OpenAI’s GPT-4, Anthropic’s Claude, Google’s Gemini, or Meta’s LLaMA) can produce dramatically different outputs in terms of tone, accuracy, reasoning depth, factuality, and efficiency. It’s worth A/B testing prompts to determine which model is most aligned with your use case.
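
A basic A/B test can be as simple as running the same prompts through each model and averaging a score. The harness below is a hedged sketch: `compare_models` is a hypothetical helper, the two "models" are stand-in functions rather than real API clients, and the brevity-based scoring rule is a placeholder for whatever quality measure fits your use case.

```python
def compare_models(models, prompts, score):
    """Return the average score for each model name across all prompts."""
    results = {}
    for name, generate in models.items():
        scores = [score(prompt, generate(prompt)) for prompt in prompts]
        results[name] = sum(scores) / len(scores)
    return results


# Stand-ins for real model calls (assumptions for this sketch).
models = {
    "model_a": lambda prompt: prompt.upper(),
    "model_b": lambda prompt: "Short answer.",
}


def brevity_score(prompt, output):
    """Placeholder metric: reward outputs of five words or fewer."""
    return 1.0 if len(output.split()) <= 5 else 0.0


prompts = ["Explain what a large language model is.", "Summarize this text."]
results = compare_models(models, prompts, brevity_score)
best = max(results, key=results.get)
print(results, best)
```

In practice, the scoring function is the hard part; human review or task-specific checks often work better than a single automatic metric.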

Dive deeper: Prompt engineering guide: LLM Settings

The future of prompt engineering

Prompt engineering is a rapidly evolving field. Transformer-based models are seeing widespread adoption across industries, research fields, and consumer applications. According to McKinsey, generative AI adoption leapt from 55% to 75% in 2024 among business leaders, signaling the growing importance of prompt engineering in competitive operations.

Some key prompt engineering trends to watch in 2025 include:

Evolving model capabilities

The latest AI models (OpenAI’s GPT-4.5 or Meta’s LLaMA 4) show a stronger grasp of natural language, and can often infer user intent with minimal input. Meanwhile, models such as Google’s Gemini 1 have demonstrated agent-like abilities to handle complex tasks autonomously. These capabilities could lessen the reliance on specialized prompt engineering.

Auto-prompting

AI systems can now generate their own prompts based on users' inferred goals or desired outputs. For example, Google’s Gemini (formerly Bard) and OpenAI’s latest language models can automatically suggest prompts to refine the original, effectively guiding users without requiring them to know how to meticulously design prompts.

Tool-using LLMs

Thanks to advances in agentic AI, LLMs will increasingly use external tools, APIs, and plugins to retrieve data and formulate outputs. As a result, prompt engineering will shift from focusing solely on language to also include tool orchestration. This will require prompt engineers to guide the AI in using the right tools at the right time for optimal results.

Multimodal prompt engineering

AI models have evolved to process a range of inputs—including text, images, audio, and code—so prompt engineering will expand to harness these multimodal capabilities. Crafting effective prompts will increasingly involve guiding AI to interpret, generate, and connect across multiple data formats, enabling more sophisticated and dynamic interactions.

Prompt templates

As AI models see greater adoption, organizations will likely develop libraries of optimized prompts, making prompt engineering a modular and reusable process. This will involve creating scalable templates and maintaining prompt libraries for various use cases. Prompt templates may soon be for sale.
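
A prompt library can start out as nothing more than a dictionary of named templates. The sketch below uses Python's built-in `string.Template`; the library contents, the template names, and the `render` helper are illustrative assumptions rather than an established standard.

```python
from string import Template

# Hypothetical prompt library: each entry is a reusable, named template.
PROMPT_LIBRARY = {
    "summarize": Template(
        "Write a ${length}-word summary of the text below for ${audience}.\n\n"
        "TEXT:\n${text}"
    ),
    "role_feedback": Template(
        "You are ${role}. Write feedback on the following work:\n\n${work}"
    ),
}


def render(name, **fields):
    """Fill a named template; a missing field raises KeyError instead of passing silently."""
    return PROMPT_LIBRARY[name].substitute(**fields)


prompt = render(
    "summarize",
    length=200,
    audience="executives",
    text="<paste the document here>",
)
print(prompt)
```

Using `substitute` rather than `safe_substitute` is a deliberate choice here: a template with an unfilled placeholder fails loudly before it ever reaches a model.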

Integration with domain-specific models

The rise of domain-specific AI models and small language models will enable prompt engineering to reliably deliver more precise outputs for specific use cases. Specialized models are trained on industry-specific data, leading to more accurate, relevant outputs in fields such as medicine, law, and finance.


The role of a prompt engineer

The role of prompt engineers is to ensure AI models live up to their full potential. Part model whisperer, part testing engineer—this dynamic, evolving role typically involves a combination of computer science, linguistics, and psychology.

Market context

As AI models become a business staple, organizations are seeking professionals who can extract reliable, domain-specific outputs from their models.

According to one study, the global market for prompt engineering is expected to grow at 32.8% CAGR from 2024 to 2030. According to LinkedIn, prompt writing is among the top in-demand skills for AI-adjacent roles.

Technical skills

Effective prompt engineers benefit from skills in natural language processing, basic programming (especially Python), and familiarity with AI APIs such as those from OpenAI, Anthropic, or Google Vertex AI. Understanding model capabilities, limitations, and token constraints is also key to mastering prompt engineering and crafting optimized, scalable prompts.

A new discipline, "PromptOps," is also emerging. This focuses on managing, versioning, and deploying prompts in production workflows. This will involve tracking prompt performance through APIs, logs, and metrics.

Non-technical skills

Strong writing, critical thinking, and communication skills are essential. Prompt engineers must think creatively, reason through ambiguity, and structure language clearly to influence how AI interprets tasks and delivers value across different use cases.

Salary range

ZipRecruiter data indicates that prompt engineer jobs in the United States have an average annual pay of $62,977, with some salaries potentially reaching $95,500 or higher.