What is natural language processing (NLP)?

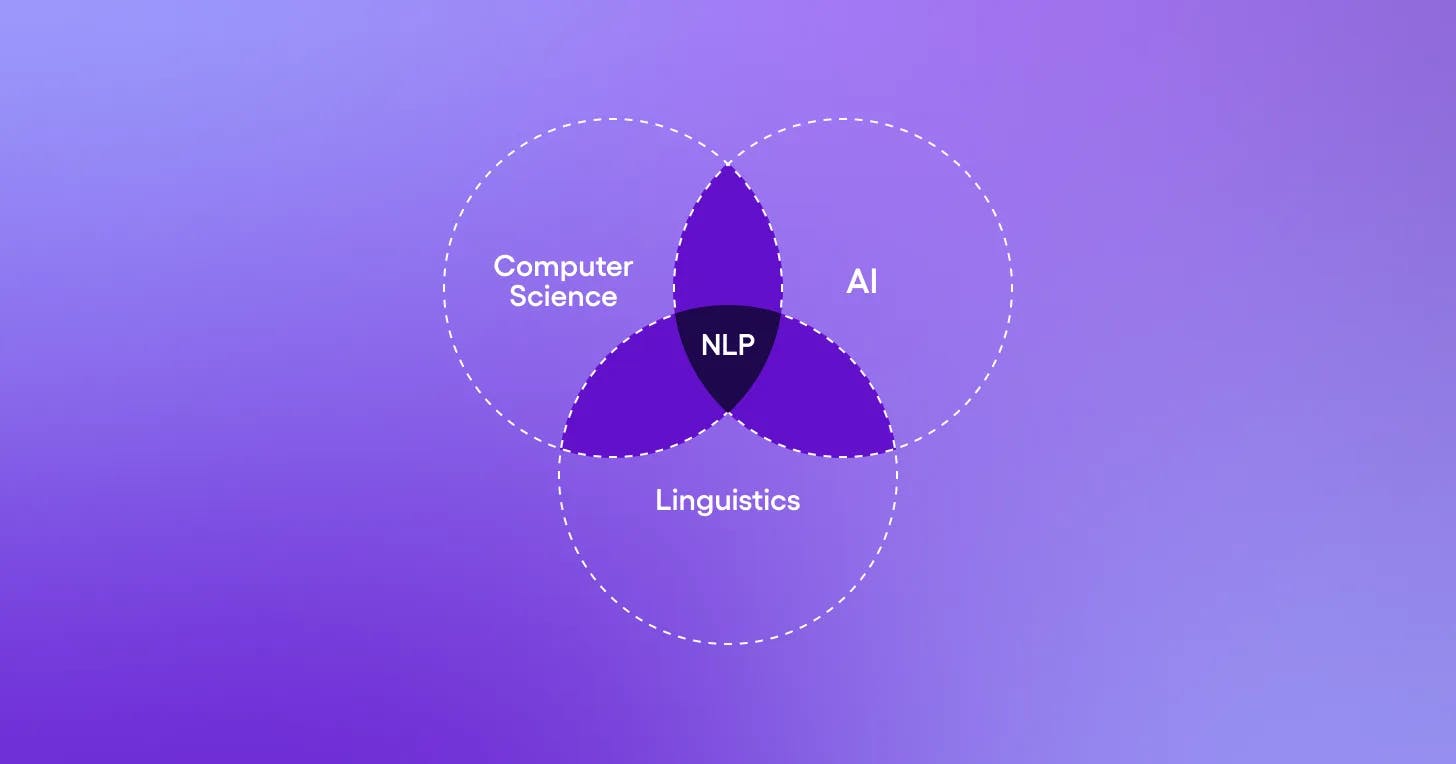

Natural Language Processing, or NLP, is a subset of artificial intelligence (AI) that forms the bridge between human communication and computer understanding. In particular, NLP focuses on enabling computers to interpret, generate, and make sense of human language meaningfully.

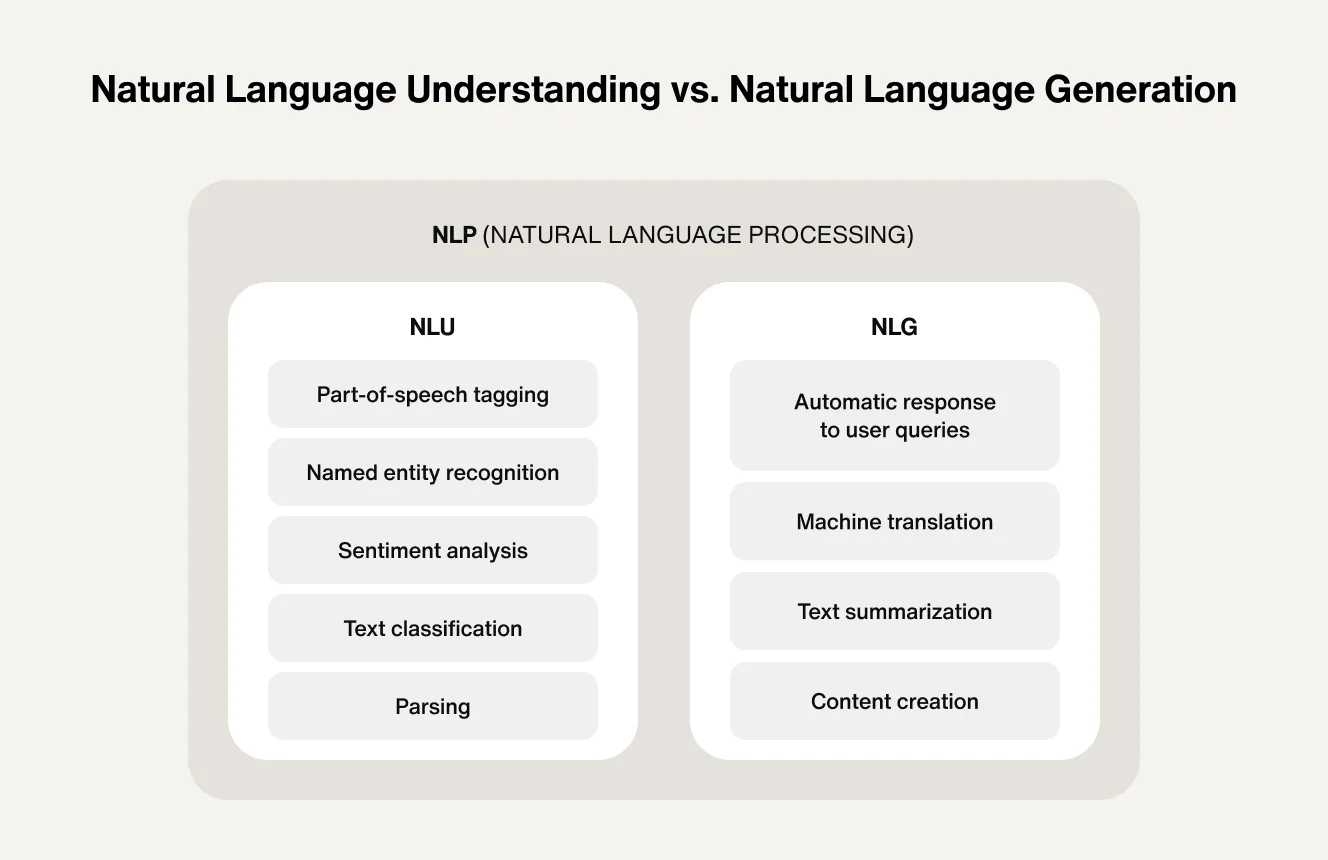

NLP includes two core components: Natural Language Understanding (NLU), which helps systems interpret meaning, intent, and context, and Natural Language Generation (NLG), which enables them to produce coherent, context-aware responses. By combining linguistics with statistical and deep learning models, NLP tackles the complexity of human language and enables critical applications across domains—from education to healthcare.

Why does NLP matter?

One of the most significant reasons NLP matters is its ability to unlock insights from unstructured data. Industry estimates suggest that around 80% of all business data is unstructured: emails, documents, social media posts, customer feedback, and more. This data contains a wealth of valuable information, but it is difficult to analyze with traditional data processing methods, which expect structured fields rather than free text.

NLP provides the tools and techniques to make sense of this unstructured text data. By applying NLP methods, organizations can automatically extract key information, identify patterns and trends, and gain a deeper understanding of their data. This enables data-driven decision-making, improved customer understanding, and the automation of many language-related tasks.


NLP fundamentals: How computers learn language

The language understanding challenge for computers

Human language is inherently complex and ambiguous, which makes it challenging for computers to understand. Words can have multiple meanings depending on the context they're used in, sentences can have implied meanings beyond the literal words, and there are countless ways to express the same idea.

For example, consider the sentence, "I saw the man with the telescope." This could mean that you used a telescope to see the man, or it could mean you saw a man who was holding a telescope. As humans, we use our understanding of context and the world to disambiguate the meaning, but this is a difficult task for computers.

Traditional computer programs are designed to work with structured, unambiguous data, following strict logical rules. Human language, on the other hand, is anything but structured and unambiguous. NLP aims to bridge this gap, enabling computers to work with human language's unstructured, ambiguous nature.

Syntactic (traditional NLP) vs. semantic (modern NLP) understanding

In the early days of NLP, a lot of focus was placed on syntactic analysis.

What is syntactic analysis?

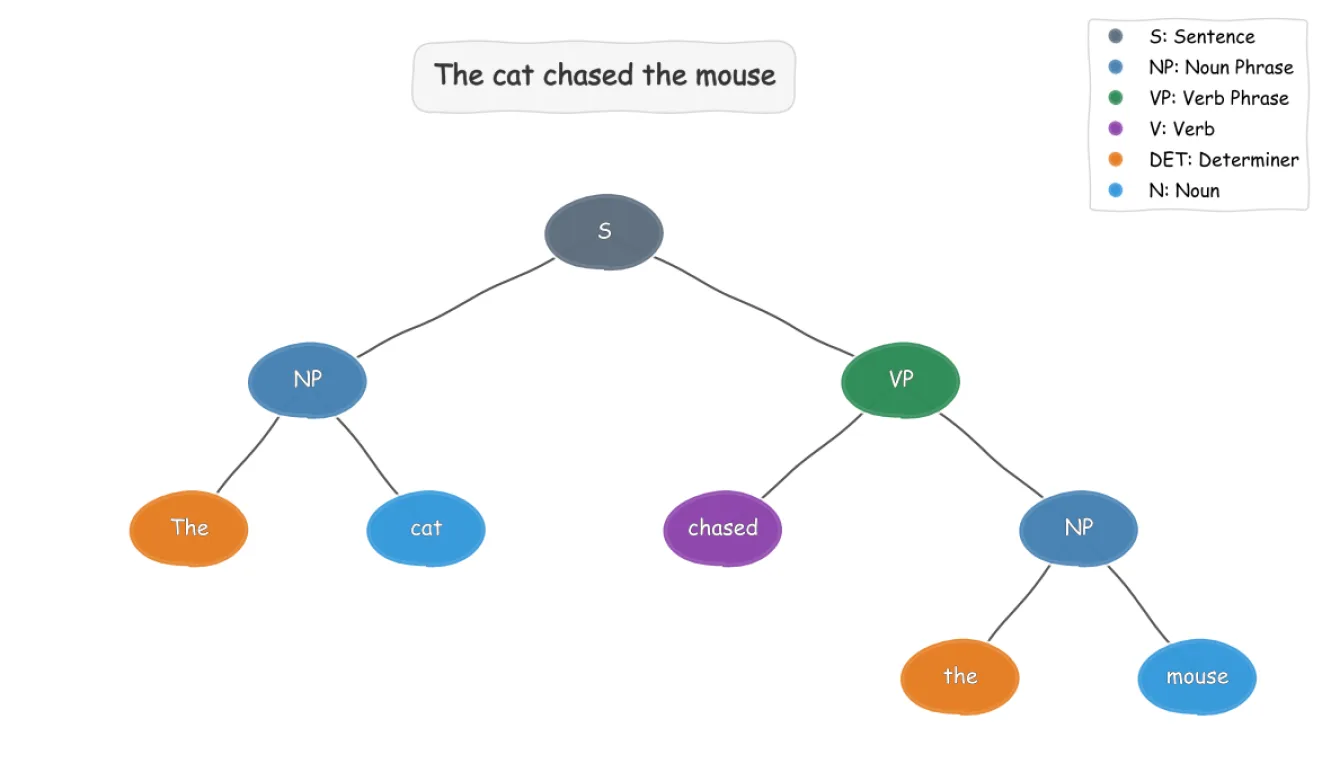

Syntactic analysis is the understanding of the grammatical structure and the word order of sentences. Techniques like part-of-speech tagging—identifying the grammatical role of each word—and parsing—analyzing the grammatical structure of a sentence—were used to try to make sense of the text.

However, while syntax is essential, it's only part of the picture. To truly understand language, NLP systems need to go beyond syntax and understand the meaning behind the words.

What is semantic understanding?

Semantic understanding involves identifying the conceptual relationships between words and phrases. It's about understanding the topic of a text, recognizing named entities (people, places, organizations), identifying sentiment and emotion, and grasping the intent behind a statement or question.

Modern NLP is dominated by models that prioritize semantic understanding over purely syntactic analysis. Techniques like word embeddings (representing words as dense vectors that capture semantic relationships) and sequence models (which take the order and context of words into account) have led to significant progress in semantic understanding.

Core NLP techniques and processes

Before diving into advanced applications, it is helpful to understand the fundamental building blocks that make NLP pipelines work. These core techniques and preprocessing steps form the foundation of virtually every NLP application, from simple text analysis to sophisticated AI agents.

Text preprocessing: How to prepare data for NLP analysis

Raw text data is rarely ready for immediate analysis. Text preprocessing transforms messy, inconsistent text into a format that algorithms can process reliably. This involves several steps:

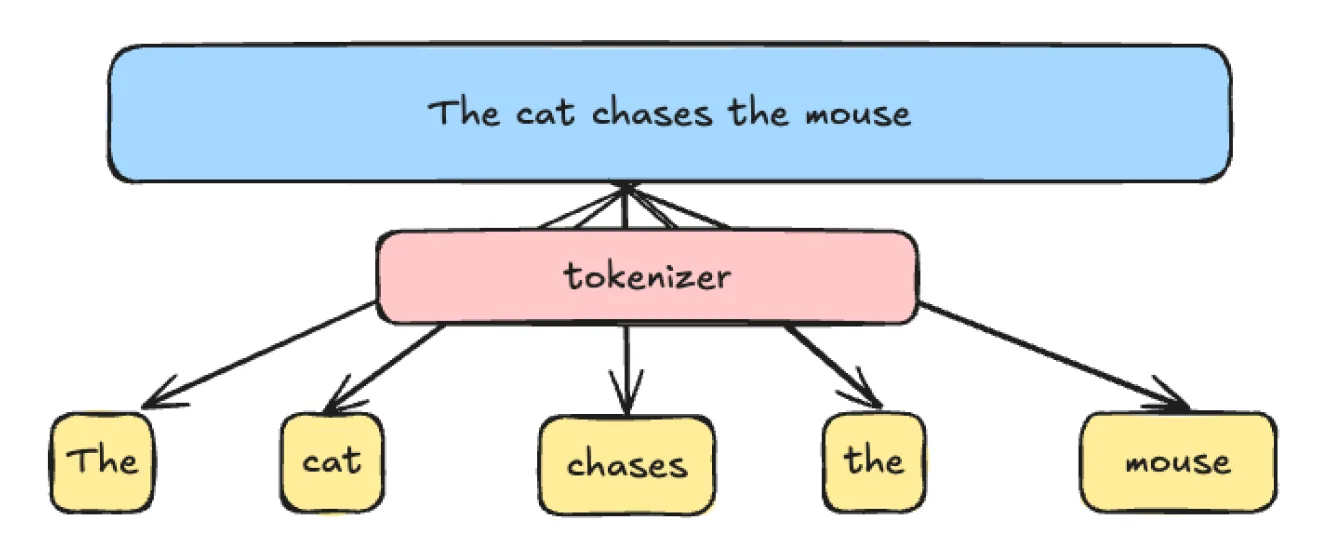

Tokenization

Tokenization is the process of breaking text into smaller units called tokens. Tokens can be individual words, phrases, or even whole sentences. In modern LLMs, tokens are often subword units (fragments of a few characters) rather than full words. Tokenization lets a system analyze the text piece by piece.
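As a rough illustration of word-level tokenization, here is a minimal sketch using only Python's standard library. The `tokenize` function and its regular expression are illustrative assumptions, not the tokenizer of any particular NLP library or LLM (production systems typically use trained subword tokenizers).

```python
import re

def tokenize(text: str) -> list[str]:
    """Split text into word tokens and standalone punctuation marks."""
    # \w+(?:'\w+)? keeps contractions such as "isn't" together;
    # [^\w\s] emits each punctuation character as its own token.
    return re.findall(r"\w+(?:'\w+)?|[^\w\s]", text)

print(tokenize("NLP isn't magic, it's math!"))
# ['NLP', "isn't", 'magic', ',', "it's", 'math', '!']
```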

Stopword removal

Stop words are common words that usually do not contribute much to the meaning of a sentence, such as "a", "an", "the", "is", etc. Removing these words can help focus on the critical content that contributes to semantic meaning.
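A minimal sketch of stop word filtering might look like the following. The `STOP_WORDS` set here is a tiny hand-picked sample chosen for illustration; real toolkits ship much longer, language-specific lists.

```python
# A small, hand-picked sample of English stop words (illustrative only).
STOP_WORDS = {"a", "an", "the", "is", "are", "of", "to", "and", "in", "on"}

def remove_stop_words(tokens: list[str]) -> list[str]:
    """Drop tokens whose lowercase form appears in the stop word set."""
    return [t for t in tokens if t.lower() not in STOP_WORDS]

print(remove_stop_words(["The", "cat", "is", "on", "the", "mat"]))
# ['cat', 'mat']
```

Whether to remove stop words depends on the task: for topic detection they are mostly noise, but for sentiment or question answering, words like "not" or "is" can matter.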

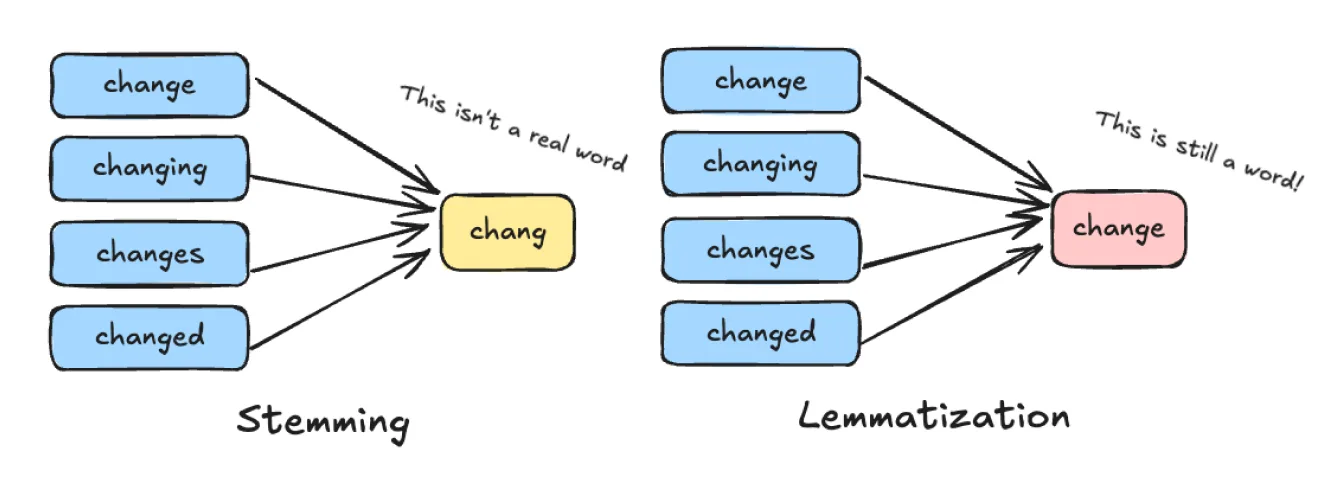

Stemming and lemmatization

Words often come in different forms (e.g., "run," "running," "ran"). Stemming and lemmatization are techniques for reducing these variants to a common base form.

Stemming: The process of reducing a word to its root form (often called a "stem"), usually by chopping off suffixes. It’s fast and rule-based but can be rough or imprecise.

Example: “running” → “run”, “universities” → “univers”

Lemmatization: The process of reducing a word to its base or dictionary form (called a "lemma"), using linguistic context like part of speech. It’s more accurate but slower than stemming.

Example: “better” → “good”, “running” → “run”
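The difference between the two can be sketched in a few lines. Both functions below are toy assumptions written for illustration: `stem` is a crude suffix stripper (far simpler than established algorithms such as the Porter stemmer, so its output for some words will differ from theirs), and `lemmatize` is a lookup in a tiny hand-written dictionary keyed by word and part of speech.

```python
def stem(word: str) -> str:
    """Crude rule-based stemmer: strip one common suffix, then undouble."""
    word = word.lower()
    for suffix in ("ing", "ies", "ed", "es", "s"):
        # Only strip when at least three characters would remain.
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            word = word[: -len(suffix)]
            break
    # Collapse a doubled final consonant left by stripping: "runn" -> "run".
    if len(word) >= 2 and word[-1] == word[-2] and word[-1] not in "aeiou":
        word = word[:-1]
    return word

# Hypothetical mini-dictionary: (word, part of speech) -> lemma.
LEMMAS = {
    ("better", "adj"): "good",
    ("running", "verb"): "run",
    ("ran", "verb"): "run",
}

def lemmatize(word: str, pos: str) -> str:
    """Look up the dictionary form; fall back to the lowercased word."""
    return LEMMAS.get((word.lower(), pos), word.lower())

print(stem("running"), stem("ran"))        # run ran
print(lemmatize("ran", "verb"))            # run
print(lemmatize("better", "adj"))          # good
```

The stemmer handles the regular form "running" but leaves the irregular "ran" untouched, while the dictionary-based lemmatizer maps both to "run". That is the accuracy-for-speed trade-off described above.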

Normalization

Text data can come in many forms: uppercase, lowercase, with or without punctuation, and so on. Normalization converts text into a standard format, making it consistent for further processing.
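One possible normalization routine, sketched with the standard library, is shown below. The specific steps (Unicode NFKC folding, lowercasing, replacing punctuation with spaces, collapsing whitespace) are assumptions about what a typical pipeline does; the right set depends on the task.

```python
import re
import unicodedata

def normalize(text: str) -> str:
    """Return a lowercased, punctuation-free, single-spaced version of text."""
    text = unicodedata.normalize("NFKC", text)  # unify compatibility characters
    text = text.lower()
    text = re.sub(r"[^\w\s]", " ", text)        # replace punctuation with spaces
    return re.sub(r"\s+", " ", text).strip()    # collapse runs of whitespace

print(normalize("  Hello,   WORLD!! It's 5pm.  "))
# hello world it s 5pm
```

Note that stripping punctuation splits "It's" into "it" and "s". If contractions matter for your task, tokenize before removing punctuation or keep apostrophes.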

Essential NLP techniques with examples

Once text data is properly preprocessed, we can apply specialized NLP techniques to extract meaning, identify patterns, and generate insights. These fundamental techniques demonstrate the practical power of NLP and offer a faster and more cost-effective solution to some tasks than using LLMs.

Linguistic analysis

Named entity recognition (NER)

NER is the linguistic analysis process of identifying and categorizing named entities in text into predefined categories such as person names, organizations, locations, time expressions, quantities, etc.

Example: In the sentence "Apple is looking at buying U.K. startup for $1 billion", NER would identify "Apple" as an organization, "U.K." as a location, and "$1 billion" as a monetary value.
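To make the idea concrete, here is a deliberately simple sketch that reproduces the example above with a lookup table and one regular expression. The `GAZETTEER` entries, the `MONEY` pattern, and the label names are all assumptions for illustration; production NER relies on trained statistical or neural models rather than fixed lists.

```python
import re

# Hypothetical lookup table of known names and their categories.
GAZETTEER = {"Apple": "ORGANIZATION", "U.K.": "LOCATION"}

# Dollar amounts with an optional magnitude word, e.g. "$1 billion".
MONEY = re.compile(r"\$\d+(?:\.\d+)?(?: (?:million|billion|trillion))?")

def find_entities(text: str) -> list[tuple[str, str]]:
    """Return (entity text, label) pairs in order of appearance."""
    hits = []
    for name, label in GAZETTEER.items():
        for m in re.finditer(re.escape(name), text):
            hits.append((m.start(), m.group(), label))
    for m in MONEY.finditer(text):
        hits.append((m.start(), m.group(), "MONEY"))
    return [(entity, label) for _, entity, label in sorted(hits)]

print(find_entities("Apple is looking at buying U.K. startup for $1 billion"))
# [('Apple', 'ORGANIZATION'), ('U.K.', 'LOCATION'), ('$1 billion', 'MONEY')]
```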

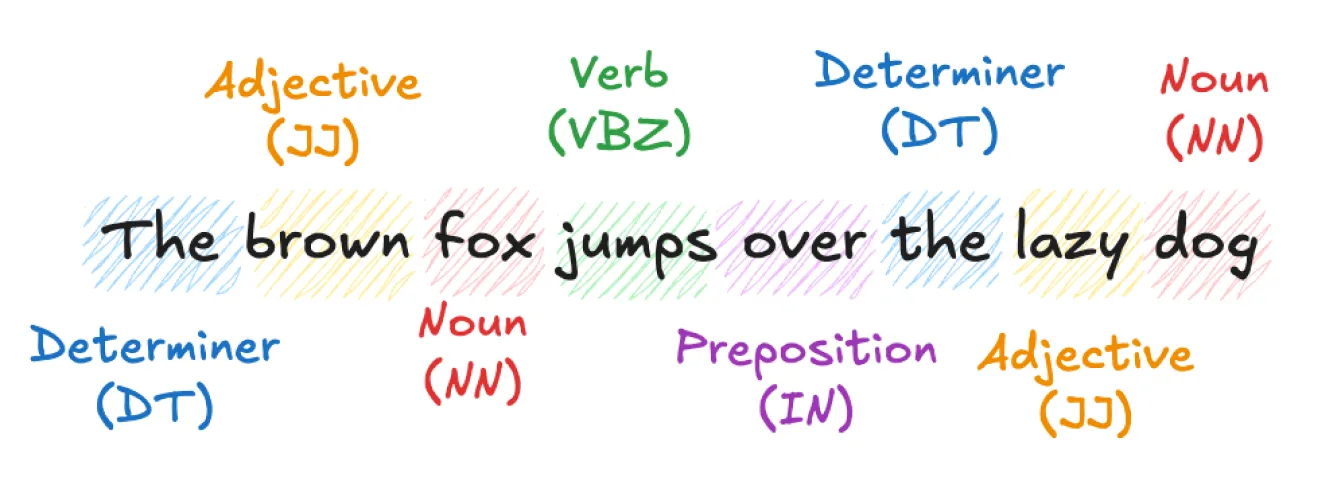

Part-of-speech (POS) tagging

POS tagging is the process of marking up a word in a text as corresponding to a particular part of speech based on its definition and context.

Example: In the sentence "The quick brown fox jumps over the lazy dog", POS tagging would identify "The" as a determiner, "quick" as an adjective, "fox" as a noun, "jumps" as a verb, and so on.

Sentiment analysis

Sentiment analysis is the process of determining whether a piece of writing is positive, negative, or neutral. It's often used to understand people's opinions in social media posts, reviews, survey responses, etc.

Example: For the review "The movie was great!" sentiment analysis would classify this as positive sentiment.
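A very basic way to approximate this is to count words from positive and negative word lists. The sketch below assumes two tiny hand-written lexicons (`POSITIVE`, `NEGATIVE`); it ignores negation, sarcasm, and intensity, which is why real systems use trained classifiers.

```python
import re

# Tiny illustrative lexicons, not a real sentiment resource.
POSITIVE = {"great", "good", "excellent", "love", "wonderful"}
NEGATIVE = {"bad", "terrible", "awful", "hate", "boring"}

def sentiment(text: str) -> str:
    """Classify text by comparing counts of positive and negative words."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(sentiment("The movie was great!"))  # positive
```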

Language translation

Machine translation is the task of automatically converting source text from one language to another language.

Example: Translating the English text "What rooms do you have available?" to its Spanish equivalent, "¿Qué habitaciones tiene disponibles?" is an example of language translation.

Language generation

Text summarization

Text summarization is the task of creating a short, accurate, and fluent summary of a longer text document.

Example: A news article could be summarized from several paragraphs to a short synopsis capturing the main points.

Question answering

Question answering is the task of automatically providing an answer to a question posed in natural language.

Example: If the question is "What is the capital of France?", the question answering system should respond with "Paris".


NLP evolution: From rules to statistical AI

The development of NLP has followed a fascinating trajectory, with each era building upon the limitations and lessons of the previous approach. Knowing this evolution explains why modern AI systems work the way they do and provides valuable context for choosing the right NLP approach for your specific needs.

Rule-based NLP systems

The earliest NLP systems relied heavily on handcrafted linguistic rules. These systems were built by linguists and computer scientists who manually encoded grammatical rules, dictionaries, and language patterns into computer programs.

For example, a rule-based system for part-of-speech tagging might include rules like "if a word ends in '-ing' and follows a verb, it's likely a gerund" or "words that appear after 'the' are often nouns." These systems required extensive domain expertise and careful rule crafting.
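The two rules quoted above can be turned into a small tagger. This is a sketch under stated assumptions: the `LEXICON` of known words and the tag names are invented for the example, and a real rule-based tagger would need hundreds of such rules.

```python
# Hypothetical mini-lexicon of words with known tags.
LEXICON = {"the": "DET", "a": "DET", "she": "PRON", "enjoys": "VERB", "likes": "VERB"}

def tag(tokens: list[str]) -> list[tuple[str, str]]:
    """Tag tokens using a lexicon plus two handcrafted context rules."""
    tags: list[str] = []
    for token in tokens:
        word = token.lower()
        prev_tag = tags[-1] if tags else None
        if word in LEXICON:
            label = LEXICON[word]
        elif word.endswith("ing") and prev_tag == "VERB":
            label = "GERUND"   # rule: "-ing" word following a verb
        elif prev_tag == "DET":
            label = "NOUN"     # rule: word following a determiner
        else:
            label = "UNK"      # no rule applies
        tags.append(label)
    return list(zip(tokens, tags))

print(tag("She enjoys running the marathon".split()))
# [('She', 'PRON'), ('enjoys', 'VERB'), ('running', 'GERUND'), ('the', 'DET'), ('marathon', 'NOUN')]
```

Any word outside the lexicon that matches neither rule falls through to `UNK`, which illustrates how quickly such systems run into coverage gaps.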

Strengths and limitations of rule-based NLP systems

| Strengths | Limitations |
| --- | --- |
| Predictable and interpretable results | Extremely labor-intensive to build and maintain |
| High precision for well-defined tasks | Inflexible when encountering new language patterns |
| No training data required | Difficulty handling ambiguity and exceptions |
| Easy to understand and debug | Poor scalability across different domains or languages |

Table of the pros and cons of a rule-based NLP system.

Statistical and machine learning AI methods

The 1990s and 2000s saw a shift toward data-driven approaches. Instead of manually crafting rules, researchers began using statistical methods to learn patterns from extensive collections of text data, and machine learning methods to numerically capture semantic information from text.

The introduction of statistical AI models: The N-gram model

What are N-gram models?

N-gram models are one of the pioneering statistical techniques. They predict the next word in a sequence based on the previous n-1 words, so a bigram model (n = 2) looks at one preceding word and a trigram model (n = 3) at two. Unlike modern LLMs, they operate more like a lookup over past data: an n-gram model answers the question, “What word has most often followed these words?” They became popular for tasks like language modeling and machine translation.

What are the N-gram model limitations?

While effective for some tasks, n-gram models had significant limitations: they couldn't capture long-range dependencies, and they treated words as discrete symbols without understanding semantic relationships. The move to statistical methods solved some problems associated with rule-based approaches. Still, it led to the next question: how can computers represent words mathematically to capture semantic meaning rather than relying on raw co-occurrence counts?

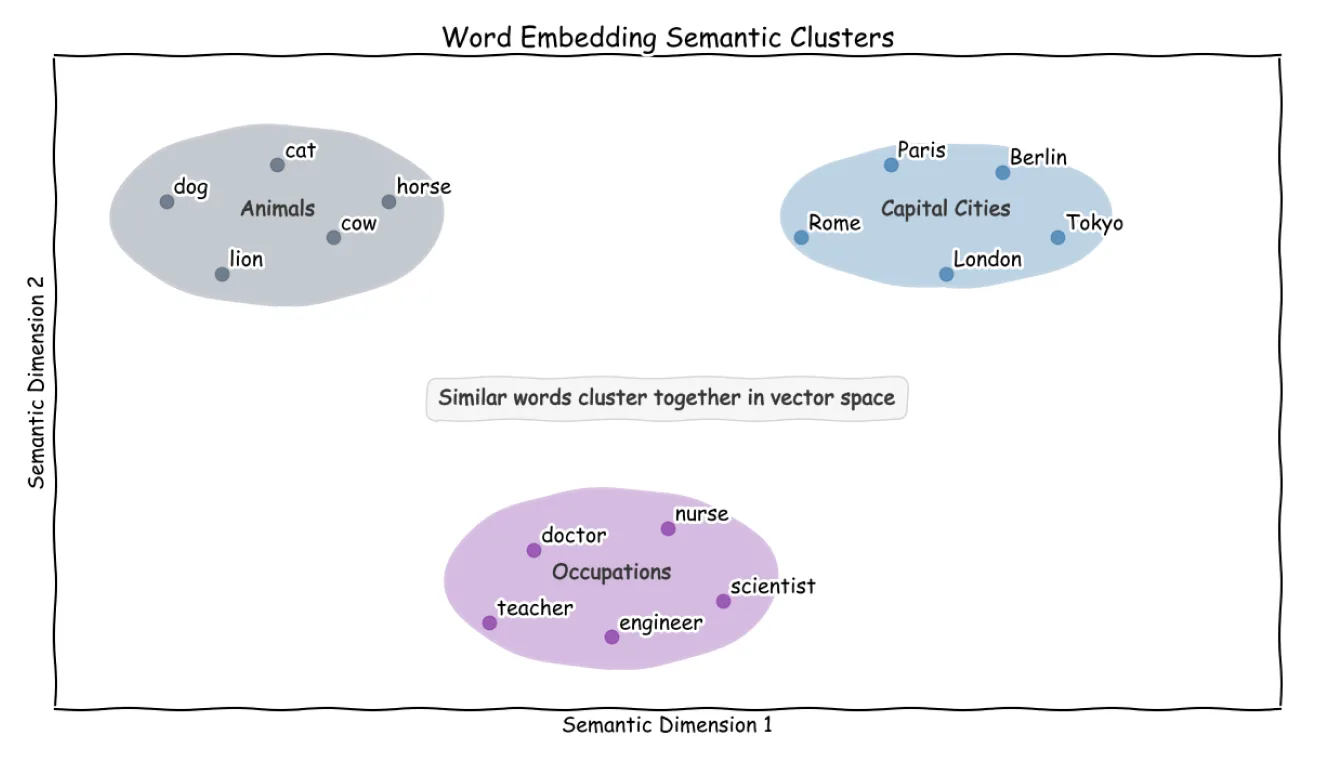

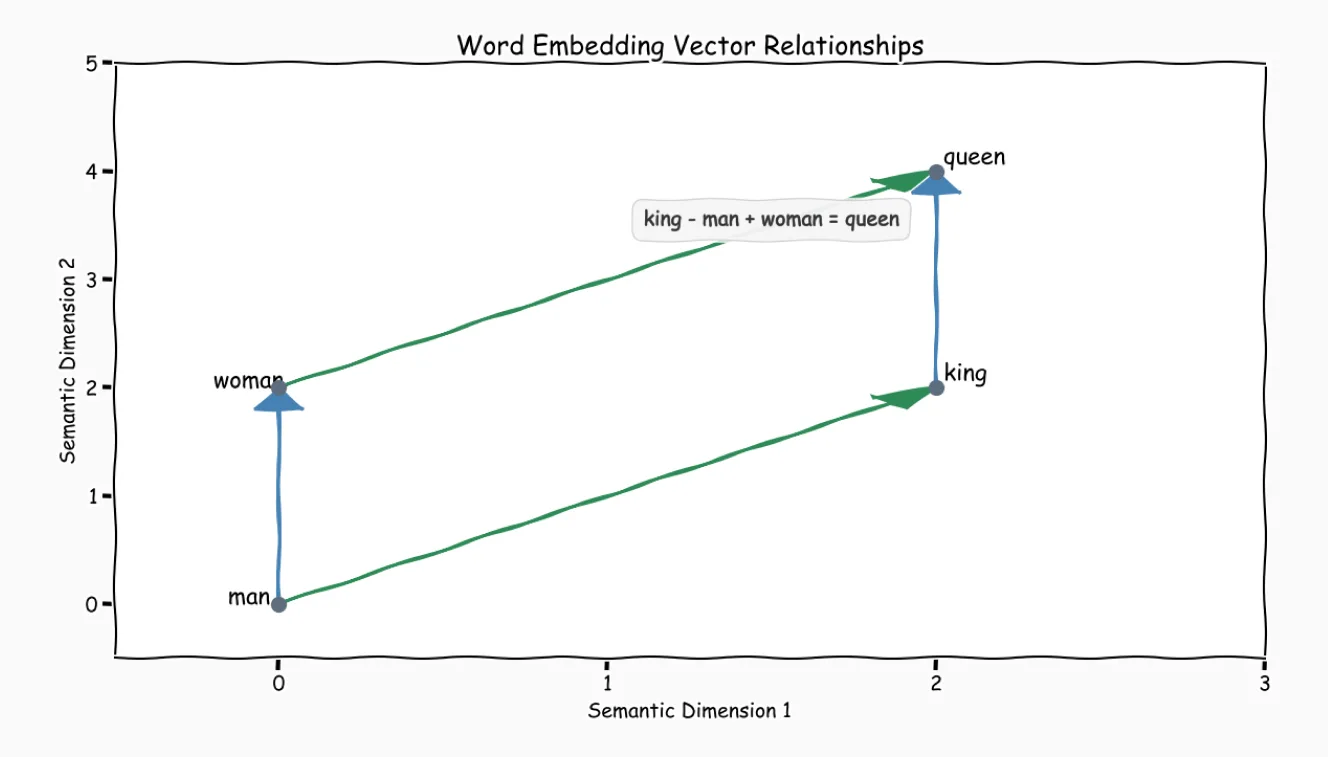

Capturing semantic meaning with word embeddings

What are word embeddings?

Word embeddings are a method for representing words in NLP where each word is mapped to a dense vector in a continuous vector space. This means that instead of treating words as just text, we turn each word into a set of numbers that a computer can understand. For example:

The word "king" might become: [0.27, 0.13, -0.44, ...]

The word "queen" might become: [0.26, 0.12, -0.43, ...]

This is called a dense vector — a compact list of numbers, usually 50–300 values. Similar words have similar numbers. Words that mean similar things — like “dog” and “puppy”, or “angry” and “mad” — end up with vectors (number lists) that are close together in a kind of "map" or space.

So the computer starts to understand that "walk" is closer to "run" than it is to "banana."

The ability to capture semantic information in the language computers speak (numbers) marked a significant breakthrough in NLP, leading to the modern techniques powering LLMs today.

A pioneering breakthrough in word embeddings came with Word2Vec, an algorithm developed by Tomas Mikolov and his team at Google. Based on the distributional hypothesis—summed up by linguist J.R. Firth as "You shall know a word by the company it keeps"—Word2Vec trained models to understand the context of words by analyzing surrounding words. This allowed NLP systems to move beyond simple one-hot encoding and handle language in a more meaningful, scalable way.

To see the mathematical idea behind word embeddings, consider vector representations of the words man, king, woman, and queen.

The image above illustrates how word embeddings can capture semantic relationships through mathematical vector operations.

The classic example: vector("king") - vector("man") + vector("woman") ≈ vector("queen").

This showed that the models were learning meaningful representations of language, like the concept of gender or royalty, not just statistical patterns.
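The arithmetic can be reproduced with toy vectors. Everything in `VECTORS` below is hand-made for illustration (three dimensions loosely meaning royalty, gender, and "fruitiness"); learned embeddings such as Word2Vec have hundreds of dimensions with no such clean interpretation, and the analogy only holds approximately.

```python
import math

# Hand-made toy vectors: [royalty, gender, fruitiness]. Not learned embeddings.
VECTORS = {
    "king":   [0.9,  0.7, 0.0],
    "queen":  [0.9, -0.7, 0.0],
    "man":    [0.1,  0.7, 0.0],
    "woman":  [0.1, -0.7, 0.0],
    "banana": [0.0,  0.0, 1.0],
}

def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def analogy(a: str, b: str, c: str) -> str:
    """Return the word closest to vector(a) - vector(b) + vector(c)."""
    target = [x - y + z for x, y, z in zip(VECTORS[a], VECTORS[b], VECTORS[c])]
    candidates = [w for w in VECTORS if w not in {a, b, c}]
    return max(candidates, key=lambda w: cosine(VECTORS[w], target))

print(analogy("king", "man", "woman"))  # queen
```

Excluding the three input words from the candidates is a common convention for this kind of analogy test; without it, the nearest vector is often one of the inputs.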

The deep learning revolution

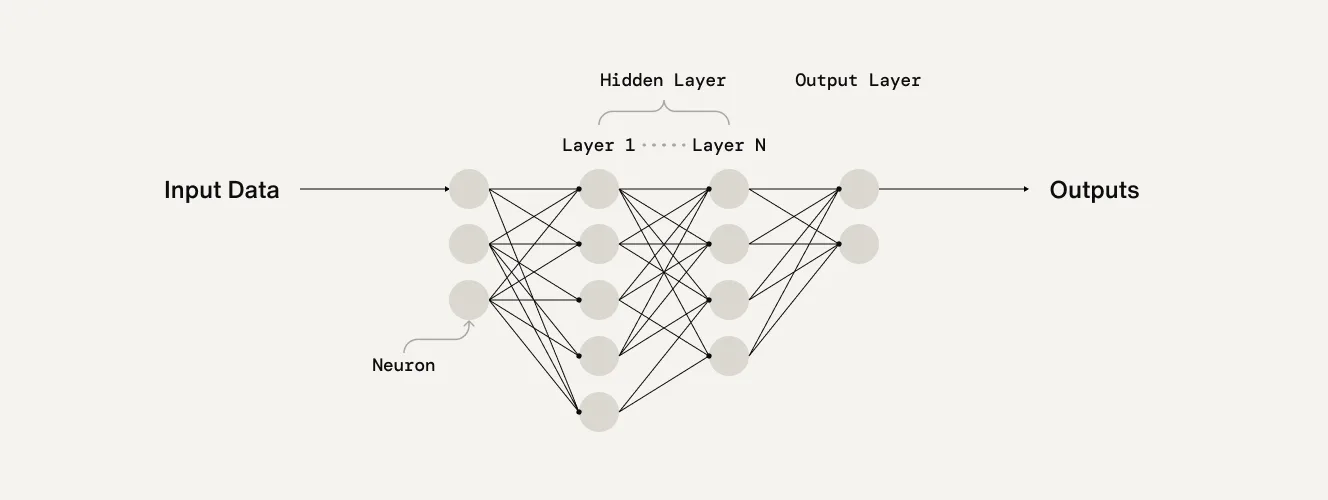

The introduction of deep learning to NLP marked another significant leap forward. Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks enabled models to process sequences of text while maintaining the memory of previous words, addressing some limitations of earlier approaches.

The real breakthrough that unlocked the LLMs we know today came with the introduction of attention mechanisms. Traditional sequence models processed text one word after another, but the attention mechanism allowed models to focus on relevant parts of the input across the entire text.

Attention mechanisms represented significant progress toward solving the problem of long-range dependencies and enabled models to understand the context more effectively. Instead of compressing all information into a fixed-size representation, attention allowed models to dynamically access relevant information from the entire input sequence. This is the “AI” technology underpinning the conversational AI and AI agents that have risen to prominence in recent years.
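One widely used form of attention is scaled dot-product attention, where each query is compared against every key and the resulting weights blend the values. The following is a minimal pure-Python sketch for small lists of floats; it omits learned projection matrices, multiple heads, and masking, and the function names are assumptions for this example.

```python
import math

def softmax(xs: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        blended = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(blended)
    return outputs

keys = [[10.0, 0.0], [0.0, 10.0]]
values = [[1.0, 0.0], [0.0, 1.0]]
out = attention([[10.0, 0.0]], keys, values)
print([round(x, 3) for x in out[0]])  # [1.0, 0.0]
```

Because the query aligns with the first key, nearly all of the weight goes to the first value. Every position can be reached this way regardless of distance, which is how attention helps with long-range dependencies.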

Large language models (LLMs) and the modern era

Google's 2017 development of the Transformer architecture revolutionized NLP by making attention the primary mechanism for processing sequences. This led to the development of increasingly powerful language models like BERT, GPT, and their successors.

How did LLMs change NLP?

Large Language Models (LLMs) changed the NLP landscape in several fundamental ways:

Scale: Modern LLMs are trained on massive datasets containing billions or trillions of words, enabling them to learn incredibly rich representations of language.

Transfer learning: Pre-trained LLMs can be fine-tuned for specific tasks with relatively small amounts of task-specific data, making advanced NLP capabilities accessible for specialized applications.

Generative capabilities: Unlike earlier models that were primarily designed for understanding tasks, LLMs excel at both understanding and generating human-like text.

Few-shot learning: Modern LLMs can perform new tasks with just a few examples or even just a description of the task without requiring extensive retraining.

Conversational AI: LLMs enabled the development of sophisticated chatbots and virtual assistants that can engage in natural, contextual conversations.

This evolution represents a fundamental shift from systems that follow explicit rules to systems that learn implicit patterns from data and, finally, to systems that generalize and adapt to new situations with minimal additional training.

The progression from syntactic focus to semantic understanding has enabled NLP to move from narrow, task-specific applications to general-purpose language understanding and generative systems.

The importance of context for LLMs in NLP

Context is the key to effectively leveraging modern LLMs. While early NLP systems focused on individual words or simple patterns, modern LLM systems excel because they understand how meaning changes based on surrounding information, domain knowledge, and conversational history. Prompts are the primary way to supply that context, especially for generative tasks.

What makes a good LLM prompt?

What is prompt engineering?

Prompt engineering is the practice of crafting effective inputs to guide language models toward desired outputs. It combines the art of clear communication with the science of understanding how models process and respond to different types of contexts.

3 principles of effective prompt engineering

Clarity and specificity

Vague LLM prompts often lead to vague and misaligned responses. Instead of asking,

"Tell me about sales."

A well-engineered AI prompt might specify:

"Analyze Q3 sales performance for our enterprise software division, focusing on conversion rates and deal size trends."

Context layering

Providing relevant background information helps models understand the specific domain or situation. This might include company-specific terminology, industry context, or the intended audience for the output.

Output formatting

Specifying the desired format, length, and structure helps ensure the response meets your needs.

For example: "Provide a 3-paragraph executive summary with specific metrics and actionable recommendations."
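The three principles above can be combined in a small prompt-building helper. This is only a sketch: `build_prompt`, its section labels, and the sample context lines are assumptions for illustration, not a format that any particular model requires.

```python
def build_prompt(task: str, context: list[str], output_format: str) -> str:
    """Assemble a prompt from a specific task, background context, and format."""
    sections = [f"Task: {task}"]
    if context:
        # Context layering: one bullet per background fact.
        sections.append("Context:\n" + "\n".join(f"- {c}" for c in context))
    sections.append(f"Output format: {output_format}")
    return "\n\n".join(sections)

prompt = build_prompt(
    task=(
        "Analyze Q3 sales performance for our enterprise software division, "
        "focusing on conversion rates and deal size trends."
    ),
    context=[
        "Audience: executive leadership",  # hypothetical background facts
        "Fiscal Q3 runs July through September",
    ],
    output_format=(
        "A 3-paragraph executive summary with specific metrics "
        "and actionable recommendations."
    ),
)
print(prompt)
```

Keeping the three parts as separate arguments makes it easier to change one element at a time when refining a prompt.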

What are common pitfalls in prompt engineering?

Many organizations struggle with prompt engineering because they approach it like traditional search queries rather than conversations with an intelligent system.

Assuming implicit knowledge: AI models don't automatically know your company's internal processes, product names, or industry-specific meanings of common terms. What seems obvious to you may be ambiguous to the model.

Overloading information: While context is important, providing too much irrelevant information can dilute the AI model's focus and lead to less accurate responses.

Inconsistent terminology: Using different terms for the same concept within a single prompt or across related prompts can confuse models and reduce consistency in outputs.

Neglecting iterative refinement: Effective prompts are rarely perfect on the first attempt. The best results come from testing, analyzing outputs, and refining the approach based on what works.

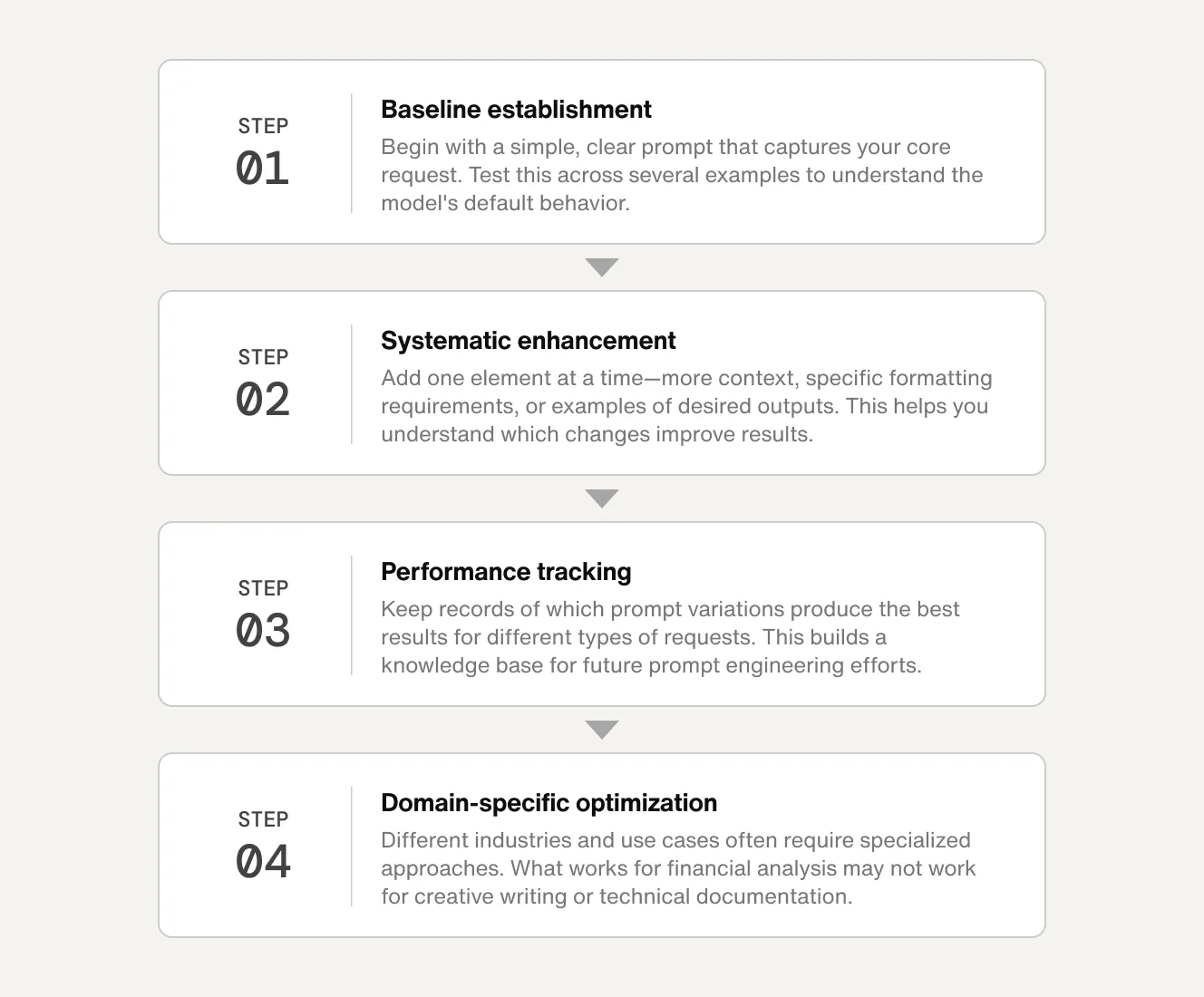

Implementing iterative prompt refinement

Iterative refinement is a systematic approach to improving AI prompt effectiveness over time. Start with a basic prompt and gradually enhance it based on the quality and relevance of the responses you receive.

Baseline establishment: Begin with a simple, clear prompt that captures your core request. Test this across several examples to understand the model's default behavior.

Systematic enhancement: Add one element at a time—more context, specific formatting requirements, or examples of desired outputs. This helps you understand which changes improve results.

Performance tracking: Keep records of which prompt variations produce the best results for different types of requests. This builds a knowledge base for future prompt engineering efforts.

Domain-specific optimization: Different industries and use cases often require specialized approaches. What works for financial analysis may not work for creative writing or technical documentation.
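Performance tracking can start as something very small. The sketch below assumes you already have some way to score a response (for example, a human rating between 0 and 1); the `PromptTracker` class and the variant names are hypothetical.

```python
from collections import defaultdict
from statistics import mean

class PromptTracker:
    """Record quality scores per prompt variant and report the best one."""

    def __init__(self) -> None:
        self._scores: dict[str, list[float]] = defaultdict(list)

    def record(self, variant: str, score: float) -> None:
        """Store one score (e.g. a 0-1 human rating) for a variant."""
        self._scores[variant].append(score)

    def summary(self) -> dict[str, float]:
        """Mean score for each variant seen so far."""
        return {variant: mean(scores) for variant, scores in self._scores.items()}

    def best(self) -> str:
        """Variant with the highest mean score (requires at least one record)."""
        averages = self.summary()
        return max(averages, key=averages.get)

tracker = PromptTracker()
tracker.record("baseline", 0.6)
tracker.record("baseline", 0.5)
tracker.record("baseline+format", 0.8)
tracker.record("baseline+format", 0.9)
print(tracker.best())  # baseline+format
```

Naming each variant after the single element that was added mirrors the one-change-at-a-time approach described above.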

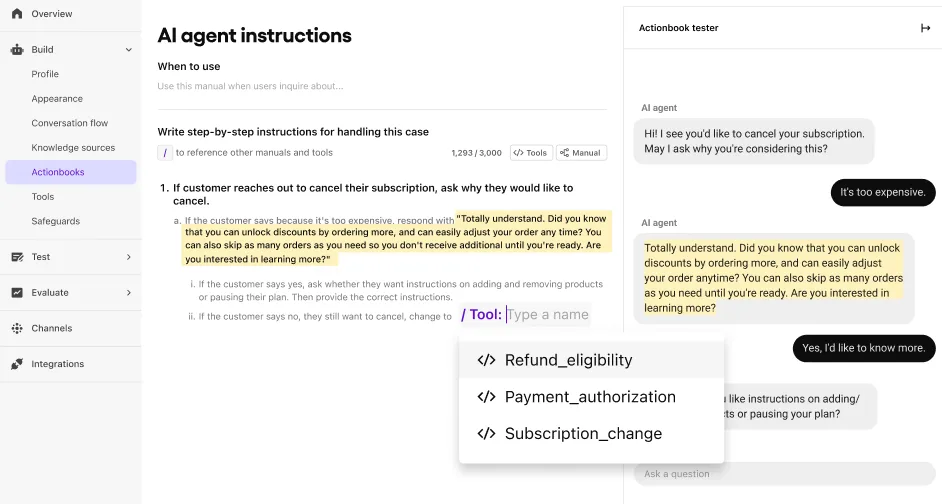

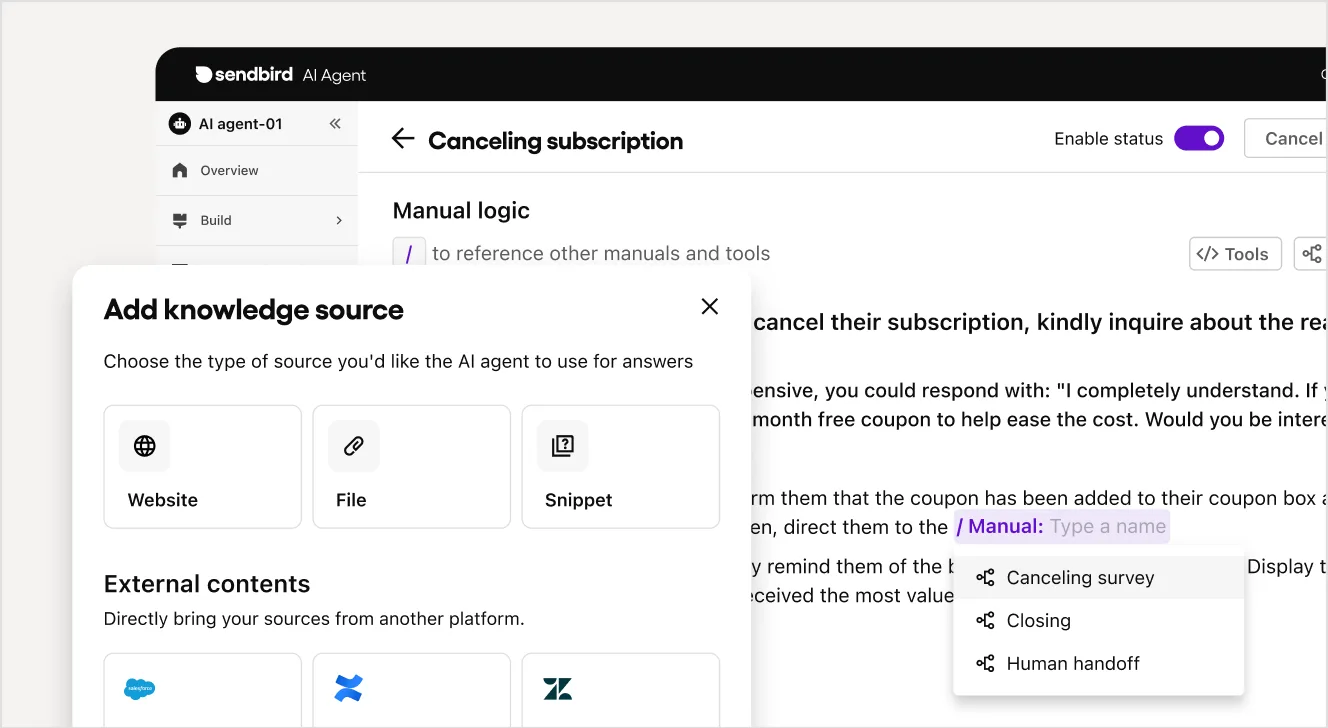

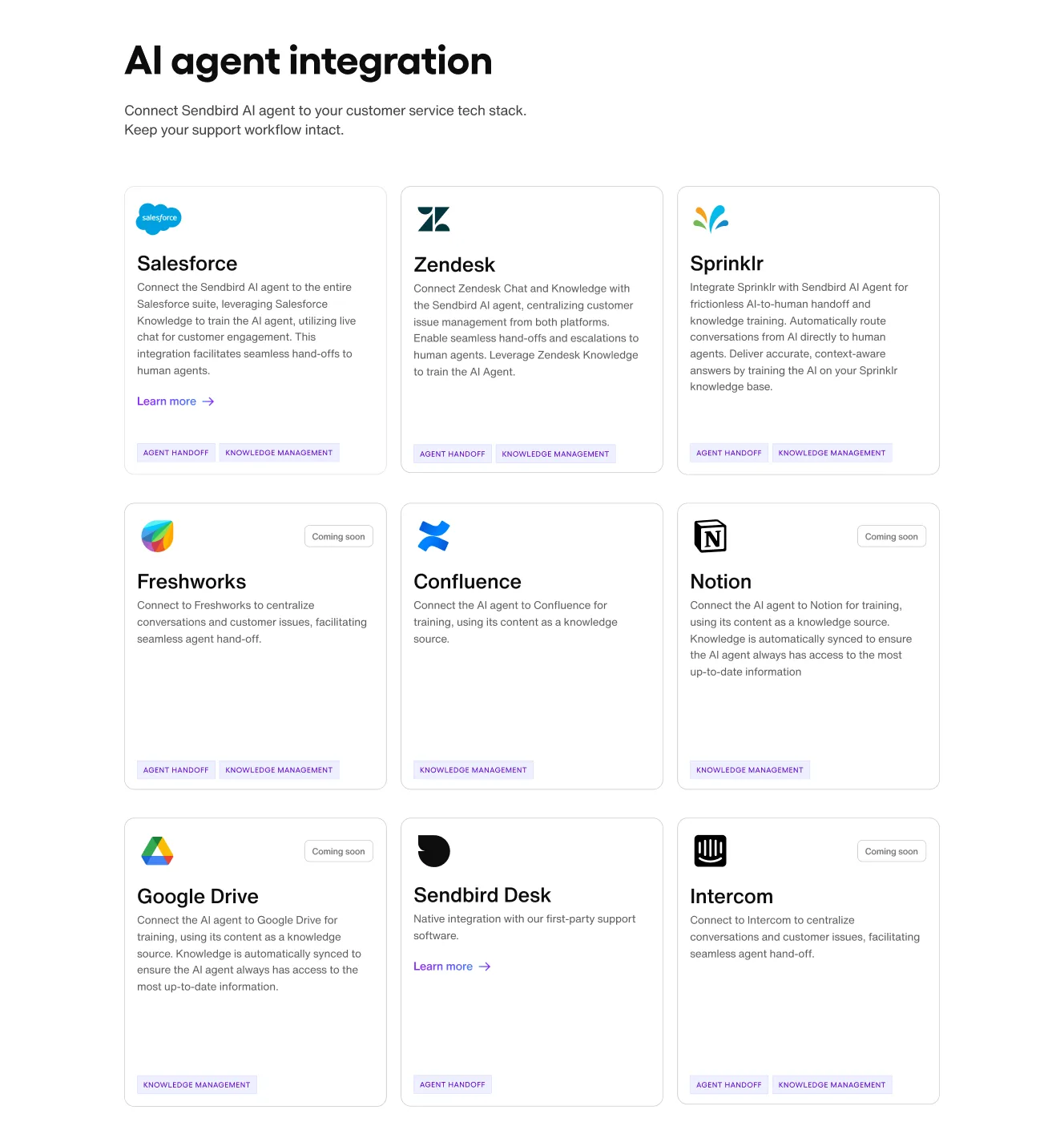

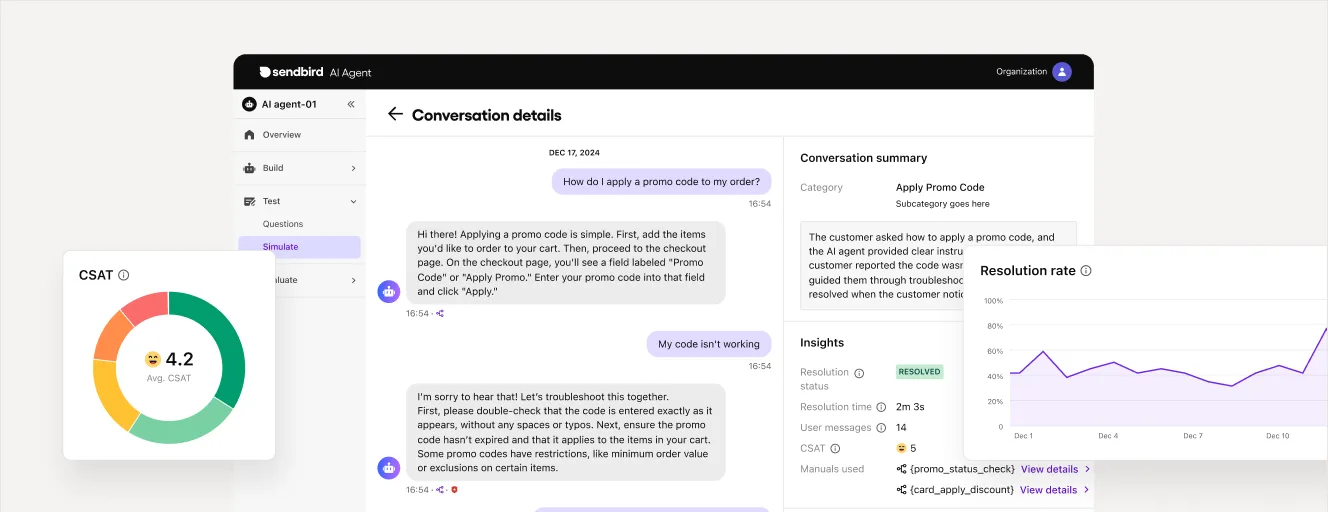

High-grade AI agent platforms offer a no-code AI agent builder, which allows prompt versioning and performance tracking, as well as continuous improvement of prompts and AI agent behavior. No-code tools aren’t the end of the story, however. Learn how Sendbird ensures AI customer service agents perform for businesses.

How does Named Entity Recognition (NER) enhance context engineering?

Named Entity Recognition (NER) can play a crucial role in context engineering by identifying terms and concepts that may need additional explanation or context when working with language models.

Identifying context gaps: NER can automatically scan your text inputs to identify company names, product terms, technical jargon, and other entities that might be unfamiliar to a general-purpose language model.

Building context dictionaries: Once you've identified key entities in your domain, you can create context dictionaries that provide definitions, explanations, or additional context for these terms. This ensures consistent understanding across different interactions.

Automated context injection: Advanced implementations can automatically detect when domain-specific entities appear in prompts and inject relevant context from your dictionaries, improving accuracy without manual intervention.
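A minimal sketch of a context dictionary with automated injection follows. The dictionary entries ("Orion", "CSAT") are invented examples, and simple whole-word matching stands in for a real NER model, which would also catch variants and unseen entities.

```python
import re

# Hypothetical context dictionary: term -> explanation for the model.
CONTEXT_DICTIONARY = {
    "Orion": "Orion is our internal billing platform.",
    "CSAT": "CSAT is the customer satisfaction score from post-chat surveys.",
}

def inject_context(prompt: str) -> str:
    """Prepend definitions for any known domain terms found in the prompt."""
    found = [
        term
        for term in CONTEXT_DICTIONARY
        if re.search(rf"\b{re.escape(term)}\b", prompt)
    ]
    if not found:
        return prompt  # nothing to add
    glossary = "\n".join(f"- {CONTEXT_DICTIONARY[term]}" for term in found)
    return f"Background:\n{glossary}\n\nRequest: {prompt}"

print(inject_context("Why did CSAT drop after the Orion migration?"))
```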

Why is domain-specific context crucial for business applications?

Generic language models are trained on broad datasets that may not capture the specific nuances of your industry, company, or use case. Domain-specific context bridges this gap.

Industry terminology: Every industry has a specialized vocabulary. "Conversion" means something different in marketing, chemistry, and religious contexts. Providing clear definitions ensures the model interprets terms correctly.

Company-specific knowledge: Your business is unique in terms of internal product names, acronyms, process terminology, and organizational structure. Models need this context to provide relevant, actionable insights.

Regulatory and compliance considerations: Many industries have specific regulatory requirements that affect how information should be processed and presented. Context engineering helps ensure outputs meet these requirements.

Cultural and regional variations: Language use varies significantly across regions and cultures. Context engineering helps models understand and respond appropriately to these variations. Sendbird helps companies create localized AI agents that provide culturally aware AI customer service.

The investment in proper context engineering pays dividends in improved accuracy, relevance, and reliability of NLP applications. Organizations that master context engineering can unlock significantly more value from their language model implementations, turning generic AI capabilities into powerful, domain-specific tools that understand and respond to their unique needs.


Real-world applications across industries

NLP has moved beyond academic research to become a critical technology driving innovation across virtually every industry. The ability to automatically understand, analyze, and generate human language at scale has opened up new possibilities for automation, insight generation, and customer engagement.

Customer service and support transformation

AI customer service represents one of the most visible applications of NLP technology, fundamentally changing how organizations interact with their customers.

Intelligent AI agents (formerly chatbots) and virtual assistants

Modern NLP-powered assistants have evolved far beyond simple rule-based systems. They can understand complex customer queries, maintain context across multi-turn conversations, and provide personalized responses. These systems handle routine inquiries 24/7, escalating only complex issues to human agents.

Automated ticket routing and prioritization

NLP analyzes incoming support requests, automatically categorizing them by urgency, topic, and required expertise. This ensures critical issues reach the right specialists quickly while routine requests are handled efficiently.

Real-time sentiment monitoring

Advanced systems analyze customer interactions in real-time, identifying frustrated customers who may need immediate attention or flagging conversations that require supervisor intervention. This proactive AI agent approach helps prevent negative experiences from escalating.

Healthcare and life sciences applications

Healthcare generates enormous amounts of unstructured text data, from clinical notes to research papers, making it a natural fit for NLP applications.

Clinical documentation and analysis

NLP helps healthcare providers extract insights from patient records, identify potential drug interactions, and flag important symptoms or conditions that might otherwise be overlooked in lengthy clinical notes.

Medical research acceleration

Advanced NLP systems analyze vast databases of research papers, clinical trials, and patient data to identify patterns, potential treatments, and research opportunities. This significantly speeds up the discovery process for new treatments and therapies.

Patient communication and education

AI in healthcare leverages NLP to create personalized health information, translate complex medical terminology into patient-friendly language, and provide automated responses to common health questions.

Finance and legal sector innovations

Financial services and legal firms are using NLP to process vast amounts of documentation and extract actionable insights.

Document review and contract analysis

NLP automates the review of legal documents, contracts, and regulatory filings, identifying key clauses, potential risks, and compliance issues that would take human reviewers significantly longer to process.

Regulatory compliance monitoring

Financial institutions use NLP to monitor communications, transactions, and market data for compliance violations, automatically flagging suspicious activities or potential regulatory breaches.

Risk assessment through text analysis

Advanced systems analyze news articles, social media, earnings calls, and other text sources to assess market sentiment and identify potential risks to investments or business operations.

Marketing and sales optimization

Marketing and sales teams are using NLP to better understand customers, personalize experiences, and optimize their messaging across channels.

Social media monitoring and brand analysis

NLP tracks mentions of brands, products, and competitors across social platforms, news sites, and review platforms, providing real-time insights into brand perception, emerging issues, and market opportunities.

Content personalization and generation

Modern systems create tailored marketing messages, product descriptions, and customer service emails based on customer preferences, behavior patterns, and demographic information. This level of personalization was previously impossible at scale.

Lead qualification and sales insights

NLP helps qualify leads by analyzing customer communications, website interactions, and engagement patterns. It also provides sales teams with insights about prospect interests and pain points.

Competitive intelligence gathering

Organizations monitor competitor communications, product announcements, and customer feedback to identify market trends, competitive threats, and positioning opportunities.

Education and training enhancement

Educational institutions and corporate training programs are leveraging NLP to create more personalized and effective learning experiences.

Personalized learning experiences

NLP systems adapt content difficulty, pacing, and style based on individual student performance and learning patterns. These AI systems analyze student responses to identify knowledge gaps and recommend targeted interventions.

Automated assessment and feedback

Advanced NLP provides immediate, detailed feedback on written assignments, helping students improve their writing while reducing the grading burden on instructors. These systems evaluate not just grammar and spelling, but also argument structure, evidence quality, and writing clarity.

Language learning applications

NLP powers conversational practice tools, pronunciation feedback systems, and personalized vocabulary-building applications. Advanced systems simulate real-world conversations and adapt to learners' proficiency levels.

Educational content creation

NLP helps instructors generate quiz questions, study guides, and supplementary materials from existing course content, making it easier to create comprehensive learning resources.

NLP agents and autonomous language systems

The evolution of NLP has reached a point where systems can do more than understand and generate text—they can act to complete complex workflows autonomously. This represents a fundamental shift from reactive language processing to proactive AI actions.

Beyond simple chatbots: What makes an NLP agent?

Traditional chatbots follow predetermined conversation flows and provide scripted responses. NLP agents, by contrast, can plan, reason, and make decisions about how to accomplish goals using natural language as both input and output.

Planning and reasoning capabilities

An NLP agent can break down complex requests into manageable steps, determine the sequence of actions needed, and adapt its approach based on intermediate results. For example, when asked to "prepare a quarterly business review," an agent might identify the need to gather financial data, analyze performance metrics, create visualizations, and format a presentation.

Tool integration and external system access

Rather than being limited to text generation, AI agents can query databases, send emails, schedule meetings, or update records in business systems. Tool integration lets agents interact with external systems and databases via APIs to gather information and execute actions.
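At its simplest, tool integration is a registry that maps tool names to functions, plus a dispatcher that executes whatever call the model requests. In the sketch below, both tools are stubs with made-up return values, and the `{"tool": ..., "arguments": ...}` call shape is an assumption for this example rather than any vendor's format.

```python
def lookup_order(order_id: str) -> dict:
    """Stub for a database query; a real tool would call an internal API."""
    return {"order_id": order_id, "status": "shipped"}

def send_email(to: str, subject: str) -> str:
    """Stub for an email integration."""
    return f"queued email to {to}: {subject}"

# Registry of tools the agent is allowed to use.
TOOLS = {"lookup_order": lookup_order, "send_email": send_email}

def run_tool_call(call: dict):
    """Execute a tool call of the form {"tool": name, "arguments": {...}}."""
    name = call["tool"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))

# In practice the language model would produce this structure.
result = run_tool_call({"tool": "lookup_order", "arguments": {"order_id": "A-1001"}})
print(result)  # {'order_id': 'A-1001', 'status': 'shipped'}
```

Rejecting names that are not in the registry keeps the agent from invoking anything it was not explicitly given.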

Multi-step conversation management

LLM agents can maintain context across extended interactions, ask clarifying questions when needed, and remember previous decisions throughout a complex task. This enables more natural, collaborative problem-solving between humans and AI systems.

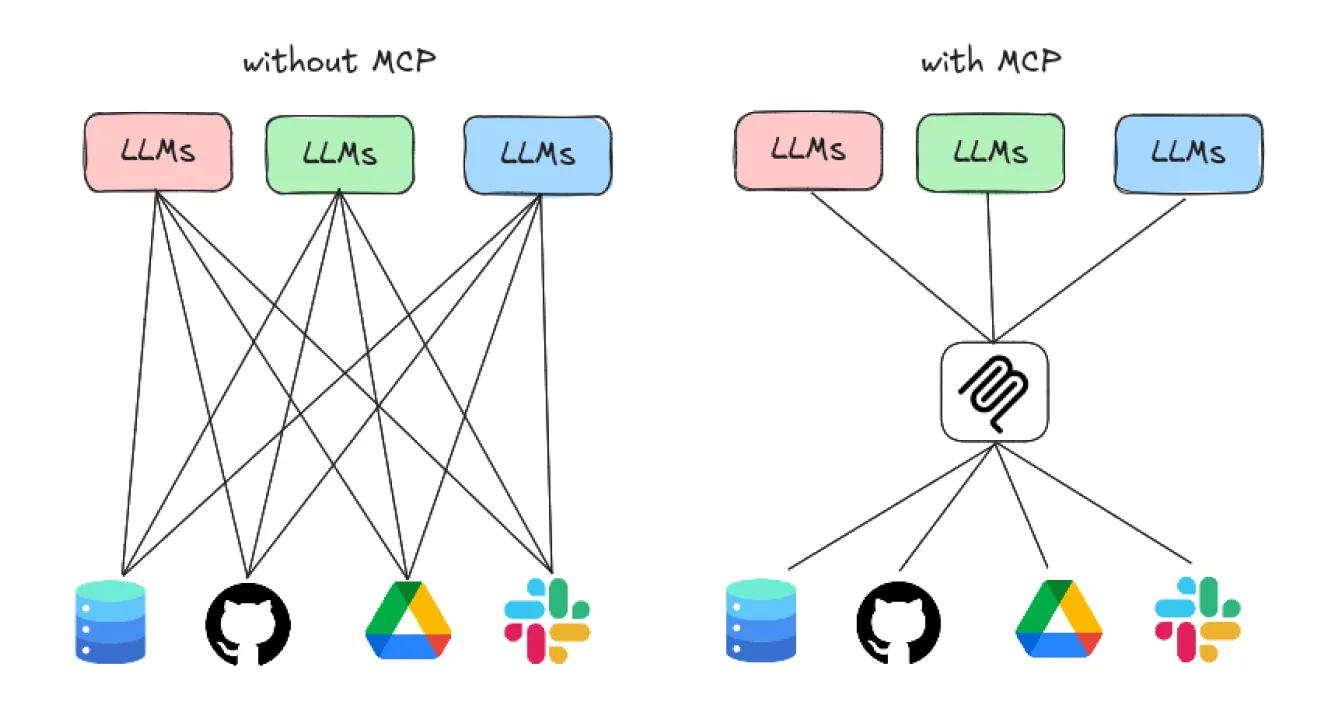

Integration capabilities and the Model Context Protocol (MCP)

Modern NLP agents excel when they can seamlessly connect with existing business systems and workflows. The Model Context Protocol (MCP) has emerged as a promising standard for enabling these connections.

Understanding the Model Context Protocol (MCP)

MCP provides a standardized way for language models to interact with external tools and data sources. This protocol allows AI agents to access real-time information, execute functions, and integrate with business applications without requiring custom integration work for each new connection.
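To give a feel for what "standardized" means here, the sketch below builds the kind of message a client might send to ask a server to run a tool. It assumes, based on the published MCP specification, that messages are JSON-RPC 2.0 and that tool invocations use a `tools/call` method with `name` and `arguments` parameters; verify the field names against the current specification before relying on them. The tool name `get_invoice` and its arguments are hypothetical.

```python
import itertools
import json

_request_ids = itertools.count(1)  # JSON-RPC requests carry a unique id

def make_tool_call(name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request asking a server to run a tool.

    The "tools/call" method and params layout follow the MCP specification
    as understood here; treat them as an assumption to verify.
    """
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }

# "get_invoice" is a hypothetical tool exposed by a hypothetical billing server.
request = make_tool_call("get_invoice", {"customer_id": "C-42", "month": "2024-06"})
print(json.dumps(request, indent=2))
```

Because every tool is called through the same envelope, an agent can work with a new server without bespoke client code for each integration.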

Real-world integration examples

Consider an AI concierge that needs to resolve a billing inquiry. With proper integration capabilities, the agent can access the customer's account information, review recent transactions, identify the source of confusion, and even process refunds or adjustments—all while maintaining a natural conversation with the customer.

Workflow automation and orchestration

Agentic AI workflows can trigger complex business processes, coordinate between different systems, and manage approvals. This enables organizations to automate not just individual tasks, but entire business processes that previously required human coordination.

Implementation considerations for NLP agents

Not every use case benefits from the complexity of custom AI agents, and they can be much more expensive than regular chat-style usage of LLMs. Understanding when agents add value versus simpler solutions is crucial for successful and cost-effective implementation.

When agents add value over simpler solutions

Agents excel in scenarios involving complex, multi-step processes that require decision-making and adaptation. If your use case involves gathering information from multiple sources, applying business logic, and coordinating several actions, an agentic AI approach may be appropriate.

High-variability tasks where the specific steps depend on context and circumstances are good candidates for agent implementation. Unlike rigid robotic process automation (RPA), which follows the same steps every time, agents can adapt their approach based on the specific situation.

Balancing autonomy with human oversight

Most successful agent implementations include mechanisms for human review of important decisions, escalation procedures for edge cases, and clear boundaries around what actions agents can take independently.
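
One way to express these boundaries is a small policy check that runs before the agent acts. The sketch below is an assumption-laden example: the action names, the `AUTO_REFUND_LIMIT` value, and the `review_action` function are hypothetical, and real limits would come from your own business rules.

```python
from enum import Enum


class Decision(Enum):
    AUTO_APPROVE = "auto_approve"  # agent may act on its own
    NEEDS_HUMAN = "needs_human"    # escalate for human review
    BLOCKED = "blocked"            # outside the agent's boundaries


# Hypothetical policy values chosen for illustration only.
AUTO_REFUND_LIMIT = 50.00
ALLOWED_ACTIONS = {"refund", "send_receipt"}


def review_action(action: str, amount: float = 0.0) -> Decision:
    """Decide whether an agent action can run without a human."""
    if action not in ALLOWED_ACTIONS:
        return Decision.BLOCKED
    if action == "refund" and amount > AUTO_REFUND_LIMIT:
        return Decision.NEEDS_HUMAN
    return Decision.AUTO_APPROVE


print(review_action("refund", 20.00))
print(review_action("refund", 500.00))
print(review_action("delete_account"))
```

Keeping the policy outside the model means the limits can be audited and changed without retraining or re-prompting the agent.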

Managing complexity and maintaining reliability

For straightforward, predictable tasks with clear inputs and outputs, simpler NLP solutions often provide better reliability and easier maintenance. The key is matching the complexity of your solution to the complexity of your problem.

Implementation considerations: Choosing the right approach

Successfully implementing NLP solutions requires careful consideration of technical requirements, organizational constraints, and business objectives. The right approach depends on your specific context and goals.

Key decision factors for NLP implementation

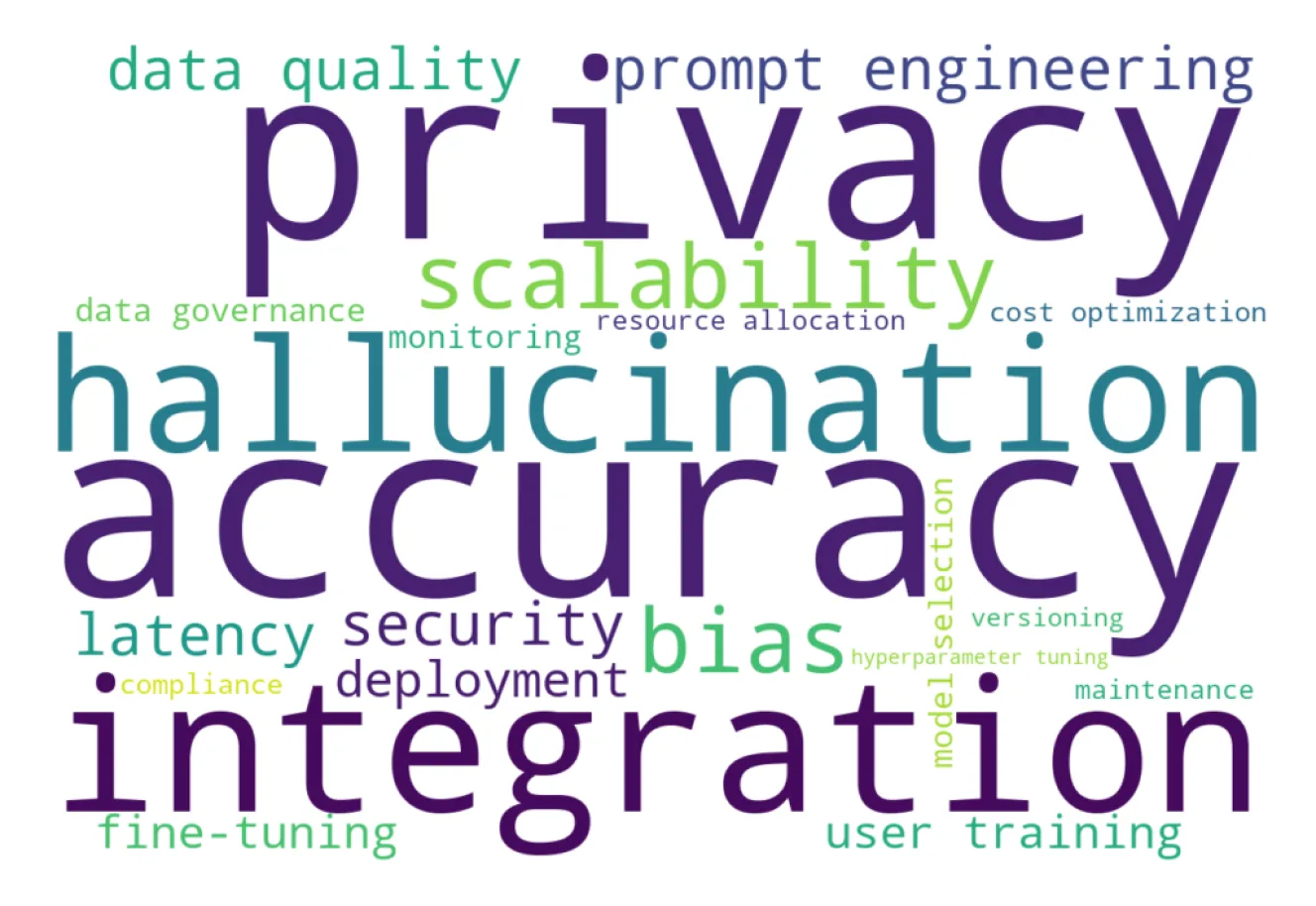

Accuracy requirements and validation processes

Accuracy requirements vary significantly across different applications. A customer service chatbot might tolerate occasional misunderstandings, while a medical diagnosis system requires extremely high precision. Understanding your AI accuracy threshold helps determine the appropriate technology stack and validation processes.
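
A validation process can be as simple as scoring the system on a labeled sample and comparing the result with the threshold you have agreed on. The following sketch uses precision as the metric. The threshold values and the tiny sample are invented for illustration; a real validation set would be far larger.

```python
def precision(predictions: list[bool], labels: list[bool]) -> float:
    """Share of positive predictions that were actually correct."""
    true_positives = sum(p and y for p, y in zip(predictions, labels))
    predicted_positives = sum(predictions)
    if predicted_positives == 0:
        return 0.0
    return true_positives / predicted_positives


def meets_threshold(predictions: list[bool], labels: list[bool],
                    threshold: float) -> bool:
    """Check a model's precision against an application-specific bar."""
    return precision(predictions, labels) >= threshold


# Hypothetical validation sample: model outputs vs. human labels.
preds = [True, True, False, True, False]
labels = [True, False, False, True, False]

score = precision(preds, labels)
print(f"precision = {score:.2f}")
print("ok for a chatbot (0.60 bar):", meets_threshold(preds, labels, 0.60))
print("ok for a high-stakes use (0.99 bar):", meets_threshold(preds, labels, 0.99))
```

The same model can pass one bar and fail another, which is why the threshold should be set per application before choosing a technology stack.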

Data sensitivity and privacy considerations

Data sensitivity and privacy considerations often drive architectural decisions. Organizations handling sensitive information may need on-premises deployment, specialized security measures, or careful data anonymization procedures. Financial services, healthcare, and legal organizations face particularly stringent requirements that affect technology choices.
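
As a rough illustration of an anonymization step, the sketch below masks email addresses and phone numbers before text is sent to a model. The two regular expressions are deliberately simple assumptions and will miss many real-world formats. Production systems typically rely on dedicated PII detection rather than hand-written patterns.

```python
import re

# Illustrative patterns only; they do not cover every valid format.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace emails and phone numbers with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


sample = "Reach me at jane.doe@example.com or +1 (555) 010-9999."
print(redact(sample))
```

Running redaction before any external call keeps raw identifiers inside your own systems, whichever model or deployment option you choose.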

Integration complexity with existing systems

Integration complexity depends on your existing technology ecosystem. Organizations with modern, API-driven architectures typically find NLP integration more straightforward than those with legacy systems requiring custom connectors or data transformation processes.

Scale and performance requirements

Scale and performance requirements influence both technology selection and infrastructure planning. Real-time applications like AI agent chat need low-latency responses, while batch-processing applications like document analysis can tolerate longer processing times in exchange for higher throughput.

Common implementation challenges and solutions

Data quality and preparation challenges

Data quality issues are among the most frequent obstacles to successful NLP implementation. Text data is often inconsistent, contains errors, or lacks the structure needed for effective processing. Investing in data cleaning, standardization, and quality monitoring processes pays dividends throughout the project lifecycle.
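
A basic cleaning and standardization pass might look like the sketch below. It uses only the Python standard library, and the specific steps (Unicode normalization, stripping leftover HTML tags, collapsing whitespace, case-insensitive deduplication) are one reasonable choice among many, not a prescribed pipeline. The function names are hypothetical.

```python
import re
import unicodedata


def clean_text(raw: str) -> str:
    """Normalize Unicode, drop leftover HTML tags, and collapse whitespace."""
    text = unicodedata.normalize("NFKC", raw)
    text = re.sub(r"<[^>]+>", " ", text)
    text = re.sub(r"\s+", " ", text)
    return text.strip()


def deduplicate(records: list[str]) -> list[str]:
    """Clean each record and keep the first of any case-insensitive repeats."""
    seen = set()
    unique = []
    for record in records:
        cleaned = clean_text(record)
        key = cleaned.casefold()
        if cleaned and key not in seen:
            seen.add(key)
            unique.append(cleaned)
    return unique


raw_feedback = [
    "  Great   product!\n",
    "<p>Great product!</p>",
    "great product!",
    "",
    "Slow\u00a0shipping",  # contains a non-breaking space
]
print(deduplicate(raw_feedback))
```

Tracking how many records each step changes or removes is a cheap form of quality monitoring, since a sudden jump usually signals an upstream problem.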

Managing stakeholder expectations

Managing expectations requires clear communication about what NLP systems can and cannot do. Stakeholders often have unrealistic expectations for AI agents and underestimate the additional complexity of enterprise applications. Setting realistic timelines and success metrics helps keep the project on track.

Handling edge cases and model limitations

Edge cases and model limitations inevitably emerge during real-world deployment. Building robust error handling, fallback procedures, and continuous monitoring for AI agents helps maintain system reliability even when encountering unexpected inputs or scenarios.
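
The error handling and fallback ideas above can be sketched as a thin wrapper around the model call. Everything named here is an assumption for illustration: `toy_model` stands in for a real model that returns a reply with a confidence score, and `CONFIDENCE_FLOOR` is an arbitrary value you would tune for your own system.

```python
from typing import Callable

CONFIDENCE_FLOOR = 0.7  # hypothetical cutoff below which we hand off
FALLBACK = "I'm not sure I understood. Let me connect you with a teammate."


def answer_with_fallback(
    message: str, model: Callable[[str], tuple[str, float]]
) -> dict:
    """Call the model, falling back on errors or low-confidence replies."""
    try:
        reply, confidence = model(message)
    except Exception as exc:  # any model failure triggers the fallback path
        return {"reply": FALLBACK, "escalated": True, "reason": f"error: {exc}"}
    if confidence < CONFIDENCE_FLOOR:
        return {"reply": FALLBACK, "escalated": True, "reason": "low confidence"}
    return {"reply": reply, "escalated": False, "reason": None}


def toy_model(message: str) -> tuple[str, float]:
    """Stand-in for a real model; returns (reply, confidence)."""
    if not message.strip():
        raise ValueError("empty message")
    if "refund" in message.lower():
        return ("I can help with that refund.", 0.92)
    return ("Could you rephrase?", 0.30)


for text in ["I need a refund", "asdf qwerty", ""]:
    print(answer_with_fallback(text, toy_model))
```

Logging the `reason` field for every escalation gives you the raw material for continuous monitoring, since recurring reasons point to the edge cases worth fixing first.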

Change management and user adoption

Change management often receives insufficient attention during NLP implementations. Users need training on how to interact effectively with NLP systems, and business processes may need adjustment to accommodate new capabilities. Planning for organizational change alongside technical implementation improves adoption rates. For more detail, see Sendbird's considerations on the most effective way to implement AI agents.

Making NLP work for you

NLP has evolved from an academic curiosity to a practical technology that can transform how organizations handle their most valuable asset: information. The journey from rule-based systems to modern large language models represents more than just technological progress—it represents a fundamental shift in how we can interact with and extract value from human language.

The unstructured data opportunity

The most significant opportunity for most organizations lies in unlocking insights from unstructured data. The emails, documents, customer feedback, and other text-based information that accumulate in every business contain patterns, insights, and opportunities that traditional analysis methods cannot capture. NLP provides the tools to transform this dormant asset into actionable intelligence.

The importance of context engineering

Context engineering is emerging as a critical skill for organizations implementing NLP solutions. The difference between mediocre and exceptional results often comes down to how well you supply relevant context through LLM system integrations, engineer effective AI prompts, and iteratively refine your AI systems. This is not just a technical consideration; it also requires a deep understanding of your business domain, processes, and objectives.

Getting started with NLP implementation

Organizations considering an NLP implementation, such as AI agents, should start with clear business objectives and well-defined use cases. The technology is powerful, but success depends on thoughtful application to real business problems. Consider beginning with pilot projects, for example on a no-code AI agent platform, supported by an experienced AI team that can help you demonstrate the value of AI agents.

The future of NLP

The landscape continues to evolve rapidly, with NLP agents and autonomous language systems representing the next frontier. These developments promise even greater automation and intelligence, but they also require careful consideration of governance, oversight, and integration with human workflows.

As NLP technology becomes more accessible and powerful through AI agent providers, the people who will benefit most are those who invest in understanding not just the technology itself but also how to apply it thoughtfully to their unique challenges and opportunities.

The future belongs to those who can effectively combine human insight with machine intelligence to unlock the full potential of their language data.

If you’re ready to leverage the full potential of NLP AI agents, Sendbird can help.