AI control: Agentic AI governance & change management (Part 2)

AI agents in customer service constantly evolve. They're updated with new logic, knowledge, and AI tools across teams and environments. Without a structured approach to AI governance and change management, this flexibility becomes a source of operational risk.

That’s why the second layer of AI trust at Sendbird is AI control.

Observing AI agents (covered in Part 1) is key to understanding how their logic is applied and how it shapes their behavior, but it’s not enough. Teams also need control mechanisms that define what an LLM agent is allowed to do, who can make changes, and when those changes take effect. Without strong AI governance, even a well-configured AI agent can drift into unintended behavior over time.


Why is AI governance necessary?

AI governance is essential to ensuring reliability, accountability, and consistency in enterprise agentic AI systems. Traditional software follows fixed, predictable logic. LLM agents, by contrast, operate in dynamic environments and handle nuanced, unpredictable conversations. As a result, their logic isn't static. Beyond changing inputs, it is also shaped by internal factors such as finely tuned prompts, LLM settings, AI toolsets, and more.

To manage this dynamism, organizations need precise mechanisms to track what’s changed and define clear scopes of responsibility for those who make changes.

AI governance matters because it reduces AI risk by ensuring that change is deliberate, transparent, and controlled. Its purpose is not to restrict flexibility. Without this accountability layer, it becomes difficult to answer questions like:

Who changed the AI agent’s workflow last week—and why?

What knowledge sources were added to production?

Why is one agent responding differently from another?

Answering these questions is crucial to maintaining confidence in your AI agent and ensuring AI accountability through collaborative development.

How does Sendbird support agentic AI governance?

Sendbird delivers a suite of AI governance capabilities built to match how enterprise teams operate. It gives organizations full control without slowing development and innovation.

Let’s review these AI governance and change management capabilities.

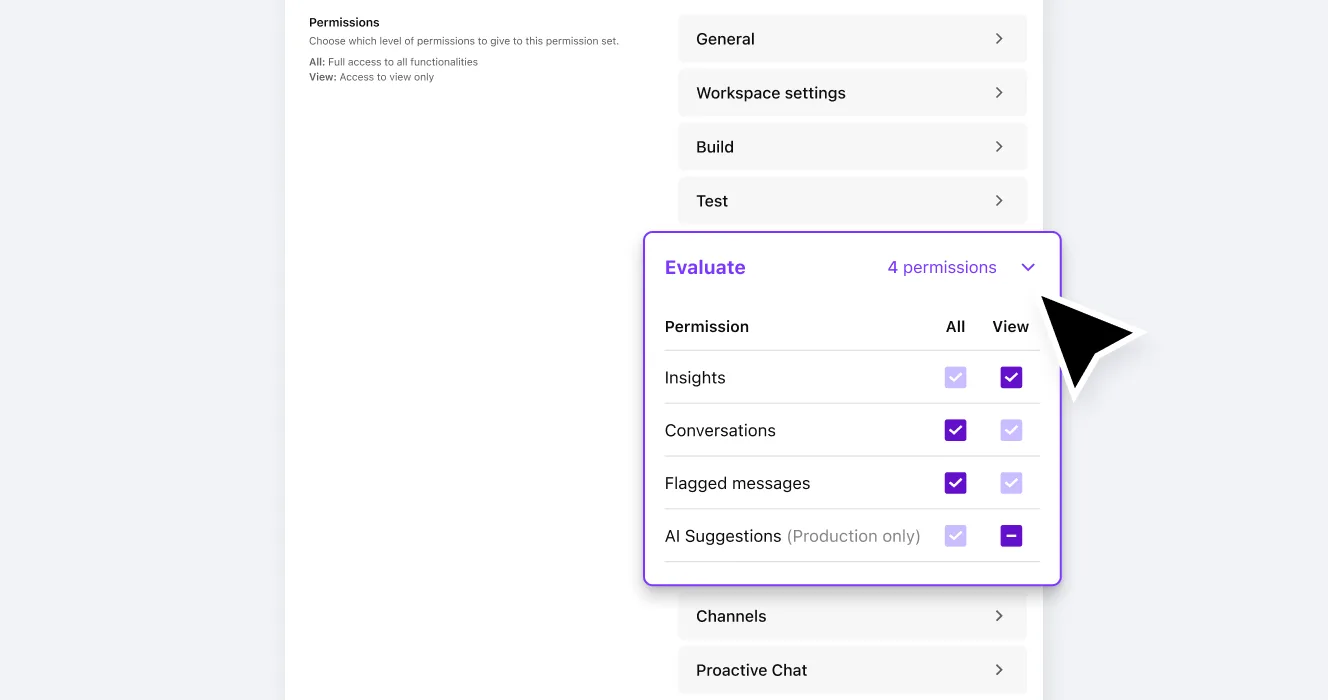

AI role-based access control (AI RBAC)

Not everyone should have permission to update production logic or deploy new content. AI RBAC lets teams:

Assign scoped roles to developers, prompt engineers, product owners, or operations leads

Restrict access to development or production environments, APIs, AI SOPs, or LLM agents

Enforce organizational boundaries between teams to prevent unauthorized edits and keep workflows efficient

AI RBAC brings operational discipline to AI agent development by clarifying ownership and enabling safer collaboration.
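To make the idea concrete, here is a minimal sketch of a deny-by-default, role-based permission check. This is not Sendbird's API. The role names, action names, and the `ROLE_PERMISSIONS` table are all hypothetical illustrations. Each role is granted an explicit set of (action, environment) pairs, and anything not listed is refused.

```python
from enum import Enum


class Env(Enum):
    DEVELOPMENT = "development"
    PRODUCTION = "production"


# Hypothetical roles mapped to the (action, environment) pairs they may perform.
# Anything not listed here is denied by default.
ROLE_PERMISSIONS: dict[str, set[tuple[str, Env]]] = {
    "prompt_engineer": {
        ("edit_prompt", Env.DEVELOPMENT),
        ("edit_sop", Env.DEVELOPMENT),
    },
    "developer": {
        ("edit_tool", Env.DEVELOPMENT),
        ("edit_prompt", Env.DEVELOPMENT),
    },
    "operations_lead": {
        ("deploy", Env.PRODUCTION),
        ("rollback", Env.PRODUCTION),
    },
}


def is_allowed(role: str, action: str, env: Env) -> bool:
    """Deny by default: unknown roles or unlisted actions get no access."""
    return (action, env) in ROLE_PERMISSIONS.get(role, set())


def require(role: str, action: str, env: Env) -> None:
    """Raise PermissionError unless the role holds this exact permission."""
    if not is_allowed(role, action, env):
        raise PermissionError(f"{role!r} may not {action} in {env.value}")
```

The key design choice is deny-by-default. A new role grants nothing until its permissions are written down, so ownership stays explicit.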

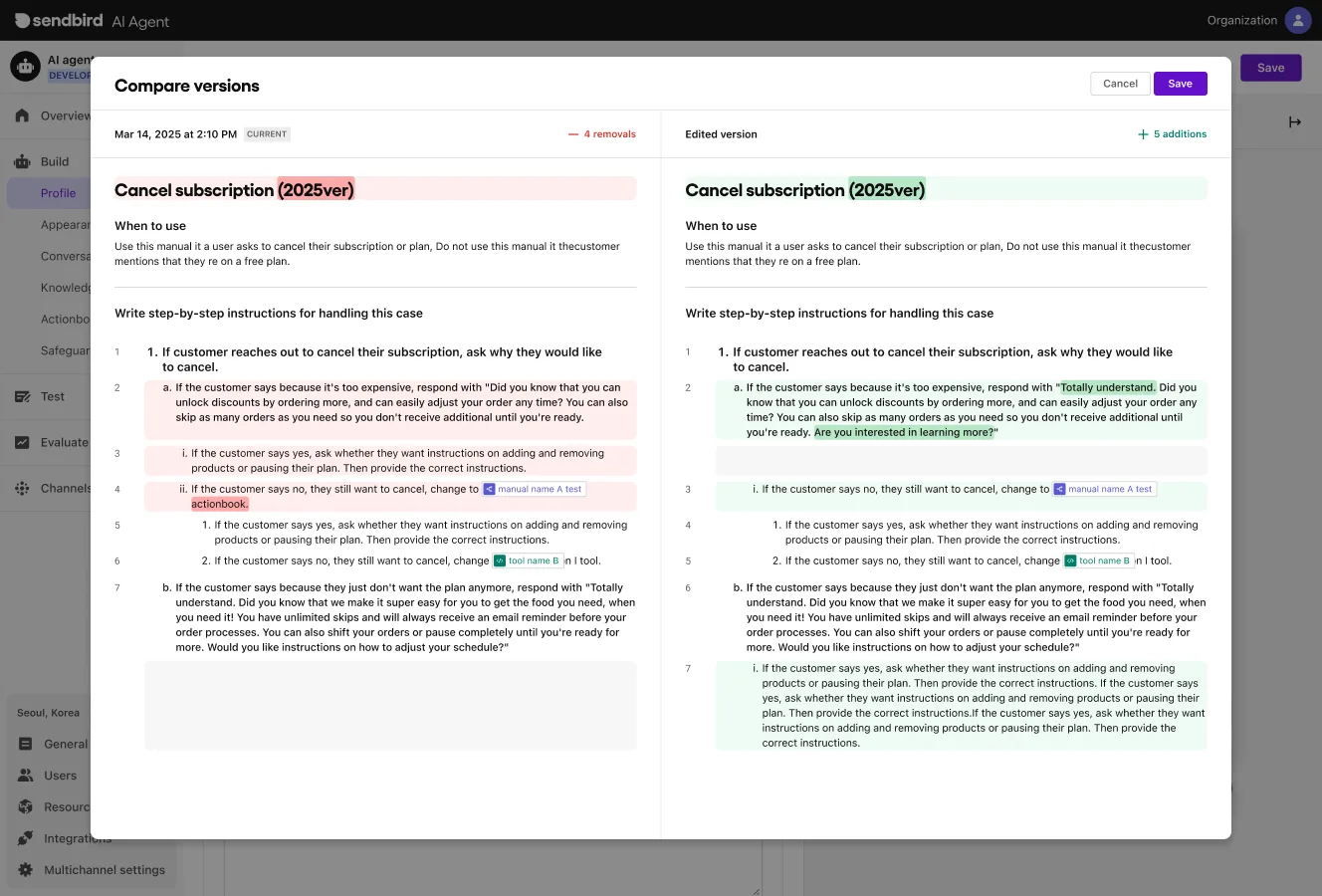

AI agent version control and rollback

Teams can track every version of their AI agents’ playbooks, knowledge bases, settings, and prompt configurations. They can:

See who made which changes, and when

Compare version histories across development and production

Roll back to a previous AI agent configuration instantly if an update introduces regressions

These AI change management capabilities make AI agent performance optimization safer, because every change can be traced, compared, and reversed.
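As an illustration of version history and rollback in general, here is a minimal sketch. It is not how Sendbird implements the feature. The `AgentHistory` class and the shape of the `config` dictionary are hypothetical. Each commit stores an immutable snapshot along with its author. Comparing two versions returns only the keys that changed. A rollback re-commits an older snapshot as a new version, so the audit trail is never rewritten.

```python
import copy
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Optional


@dataclass
class AgentVersion:
    number: int
    config: dict[str, Any]  # hypothetical shape: prompt, knowledge sources, LLM settings
    author: str
    note: str
    created_at: datetime


@dataclass
class AgentHistory:
    versions: list[AgentVersion] = field(default_factory=list)
    active: Optional[int] = None  # version number currently live

    def commit(self, config: dict[str, Any], author: str, note: str) -> AgentVersion:
        """Record a snapshot (deep-copied so later edits can't alter it) and activate it."""
        version = AgentVersion(
            number=len(self.versions) + 1,
            config=copy.deepcopy(config),
            author=author,
            note=note,
            created_at=datetime.now(timezone.utc),
        )
        self.versions.append(version)
        self.active = version.number
        return version

    def get(self, number: int) -> AgentVersion:
        if not 1 <= number <= len(self.versions):
            raise KeyError(f"no version v{number}")
        return self.versions[number - 1]

    def diff(self, a: int, b: int) -> dict[str, tuple[Any, Any]]:
        """Return {key: (value_in_a, value_in_b)} for every key that differs."""
        old, new = self.get(a).config, self.get(b).config
        return {
            key: (old.get(key), new.get(key))
            for key in sorted(old.keys() | new.keys())
            if old.get(key) != new.get(key)
        }

    def rollback(self, number: int, author: str) -> AgentVersion:
        """Restore an earlier config as a new version, keeping history append-only."""
        target = self.get(number)
        return self.commit(target.config, author, f"rollback to v{number}")
```

Example usage: after committing `{"temperature": 0.2}` and then `{"temperature": 0.9}`, calling `rollback(1, ...)` creates version 3 with the original settings. Version 2 remains in the history, so it still shows who made the regressing change.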

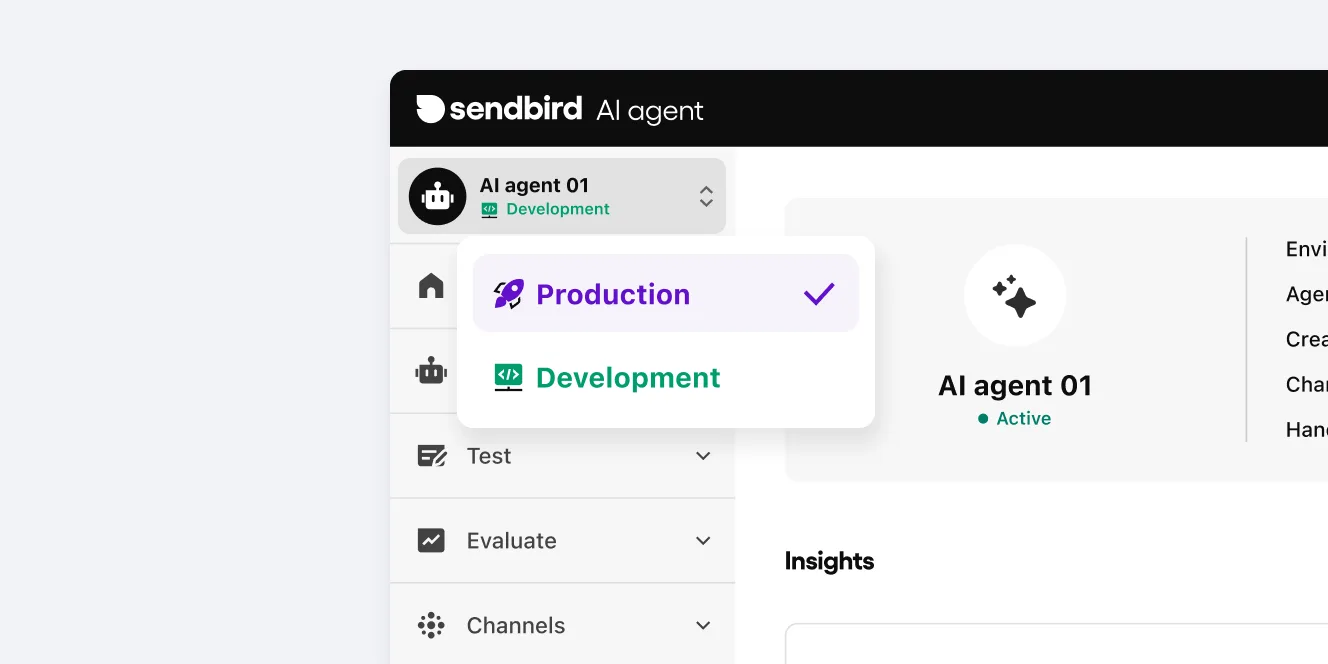

AI agent development and production separation

Sendbird's AI customer experience platform separates the development and production environments for AI agents, mirroring best practices from software engineering. Teams can:

Test changes in the AI agent sandbox before rollout

Deploy LLM agents in production while continuing to build in parallel

Push LLM agents that have passed quality control to production

This structure protects the AI agent's integrity in production while enabling iterative AI improvement.
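The development/production split can be sketched as two separate configuration copies and a gated promotion step. This is a hypothetical model, not Sendbird's interface. The `AgentEnvironments` class and the two example checks, `has_prompt` and `temperature_in_range`, are assumptions made for illustration. Edits only ever touch development. Production changes only when every quality check passes.

```python
import copy
from typing import Any, Callable

Config = dict[str, Any]
Check = Callable[[Config], bool]


class AgentEnvironments:
    """Hypothetical two-environment store. Edits land in development only;
    production changes only through promote()."""

    def __init__(self, initial: Config) -> None:
        self.development: Config = copy.deepcopy(initial)
        self.production: Config = copy.deepcopy(initial)

    def edit(self, key: str, value: Any) -> None:
        # Work continues in development while production keeps serving traffic.
        self.development[key] = value

    def promote(self, checks: list[Check]) -> list[str]:
        """Copy development to production only if every quality check passes.
        Returns the names of failed checks; an empty list means it was promoted."""
        failed = [check.__name__ for check in checks if not check(self.development)]
        if not failed:
            self.production = copy.deepcopy(self.development)
        return failed


# Example quality checks (hypothetical criteria).
def has_prompt(config: Config) -> bool:
    return bool(config.get("prompt"))


def temperature_in_range(config: Config) -> bool:
    return 0.0 <= config.get("temperature", 0.0) <= 1.0
```

A failed promotion leaves production untouched and reports which checks failed, so the team can fix the sandbox copy and try again.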

Selective AI agent deployment

Sendbird AI enables targeted releases. Teams can push updates with precision, deploying:

New AI logic without changing specific components, like a knowledge source

Additional AI tool integrations without affecting existing ones

Refined configurations to one agent while leaving others unaffected

This targeted approach to AI change management allows for incremental iteration without coordination delays or unwanted changes.
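One way to picture a targeted release: each agent's configuration is split into named components, and a deployment copies only the chosen components of one agent from development to production. The `selective_deploy` function and the agent/component layout below are hypothetical. They illustrate the idea rather than describe Sendbird's deployment API.

```python
import copy
from typing import Any

# Hypothetical layout: agent ID -> {component name -> component config}.
AgentState = dict[str, dict[str, Any]]


def selective_deploy(
    production: AgentState,
    development: AgentState,
    agent_id: str,
    components: set[str],
) -> AgentState:
    """Return a new production state in which only the listed components of
    one agent are copied from development. Other components and other
    agents are left exactly as they were."""
    missing = components - set(development[agent_id])
    if missing:
        raise KeyError(f"not found in development: {sorted(missing)}")
    updated = copy.deepcopy(production)
    agent = updated.setdefault(agent_id, {})
    for name in components:
        agent[name] = copy.deepcopy(development[agent_id][name])
    return updated
```

Example usage: deploying `{"tools"}` for a `billing` agent ships its new tool integration. That agent's knowledge source and prompt stay as they were, and every other agent is untouched. The function returns a new state instead of mutating the input, so the previous production state remains available for comparison.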

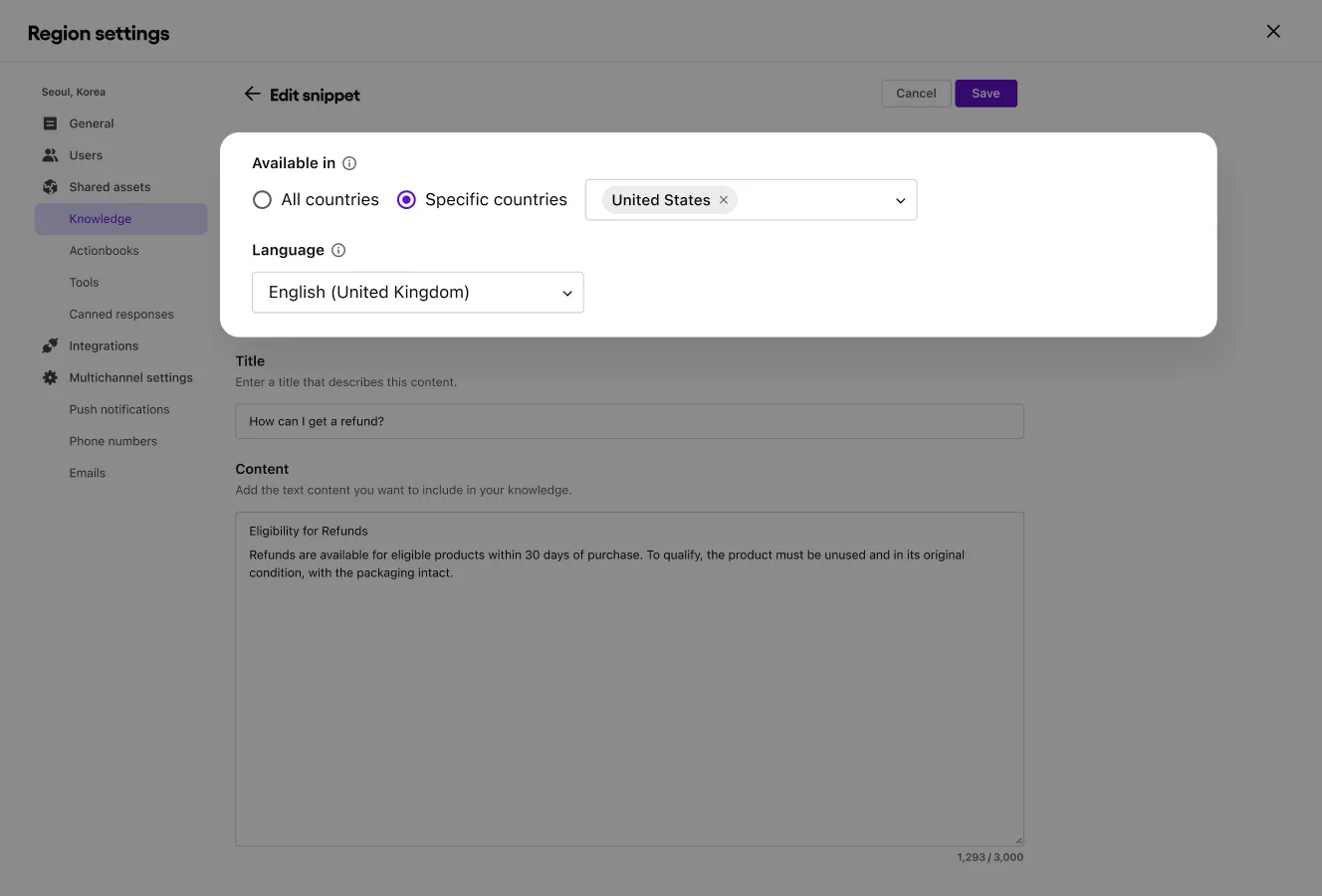

Regional AI agent behavioral logic and modular setup

Sendbird AI supports regional or customer-segment configurations in multi-agent environments. This enables LLM agents to share foundational assets, such as knowledge sources, while adapting their behavior to local or functional requirements. Governance remains centralized, even as logic is distributed.
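A common way to model "shared foundation, local behavior" is a layered lookup. Each region stores only the keys it overrides, and everything else falls through to a centrally governed base. The sketch below uses Python's standard `collections.ChainMap`. The specific keys, region codes, and values are hypothetical examples, not Sendbird settings.

```python
from collections import ChainMap
from typing import Any

# Hypothetical shared assets, owned and governed centrally.
SHARED_BASE: dict[str, Any] = {
    "knowledge_sources": ["help-center", "return-policy"],
    "tone": "friendly",
    "escalation_queue": "global-support",
}

# Hypothetical per-region overrides: only the keys that differ from the base.
REGIONAL_OVERRIDES: dict[str, dict[str, Any]] = {
    "eu": {"escalation_queue": "eu-support", "data_residency": "eu-west"},
    "jp": {"tone": "formal"},
}


def effective_config(region: str) -> dict[str, Any]:
    """Resolve a region's config: override keys win, and all other keys
    fall through to the shared base. Unknown regions get the base as-is."""
    return dict(ChainMap(REGIONAL_OVERRIDES.get(region, {}), SHARED_BASE))
```

Because regions store only their differences, an update to the shared knowledge sources reaches every regional agent at once. This is one way governance can stay centralized while behavior is distributed.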


It takes more than good AI governance and change management to trust your AI agent

AI governance and change management give you control over how LLM agents are built, tested, and released. Together, they reduce AI risk and enforce the structure that scaling AI operations requires. But in production, even a well-governed agent can behave in ways that create legal, reputational, or ethical exposure.

What happens when your LLM agent fails to moderate harmful chat content? When it hallucinates an answer in a regulated context? Or when it subtly violates a policy you didn’t realize was in scope?

AI governance streamlines collaboration and deployments, but it can’t always prevent a bad outcome.

That’s why the third trust layer of Sendbird is agentic AI compliance and safety.

In the next post, we’ll explore how Sendbird's AI agent platform helps teams implement built-in AI safeguards, real-time issue detection in AI conversations, and reporting, which together protect users and uphold responsible AI in live environments.

👉 Explore responsible AI (the third AI trust layer), or contact us to experiment with Sendbird's AI agent Trust OS.