AI agent observability: Building trust through AI transparency (Part 1)

Did you hear about the Chevy dealer’s chatbot that offered to sell a brand-new truck for $1? Funny—until it happens to your business.

Jokes aside, the more responsibility AI agents take on, the more critical it becomes to understand how they operate. If you can’t see what your LLM agent is doing and how it reaches its decisions, you’re exposed to whatever comes out: AI hallucinations, policy violations, and loss of trust.

In any autonomous system where decisions are made, whether by humans or Large Language Model (LLM) agents, observability is the prerequisite for effective operations. Without it, you can’t evaluate performance, trace errors, or improve your AI agent. You’re left guessing.

This is why AI observability is the very foundation of AI trust. It’s how you move from hoping your AI agent is behaving and performing correctly to knowing it is.

What does AI observability enable?

AI agent observability is critical to achieving operational trust in AI. It isn’t just about watching your AI agent; it’s about being able to inspect its behavior in depth and take corrective action based on what you observe. With this capability, teams can:

See what the AI agent did (AI transparency)

Trace how and why a decision was made step-by-step (AI explainability)

Verify alignment with policy and intended outcomes (AI auditability)

Identify errors, regressions, and blind spots before they scale (AI enhancement)

Ensure regional compliance with local laws, standards, and safety expectations (AI compliance)

Without this foundation for AI trust, enterprise teams face increased AI risk, longer customer support ticket resolution cycles, and decreased confidence in the AI agents they’re deploying.

How does Sendbird enable AI agent observability?

The Sendbird trust layer provides a robust suite of AI agent observability tools to monitor and understand agent behavior, logic, and performance. Located in the AI agent builder, these include:

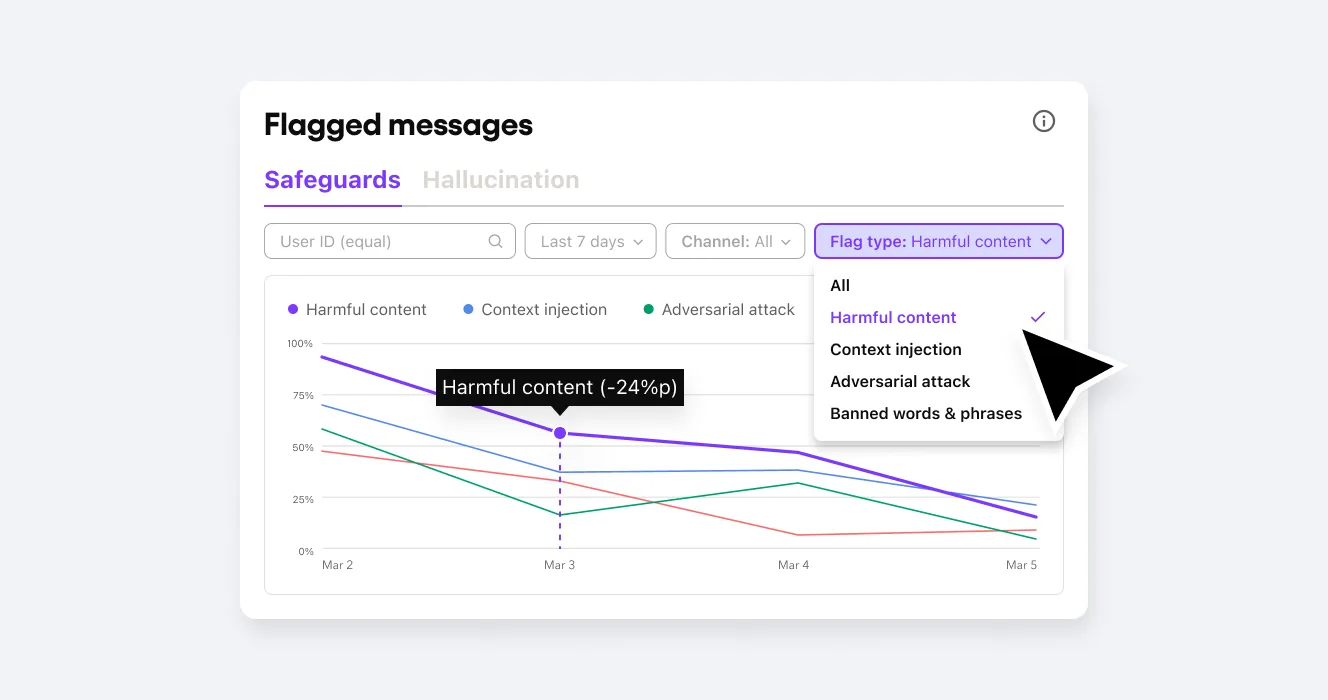

Real-time AI agent monitoring and alerting

Sendbird provides a complete trust system to:

Monitor AI conversations in real time

Instantly detect non-compliant AI behavior (policy violations)

Report unsafe AI behavior to humans or connected services (through APIs and webhooks)

This AI oversight ensures issues are caught and contained before they cause damage.

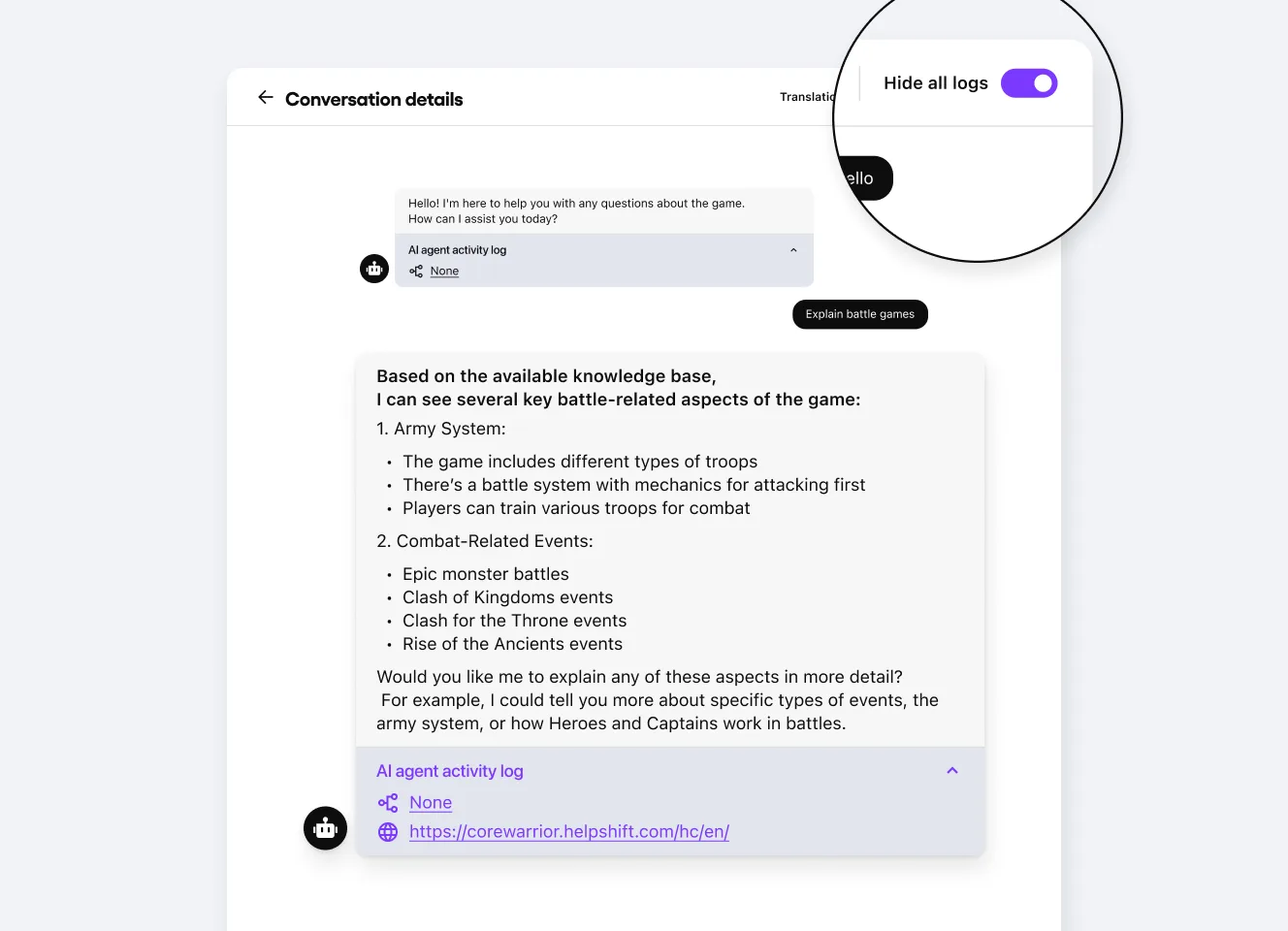
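To make the webhook path concrete, here is a minimal sketch of how a connected service might receive and route a violation report. The payload fields (`severity`), the HMAC-SHA256 signature scheme, and the route names are all assumptions made for illustration; they are not taken from Sendbird's documentation, so check the actual webhook reference before relying on any of them.

```python
import hashlib
import hmac
import json

# Hypothetical mapping from a violation's severity to a follow-up action.
SEVERITY_ROUTES = {
    "high": "page_on_call",
    "medium": "open_ticket",
    "low": "log_only",
}


def verify_signature(secret: bytes, body: bytes, signature_hex: str) -> bool:
    """Check an assumed HMAC-SHA256 hex signature over the raw request body."""
    expected = hmac.new(secret, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signature_hex)


def route_violation(secret: bytes, body: bytes, signature_hex: str) -> str:
    """Return the action to take for one incoming violation event."""
    if not verify_signature(secret, body, signature_hex):
        return "rejected"  # never act on an unauthenticated report
    event = json.loads(body)
    # Unknown or missing severities fall back to a human-reviewed ticket.
    return SEVERITY_ROUTES.get(event.get("severity"), "open_ticket")
```

Verifying the signature before parsing the body keeps a forged request from triggering an escalation, and defaulting unknown severities to a ticket errs on the side of human review.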

Activity log

AI agent activity logs provide a structured view of what the agent did, how, and why during real conversations:

See which APIs (tools) were triggered

Track which knowledge sources were used

See which Actionbooks (= AI Standard Operating Procedures) were followed

These insights help teams understand the reasoning behind every AI agent's decision and action.

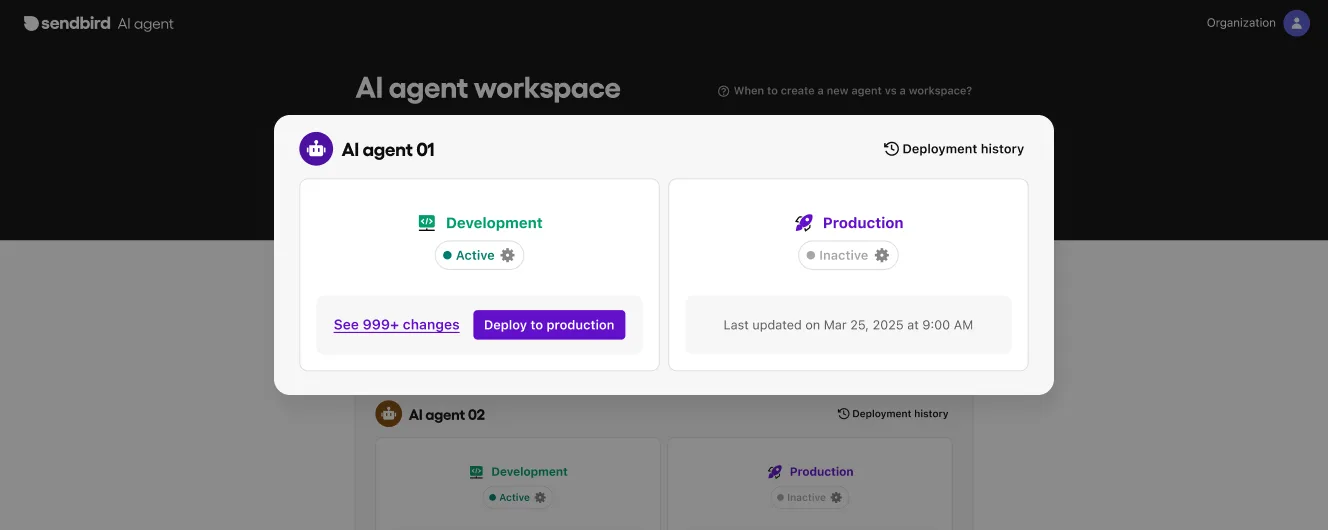
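As a rough illustration of what a structured activity log makes possible, the sketch below models log entries and groups them by type for one conversation. The `ActivityEntry` fields and the `summarize` helper are hypothetical and exist only for this example; the actual log schema in the AI agent builder may look quite different.

```python
from dataclasses import dataclass


@dataclass
class ActivityEntry:
    """One hypothetical activity log record."""
    conversation_id: str
    step: int          # order of the action within the conversation
    kind: str          # "tool", "knowledge", or "actionbook"
    name: str          # which tool, source, or Actionbook was involved
    reason: str = ""   # optional note on why the agent took this step


def summarize(entries, conversation_id):
    """Group one conversation's entries by kind, in step order."""
    summary = {"tool": [], "knowledge": [], "actionbook": []}
    for entry in sorted(entries, key=lambda e: e.step):
        if entry.conversation_id == conversation_id and entry.kind in summary:
            summary[entry.kind].append(entry.name)
    return summary
```

Given entries from several conversations, `summarize(entries, "c1")` returns only the tools, knowledge sources, and Actionbooks recorded for conversation `c1`, which is the view a reviewer needs when tracing a single decision.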

Audit logs and content change history

All changes made to the LLM agent are tracked with full attribution across development and production environments. Teams can:

Track AI prompt updates, agentic workflow edits, and LLM setting changes

Attribute all edits across environments (dev & production)

Compare Actionbook versions side by side for structured QA and rollback readiness

Ensure alignment with change management and compliance requirements

This provides teams with the insights to improve agent behavior over time.

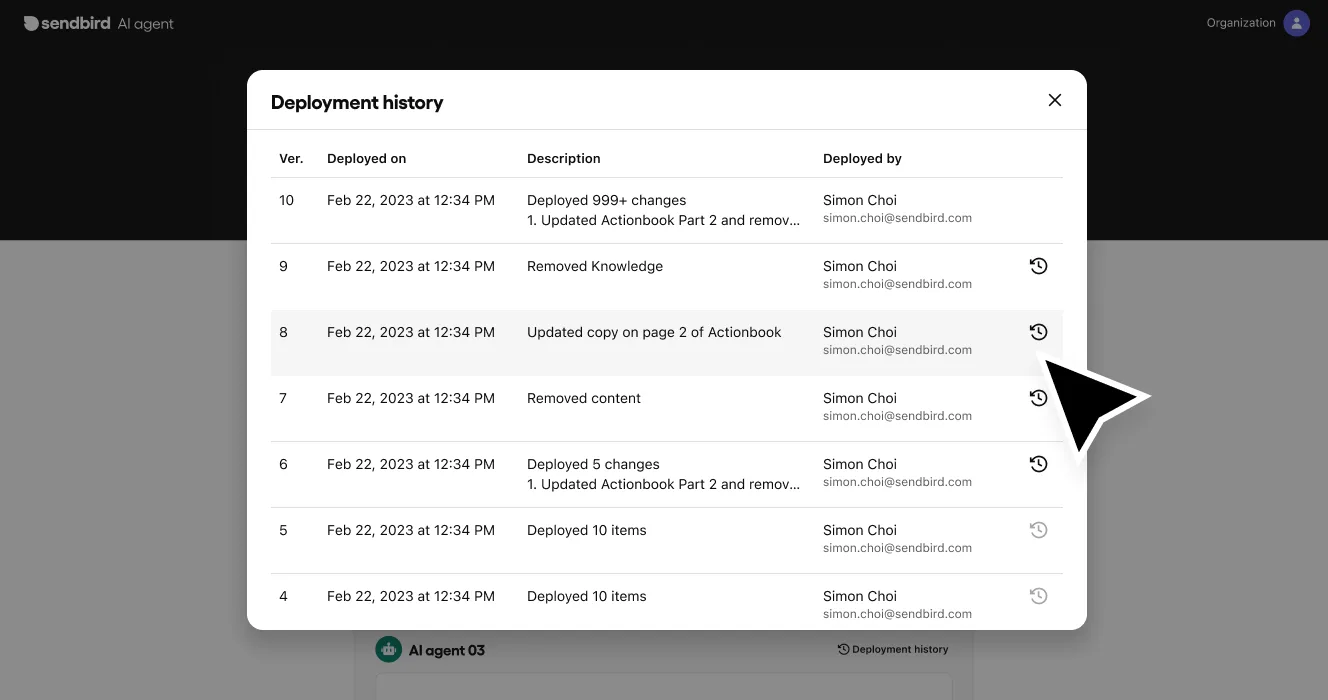
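The idea behind a side-by-side version comparison can be sketched with Python's standard `difflib` module. This assumes each Actionbook version can be exported as plain text, which is an assumption for the example and not something the product is confirmed to offer; the `diff_versions` helper and the labels are likewise hypothetical.

```python
import difflib


def diff_versions(old: str, new: str, old_label: str = "v1", new_label: str = "v2") -> list:
    """Return unified-diff lines between two text versions of an Actionbook."""
    return list(
        difflib.unified_diff(
            old.splitlines(),
            new.splitlines(),
            fromfile=old_label,
            tofile=new_label,
            lineterm="",  # inputs have no trailing newlines, so emit none
        )
    )
```

Lines prefixed with `-` exist only in the old version and lines prefixed with `+` exist only in the new one. An empty result means the two versions are identical, which is a quick check before a rollback.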

AI agent deployment logs

AI agent deployment logs provide a clear record of what was pushed to production and when, giving teams the clarity to troubleshoot issues and move fast with control. Teams can:

View a timestamped record of every AI deployment in production

Track which AI agent configuration was pushed and by whom

Diagnose what caused unexpected LLM agent behavior in production

Confirm that only reviewed and approved AI agent versions are in production

If something fails or performs unexpectedly in production, the deployment log is the first place to check.
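A small sketch shows why a timestamped deployment record helps with diagnosis: given the time of an incident, you can look up which version was live and who pushed it. The `Deployment` fields and both helpers are hypothetical, chosen to mirror the capabilities listed above and not an actual Sendbird schema.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Deployment:
    """One hypothetical deployment log record."""
    deployed_at: datetime
    version: str
    deployed_by: str
    approved: bool


def live_at(log, moment):
    """Return the most recent deployment at or before `moment`, or None."""
    candidates = [d for d in log if d.deployed_at <= moment]
    return max(candidates, key=lambda d: d.deployed_at, default=None)


def unapproved(log):
    """List deployments that reached production without approval."""
    return [d for d in log if not d.approved]
```

With an incident timestamp in hand, `live_at(log, incident_time)` points to the configuration to inspect first, and `unapproved(log)` flags any deployment that bypassed review.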

Why does AI observability matter?

AI observability is the foundation of AI trust and paves the way for everything else in AI operations. After all, you can only scale what you can see, understand, and control.

With the observability features built into Sendbird's AI agent platform, you get:

AI feedback loops: Identify, review, and improve performance across the lifecycle in one centralized AI agent dashboard

Real-time AI monitoring: Auto-detect violations, risks, and anomalies as they happen across systems

Centralized AI operations: A structured AI agent platform to confidently scale AI customer service

Next AI trust layer: From observing your AI agent to controlling it

AI observability gives you clear insight into what your AI agent is doing. But before you can trust your LLM agent, you also need control over how it’s configured, how it’s changed, and by whom.

This brings us to the second capability needed to build AI trust: AI control through AI governance and change management.

In the next post, we’ll cover how Sendbird AI brings discipline to managing your LLM agent through agentic AI governance, including version control, role-based access, and selective deployment of AI workflows.

👉 Learn about AI control (the second AI trust layer) or contact us to experiment with Sendbird's AI agent Trust OS.