Scaling AI: The need for enterprise AI agent architecture and infrastructure (Part 5)

As AI agents mature from pilot projects to core infrastructure, the challenge is no longer proving their value—it's ensuring they work everywhere, every time, and all at once.

But scaling AI customer support isn't just about handling more AI conversations. It's about making sure your AI agents perform to their full potential across many teams, regions, and use cases, each with its own risk threshold, without compromise.

This is why Sendbird's fifth layer of AI trust is scalable AI.

Part blueprint for deploying LLM agents across markets and part enterprise-grade communication infrastructure, it is how Sendbird's AI customer experience platform lets you deploy global AI concierges with confidence.


Why is AI agent scalability a must-have for enterprises?

In large organizations, LLM agents don't live in isolation. One team might build an AI concierge and another an onboarding agent, while others build agents specialized in retention, engagement, or regional variants.

Each LLM agent needs its own logic, tools, and knowledge, but this shouldn't require reconfiguring AI compliance and safety or re-evaluating the agent each time. Every LLM agent must behave consistently while adapting to local needs.

However, without a modular AI architecture and scalable AI infrastructure to support a growing AI workforce of specialized AI agents, organizations face issues like:

Higher maintenance and coordination overhead from duplicated agent configurations

Fragmented AI agent logic and behavior across business units

Inconsistent branding, AI compliance, and safety posture

Slow LLM agent rollouts that hold back AI transformation

How does Sendbird support AI scalability?

Sendbird AI offers a set of capabilities that support enterprise AI agent operations, including:

Modular multi-agent architecture

Sendbird allows organizations to orchestrate multiple AI agents from a unified platform. Each LLM agent can be customized for specific customer segments, use cases, and regional customs while sharing foundational assets—tools, Actionbooks (AI logic templates), and safety rules.

These shareable core configurations, combined with customized ones, accelerate time to market and save precious development time.

Local AI agents

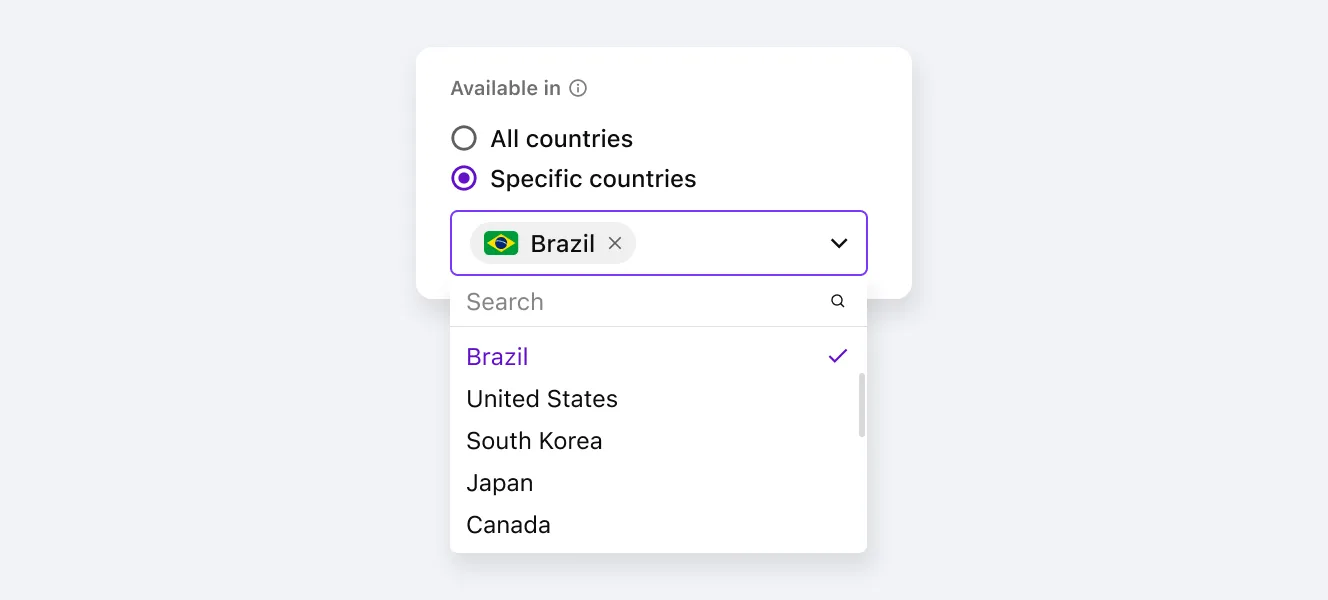

Sendbird lets you create LLM agents that adapt to local requirements, so teams can:

Quickly route customers to the right LLM agent based on geography, customer segment, or journey stage.

Continuously improve local AI performance based on regional feedback.

AI doesn’t scale globally unless it adapts locally. Sendbird makes it possible.
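To make the routing idea concrete, here is a minimal sketch of rule-based routing by geography, customer segment, and journey stage. It is not Sendbird's API. The `Customer` and `RoutingRule` types, the agent IDs, and the "most specific rule wins" policy are all hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Customer:
    region: str
    segment: str
    journey_stage: str


@dataclass(frozen=True)
class RoutingRule:
    # Hypothetical rule shape: None in a field means "match any value".
    agent_id: str
    region: Optional[str] = None
    segment: Optional[str] = None
    journey_stage: Optional[str] = None

    def matches(self, c: Customer) -> bool:
        pairs = (
            (self.region, c.region),
            (self.segment, c.segment),
            (self.journey_stage, c.journey_stage),
        )
        return all(want is None or want == have for want, have in pairs)

    def specificity(self) -> int:
        # Number of fields this rule actually constrains.
        return sum(f is not None for f in (self.region, self.segment, self.journey_stage))


def route(customer: Customer, rules: list[RoutingRule], default_agent: str) -> str:
    candidates = [r for r in rules if r.matches(customer)]
    if not candidates:
        return default_agent
    # The most specific matching rule wins; max() keeps the earliest rule on ties.
    return max(candidates, key=lambda r: r.specificity()).agent_id


if __name__ == "__main__":
    rules = [
        RoutingRule("concierge-global"),
        RoutingRule("concierge-jp", region="JP"),
        RoutingRule("onboarding-jp", region="JP", journey_stage="onboarding"),
        RoutingRule("retention-vip", segment="vip", journey_stage="renewal"),
    ]
    print(route(Customer("JP", "standard", "onboarding"), rules, "fallback"))  # onboarding-jp
    print(route(Customer("US", "vip", "renewal"), rules, "fallback"))  # retention-vip
```

Preferring the most specific rule lets a broad global agent coexist with narrow regional or lifecycle agents without reordering rules every time a new agent is added.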

Multilingual capabilities

Sendbird’s LLM agents come with built-in language detection and response translation. This enables AI agents to engage users in their preferred language without cloning or reconfiguring them for each locale. This is an essential feature for scaling AI customer service globally with ease.

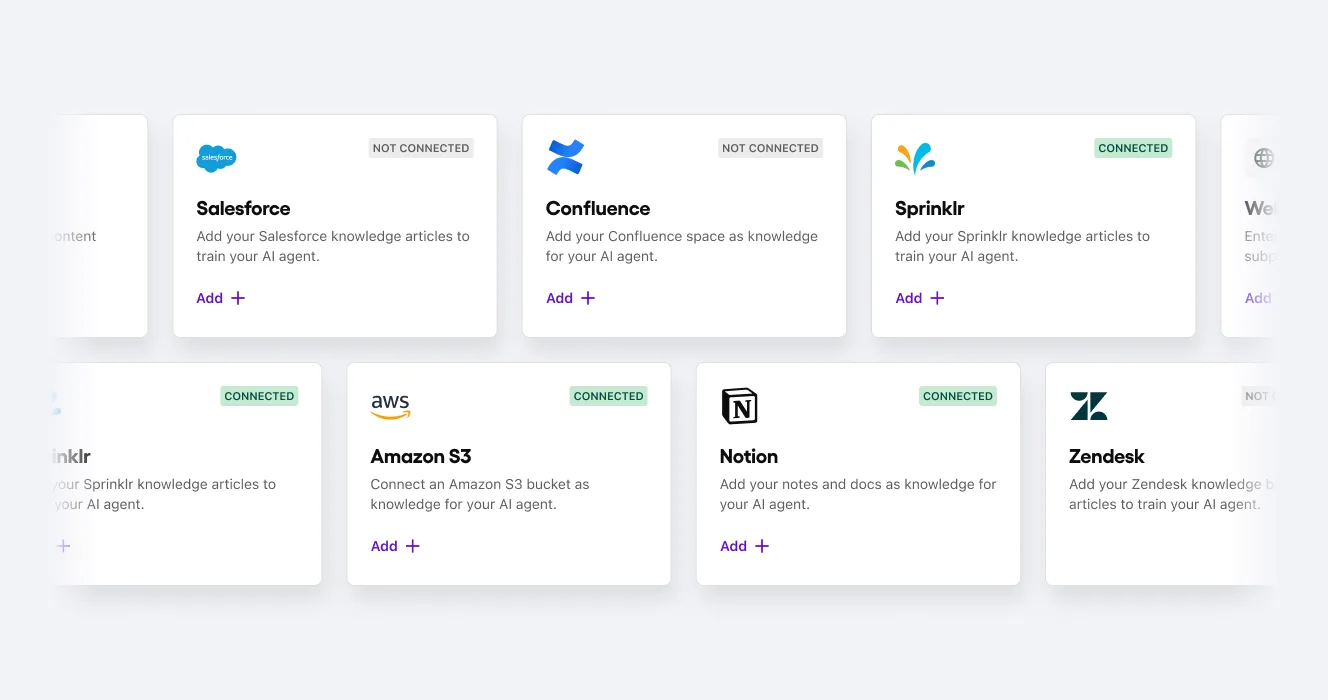

Shared workspace-level assets

You shouldn’t have to build every AI agent from scratch. Many resources—like API tools, knowledge bases, and Actionbooks—can be shared. Sendbird AI introduces a two-level configuration model where assets are managed centrally at the workspace level and can be selectively activated for each agent.

This way, you can:

Eliminate duplication

Maintain global consistency

Allow local customization through agent-level overrides

With Sendbird, you can create as many AI agent variations as you need from a single, unified platform.
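The two-level configuration model can be sketched as a workspace-wide asset catalog plus per-agent activation and overrides. This is a hypothetical illustration, not Sendbird's data model. The class names, the asset IDs, and the `locked` flag (used here to stop agents from overriding shared safety rules) are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Workspace:
    # Hypothetical catalog of shared assets (tools, knowledge bases, Actionbooks, safety rules).
    assets: dict[str, dict[str, Any]] = field(default_factory=dict)


@dataclass
class AgentConfig:
    name: str
    enabled_assets: list[str]
    # Agent-level overrides, keyed by asset ID.
    overrides: dict[str, dict[str, Any]] = field(default_factory=dict)


def resolve(workspace: Workspace, agent: AgentConfig) -> dict[str, dict[str, Any]]:
    """Build an agent's effective configuration from shared assets plus its overrides."""
    resolved: dict[str, dict[str, Any]] = {}
    for asset_id in agent.enabled_assets:
        if asset_id not in workspace.assets:
            raise KeyError(f"unknown workspace asset: {asset_id}")
        shared = workspace.assets[asset_id]
        override = agent.overrides.get(asset_id, {})
        # Assumed policy: assets marked locked keep one definition across all agents.
        if override and shared.get("locked", False):
            raise ValueError(f"{asset_id} is locked at the workspace level")
        merged = dict(shared)  # copy so the shared definition is never mutated
        merged.update(override)
        resolved[asset_id] = merged
    return resolved
```

For example, a Japanese concierge could enable a shared `faq_kb` asset and override only its `locale`, while inheriting a locked `safety_rules` asset unchanged. Overrides for assets the agent has not enabled are simply ignored.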

Environment separation for safe operation

Sendbird enforces a strict separation between AI agents' development and production environments, so teams can iterate quickly without disrupting live operations. This separation is essential for scaling deployments and accelerating time to value.

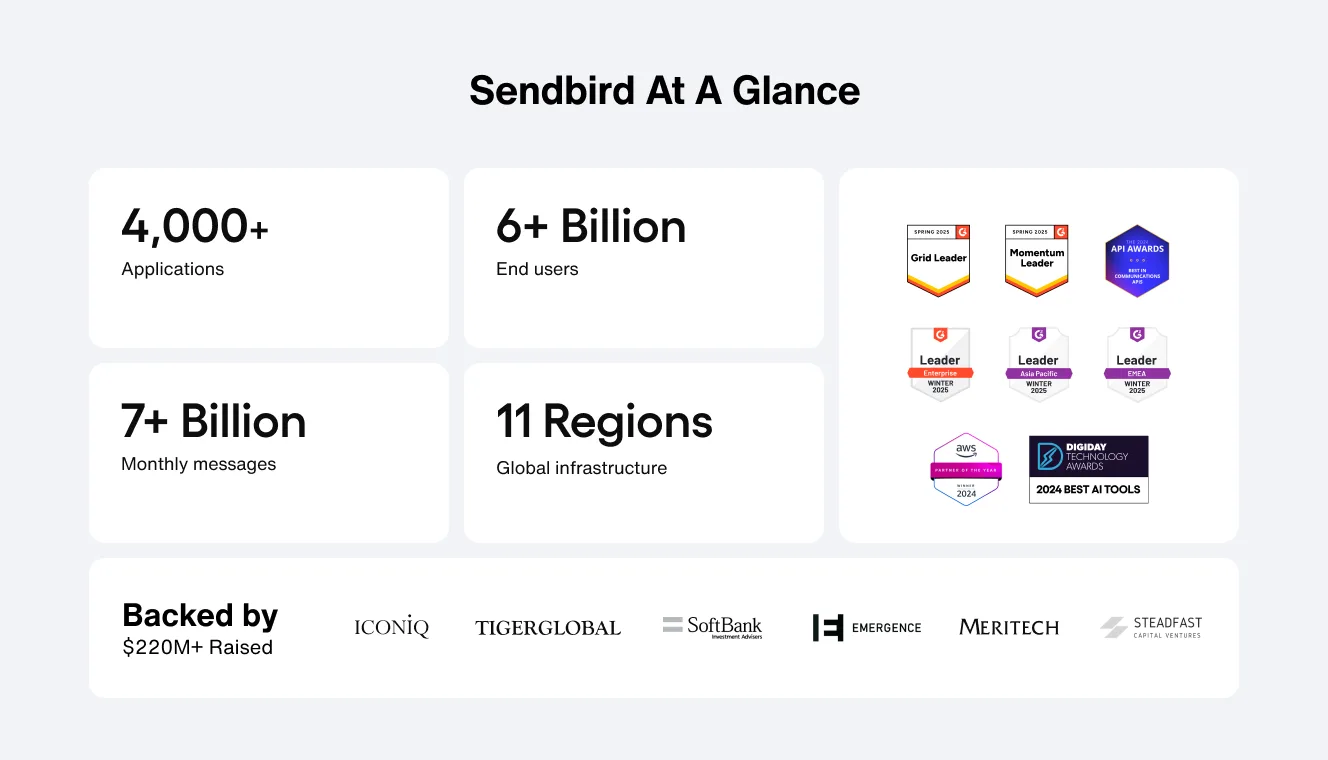
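One common way to separate environments is to edit a development copy and promote immutable snapshots to production. The sketch below assumes that pattern. The `AgentEnvironments` class and its methods are hypothetical and do not describe how Sendbird implements the separation.

```python
import copy
from dataclasses import dataclass, field
from typing import Any


@dataclass
class AgentEnvironments:
    # Working copy that teams are free to change.
    development: dict[str, Any] = field(default_factory=dict)
    # Published production snapshots; the last entry is the live version.
    production_history: list[dict[str, Any]] = field(default_factory=list)

    def edit(self, key: str, value: Any) -> None:
        # Edits only ever touch the development copy.
        self.development[key] = value

    def promote(self) -> int:
        # Deep-copy so later development edits cannot leak into production.
        self.production_history.append(copy.deepcopy(self.development))
        return len(self.production_history)  # version number, starting at 1

    def live(self) -> dict[str, Any]:
        if not self.production_history:
            raise RuntimeError("nothing has been promoted to production yet")
        # Return a copy so callers cannot mutate the live snapshot.
        return copy.deepcopy(self.production_history[-1])
```

Because production only changes through `promote()`, a half-finished edit in development never reaches live conversations.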

Proven real-time, omnichannel communication infrastructure

The Sendbird AI agent platform is built on trusted omnichannel cloud communication infrastructure that supports over seven billion monthly conversations across 4,000+ global brands, including Yahoo, Hinge, Virgin Mobile, Redfin, Lotte, and more.

It powers proactive, multi-channel AI customer service across voice, email, SMS, WhatsApp, web, and mobile channels.

Sendbird’s AI agent platform features:

Global ultra-low latency

Enterprise-grade security and compliance

High availability under unpredictable load

Real-time omnichannel customer reach

Businesses that value operational AI security, reliability, and scalability choose Sendbird.


Replacing AI risk with trust in AI agents

AI trust can't be built on a scattered set of features. It must be grounded in a system designed to build confidence in your AI agents. Sendbird's AI agent platform unifies five essential layers of trust (transparency, control, responsibility, reliability, and scale) into one framework: Trust OS, the solution for enterprise-ready AI.

"We chose Sendbird and Anthropic Claude for three reasons: accuracy for QA work, supplier access management, and scalability for future AI services."

Oh Ju-young, AI Team at Lotte Homeshopping

👉 Check out the Lotte case study

👉 To learn more about Trust OS, request a demo today or explore the first AI trust layer of Trust OS: AI observability.