Introducing Trust OS: The foundation for responsible AI agents

When it comes to AI, we’ve all heard the same promises: “enterprise-grade,” “secure by design,” “reliable.” But too often, those promises ring hollow.

In today’s AI arms race, most companies want their AI agents to resolve tickets, take real action, and move the business forward. They want AI to be a driver of outcomes, not just a chatbot. But here’s the uncomfortable truth: The more agency you give your AI, the more trust it demands. And most enterprise AI agent platforms aren’t ready for that tradeoff.

At Sendbird, we power over 7 billion conversations every month, serving more than 4,000 customers around the world—including some of the most complex and demanding brands. For our customers, trust isn’t a luxury. It’s the foundation everything else is built on.

Imagine bringing on a junior employee and asking them to handle your most sensitive customer escalations on day one. You give them access to internal tools, customer data, and the authority to take real action, like processing refunds or resetting passwords. But you provide no training, no supervision, and no way to monitor what they’re doing. There’s no audit trail, no oversight, no way to reverse a mistake.

You would never do that with a human agent. But that’s how most companies are deploying AI agents today. They’re delegating responsibility to systems they can’t fully see, test, or control. Real trust doesn’t come from hope or optimism. It comes from having the systems in place to evaluate, govern, and improve your AI. It comes from accountability.

Meet Trust OS: The industry’s first system for AI accountability

Today, I’m proud to introduce Sendbird Trust OS, a foundational system built to make AI agents accountable, responsible, and ready for enterprise scale. It’s everything you’d expect from a mission-critical platform: testability, traceability, governance, human oversight, and global scale.

It’s built on four core pillars:

- Observability – See what your AI is doing, and why

- Control – Guardrails to deploy with confidence

- Oversight – Great AI still needs a manager

- Scale-proven infrastructure – Global, multilingual, enterprise-ready

Observability: See what your AI is doing, and why

You can’t trust what you can’t see. That’s why Trust OS gives you visibility into every AI decision, before and after deployment.

Without clear insight into how your AI agent makes decisions, you’re flying blind. Observability is the first pillar of Trust OS, and it’s designed to bring full transparency to every interaction. With Conversation Benchmark Testing, teams can simulate multi-turn conversations and compare AI responses against expected outcomes before an AI agent talks to a customer. These tests can be scheduled to run continuously in the background, helping you identify regressions or blind spots before they impact customers.
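To make the idea concrete, here is a minimal sketch of multi-turn benchmark testing in Python. It is a hypothetical illustration, not the Conversation Benchmark Testing API: `BenchmarkCase`, `run_case`, `run_suite`, and `toy_agent` are invented for this example, and the “expected outcome” is simplified to phrases each reply should contain. Real evaluations would likely use richer comparisons than substring checks.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical agent interface: takes the conversation so far, returns the next reply.
Agent = Callable[[list[dict[str, str]]], str]


@dataclass
class BenchmarkCase:
    name: str
    user_turns: list[str]
    # For each user turn, phrases the agent's reply is expected to contain.
    expected: list[list[str]]


@dataclass
class CaseResult:
    name: str
    passed: bool
    failures: list[str] = field(default_factory=list)


def run_case(agent: Agent, case: BenchmarkCase) -> CaseResult:
    """Replay a multi-turn conversation and compare each reply with its expectations."""
    history: list[dict[str, str]] = []
    failures: list[str] = []
    for i, (turn, must_contain) in enumerate(zip(case.user_turns, case.expected), start=1):
        history.append({"role": "user", "content": turn})
        reply = agent(history)
        history.append({"role": "assistant", "content": reply})
        missing = [p for p in must_contain if p.lower() not in reply.lower()]
        if missing:
            failures.append(f"turn {i}: missing {missing}")
    return CaseResult(case.name, not failures, failures)


def run_suite(agent: Agent, cases: list[BenchmarkCase]) -> list[CaseResult]:
    """Run every case; a scheduler could call this periodically to catch regressions."""
    return [run_case(agent, case) for case in cases]


# Toy agent standing in for a real AI agent.
def toy_agent(history: list[dict[str, str]]) -> str:
    last = history[-1]["content"].lower()
    if "refund" in last:
        return "I can help with your refund. Could you share your order number?"
    if "order" in last:
        return "Thanks, your refund has been submitted."
    return "Could you tell me more?"


refund_case = BenchmarkCase(
    name="refund flow",
    user_turns=["I want a refund", "My order number is 123"],
    expected=[["order number"], ["refund", "submitted"]],
)
results = run_suite(toy_agent, [refund_case])
print(results[0].passed)  # True
```

Because the whole conversation history is passed on every turn, a regression in how the agent handles later turns shows up as a failure on that specific turn rather than as a vague overall score.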

But AI observability goes deeper than testing. Every AI interaction is recorded with detailed activity logs, showing exactly which Actionbooks were triggered, which API calls were made, and what knowledge sources were used to inform a response. Audit logs help you trace every change across your deployment. Together, these capabilities form a diagnostic layer that enables proactive quality assurance—so you’re not just reacting to AI mistakes, you’re preventing them.

Control: Guardrails to deploy with confidence

Enterprise teams move fast, but trust requires safety at every step. Trust OS gives you the controls to move quickly without compromising integrity.

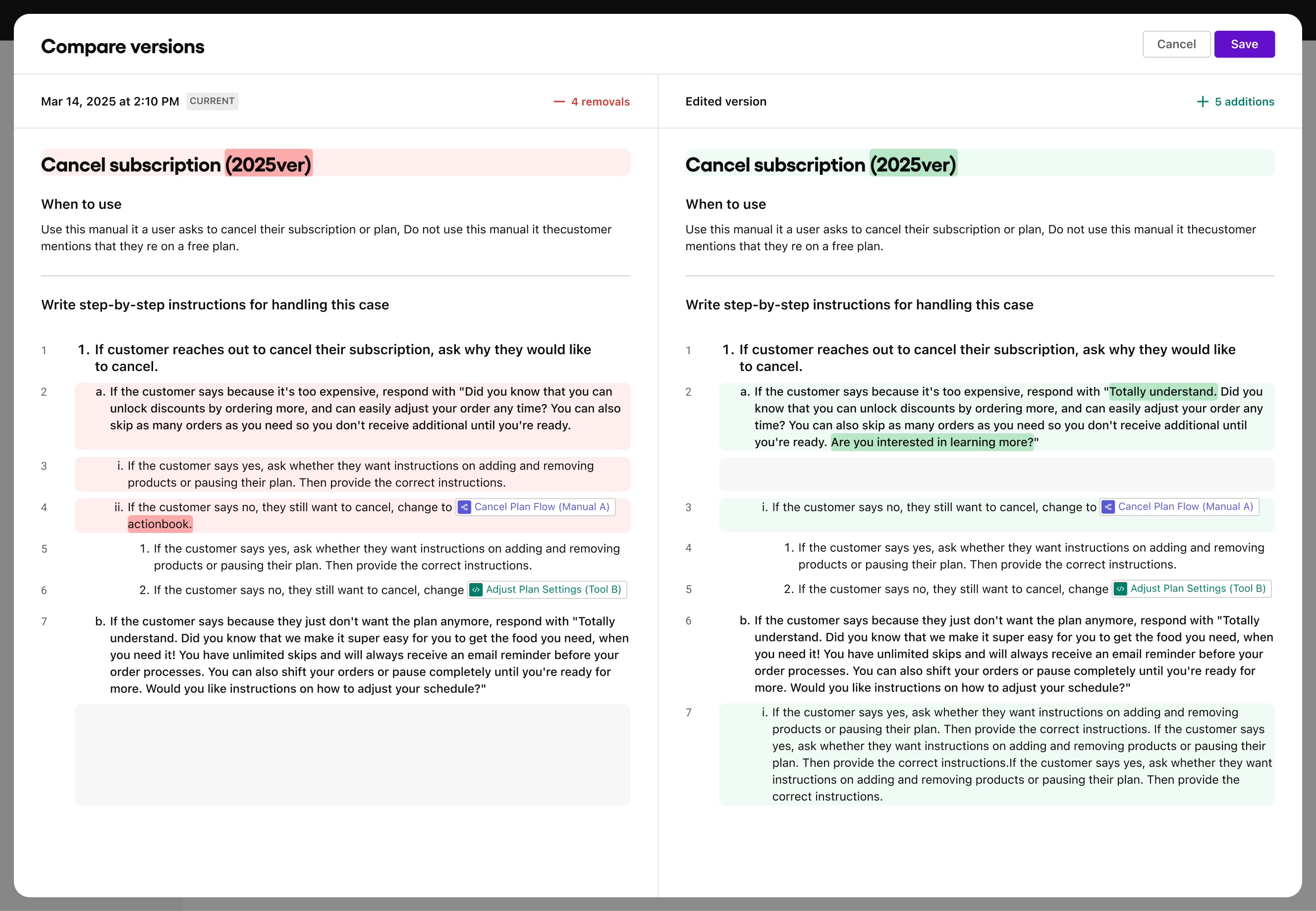

AI governance frameworks aren’t about slowing down; they’re about moving fast without breaking trust. Control is the second pillar of Trust OS, giving your team a robust framework for managing changes with precision. Version control allows you to track prompt and configuration history across your AI agents, compare changes side-by-side, and roll back when needed. Development and production environments are separated, ensuring that updates can be thoroughly tested before being deployed to end users.
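As a rough illustration of what prompt version control involves, the sketch below keeps an append-only history, diffs two versions, and rolls back by re-activating an earlier one. `PromptHistory` and its methods are hypothetical names for this example and do not describe how Trust OS stores versions; the diff uses Python’s standard `difflib`.

```python
import difflib
from dataclasses import dataclass


@dataclass(frozen=True)
class Version:
    number: int
    prompt: str
    note: str


class PromptHistory:
    """Append-only prompt history for one agent (hypothetical, for illustration)."""

    def __init__(self, initial_prompt: str) -> None:
        self._versions = [Version(1, initial_prompt, "initial")]
        self._active = 1  # number of the version currently in use

    @property
    def active(self) -> Version:
        return self._versions[self._active - 1]

    def _get(self, number: int) -> Version:
        if not 1 <= number <= len(self._versions):
            raise ValueError(f"no version {number}")
        return self._versions[number - 1]

    def commit(self, prompt: str, note: str) -> Version:
        """Record a new version and make it active."""
        version = Version(len(self._versions) + 1, prompt, note)
        self._versions.append(version)
        self._active = version.number
        return version

    def diff(self, a: int, b: int) -> str:
        """Unified diff between the prompts of two versions."""
        old = self._get(a).prompt.splitlines()
        new = self._get(b).prompt.splitlines()
        return "\n".join(difflib.unified_diff(old, new, f"v{a}", f"v{b}", lineterm=""))

    def rollback(self, number: int) -> Version:
        """Re-activate an earlier version; later versions are kept for auditing."""
        self._active = self._get(number).number
        return self.active


history = PromptHistory("You are a helpful support agent.")
history.commit(
    "You are a helpful support agent.\nNever promise refunds over $100.",
    "add refund cap",
)
print(history.diff(1, 2))
history.rollback(1)
print(history.active.number)  # 1
```

Rolling back by moving a pointer, rather than deleting newer versions, keeps every change on record, which fits the audit-trail goal of the Observability pillar.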

When you’re ready to launch, you can choose exactly which updates to deploy. It’s granular, intentional, and enterprise-safe. Role-based access control (RBAC) lets you define who can do what across the system, so developers, prompt engineers, and support leads each operate within the boundaries of their responsibilities.
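Role-based access control usually comes down to a mapping from roles to permissions plus a deny-by-default check. The roles and permissions below are hypothetical examples chosen to mirror the paragraph above, not Trust OS’s actual role model.

```python
from enum import Enum, auto


class Permission(Enum):
    EDIT_PROMPT = auto()
    RUN_TESTS = auto()
    DEPLOY_TO_PRODUCTION = auto()
    VIEW_LOGS = auto()
    DEACTIVATE_AGENT = auto()


# Hypothetical role-to-permission mapping; each organization would define its own.
ROLE_PERMISSIONS: dict[str, frozenset[Permission]] = {
    "developer": frozenset({
        Permission.EDIT_PROMPT, Permission.RUN_TESTS,
        Permission.DEPLOY_TO_PRODUCTION, Permission.VIEW_LOGS,
    }),
    "prompt_engineer": frozenset({
        Permission.EDIT_PROMPT, Permission.RUN_TESTS, Permission.VIEW_LOGS,
    }),
    "support_lead": frozenset({Permission.VIEW_LOGS, Permission.DEACTIVATE_AGENT}),
}


def is_allowed(roles: set[str], needed: Permission) -> bool:
    """Deny by default: allow only if some assigned role grants the permission."""
    return any(needed in ROLE_PERMISSIONS.get(role, frozenset()) for role in roles)


def require(roles: set[str], needed: Permission) -> None:
    """Raise instead of silently proceeding when a permission is missing."""
    if not is_allowed(roles, needed):
        raise PermissionError(f"roles {sorted(roles)} lack {needed.name}")


print(is_allowed({"support_lead"}, Permission.DEACTIVATE_AGENT))  # True
print(is_allowed({"prompt_engineer"}, Permission.DEPLOY_TO_PRODUCTION))  # False
```

Unknown roles map to an empty permission set, so a typo in a role name fails closed rather than granting access.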

Oversight: Great AI still needs a manager

Even the most advanced AI agents need a human in the loop. That’s why the third pillar of Trust OS is oversight, built with multiple safety nets to catch, flag, and fix problems before they escalate. With AI Agent Scorecards, teams can evaluate conversations across key dimensions like tone, clarity, relevance, and solution effectiveness. This makes it easy to identify weak spots in AI performance and continuously improve through direct human feedback.

When something goes off-script, Trust OS flags it automatically. AI monitoring surfaces hallucinations, harmful content, context injection, banned phrases, and other policy violations before they become customer issues. And if a serious problem arises, you can instantly deactivate the AI agent. This kind of oversight transforms your AI from a black box into a supervised system that is always learning, always improving, and always accountable.
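Of the checks listed above, banned-phrase detection is the simplest to illustrate. The sketch below pairs it with a kill switch; `AgentMonitor`, `Flag`, and the example phrases are hypothetical, and the code makes no attempt at hallucination or injection detection, which require far more than string matching.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Flag:
    rule: str
    detail: str


@dataclass
class AgentMonitor:
    """Toy policy monitor with a kill switch (hypothetical, for illustration)."""
    banned_phrases: list[str]
    active: bool = True
    flags: list[Flag] = field(default_factory=list)

    def check(self, reply: str) -> list[Flag]:
        found = []
        for phrase in self.banned_phrases:
            # Case-insensitive whole-phrase match; the lookarounds keep it from
            # matching inside longer words, even if the phrase ends in punctuation.
            pattern = rf"(?<!\w){re.escape(phrase)}(?!\w)"
            if re.search(pattern, reply, re.IGNORECASE):
                found.append(Flag("banned_phrase", phrase))
        self.flags.extend(found)  # keep a running record for human review
        return found

    def deactivate(self) -> None:
        """Kill switch: mark the agent inactive so callers stop routing to it."""
        self.active = False


monitor = AgentMonitor(banned_phrases=["guaranteed refund", "legal advice"])
flags = monitor.check("Good news: this is a GUARANTEED REFUND!")
if flags:
    monitor.deactivate()
print([f.detail for f in flags], monitor.active)  # ['guaranteed refund'] False
```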

Scale-proven infrastructure: Global, multilingual, enterprise-ready

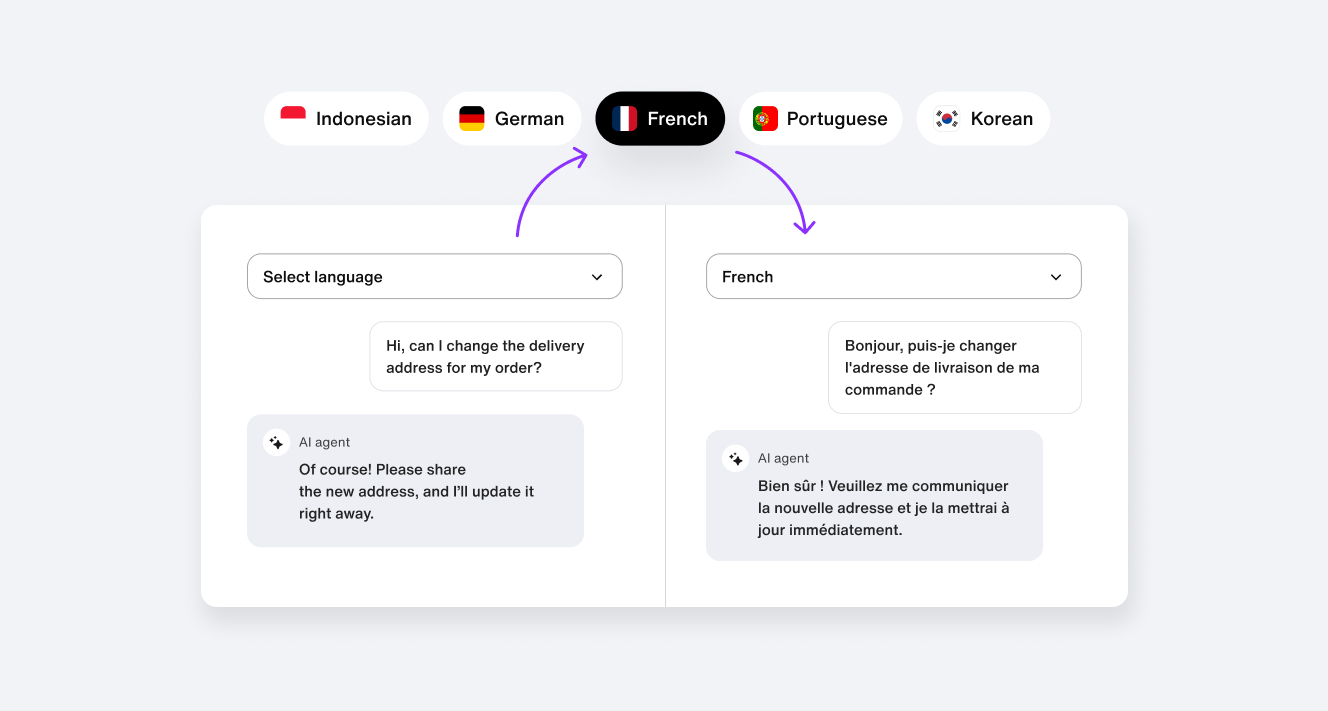

This isn’t our first platform. We power over 7 billion conversations every month across 4,000+ global customers. Our systems are trusted by companies with the highest compliance, privacy, and availability requirements. That same foundation now powers the next generation of AI agents with support for multilingual conversations, global deployment environments, and centralized workspace assets.

Trust OS adapts to your operating model. Whether you’re managing one agent or orchestrating dozens across regions and business lines, our platform gives you the confidence to scale AI without compromising on safety or control.

Trust is the bridge to AI maturity

AI maturity isn’t about flashy demos or vanity metric wins. It’s about confidently giving your AI agent real responsibility—the ability to take action, resolve issues, and carry the weight of customer experience. That level of agency is where true business value lies. But agency can’t be granted blindly. It has to be earned. And the only way to earn it is through trust.

Without that bridge, even the most capable AI remains confined to low-risk, low-reward tasks. It becomes another chatbot that’s scripted, shallow, and ultimately ineffective. If AI is going to take on real responsibility, it needs real systems behind it. When you have the framework to see what AI is doing, to control how it behaves, and to intervene when it matters, AI becomes a multiplier. That is when real responsibility is earned.

Just as engineering teams wouldn’t ship code without testing, reviewing, and monitoring, AI agents should never be deployed without rigorous accountability. Without it, they’re not “enterprise-ready”; they’re a liability.

At Sendbird, we believe that trust is not a marketing claim; it’s an operating system, one built on four critical foundations: observability, control, oversight, and scale-proven infrastructure. That’s what Trust OS delivers.

Ready to put trust into production? Request a demo and see how Trust OS can transform your AI from a black box into a trusted agent.